Enterprise Website Hosting

Table of Contents

Enterprise website hosting is a specialized infrastructure layer designed to support the operational, security, and performance demands of large-scale enterprise websites. Unlike general-purpose hosting, it provides a scalable, high-performance hosting environment that accommodates complex systems, intensive traffic loads, and compliance-bound operations.

Enterprise hosting serves as the infrastructure layer within enterprise digital architecture, enabling controlled environments with configuration flexibility, integration readiness, and service-level guarantees necessary for enterprise-grade web platforms.

At its core, enterprise website hosting is structured to fulfill the multifaceted demands of enterprise-grade infrastructure: infrastructure scalability, security architecture, and uptime SLAs that align with enterprise SLAs.

Such infrastructural needs provided by enterprise hosting are non-optional in enterprise contexts, where digital delivery often spans across regions, serves thousands of concurrent users, and handles integrated content management systems, customer data, and application services. As such, the hosting platform is an operational hub with integrated load distribution mechanisms, compliance-readiness protocols, and real-time monitoring systems built into its framework.

This category of hosting also underpins the full website development lifecycle. From development-ready server environments to staging, rollback, and dedicated testing environments, enterprise hosting provides the necessary isolation and control for development workflows.

DevOps pipelines, CMS integration pipelines, version control tools, and multi-framework compatibility are baseline requirements for enterprise development teams tasked with rapid iteration and deployment under strict performance constraints.

What follows is a breakdown of the various dimensions that characterize enterprise website hosting, including the management models it supports, the infrastructure types in which it is delivered, and the performance attributes that define its reliability.

From managed to unmanaged services, from dedicated hardware to scalable cloud clusters, these dimensions will anchor the technical distinctions that define hosting decisions for enterprise-grade websites.

What is Enterprise Website Hosting?

Enterprise website hosting is a dedicated class of enterprise hosting architecture that fulfills the infrastructure demands of enterprise-scale websites. It is architected to support the operational and developmental requirements of complex, high-volume digital platforms that function across multiple regions, teams, and integrations.

This hosting environment is provisioned for sustaining the lifecycle of enterprise website development, spanning automated staging workflows, scalable deployment pipelines, and runtime workloads that demand uninterrupted service continuity.

As the base layer of the enterprise deployment stack, this infrastructure integrates with CI/CD systems and resource orchestration platforms, serving development pipelines that rely on repeatable, container-based environment replication. This orchestration-ready hosting model provides CI/CD compatible environments that enable repeatable deployment across development pipelines.

The hosting environments operate under system-level isolation, supporting multi-instance architecture to maintain redundancy protocols and uphold compliance provisioning at scale. Enterprise website hosting maintains workload segmentation and uptime guarantees to tolerate dynamic usage patterns typical of mission-critical, content-heavy enterprise websites.

Unlike traditional consumer-grade hosting, enterprise website hosting prioritizes compliance-ready infrastructure and real-time operational resiliency. It integrates developer-oriented control panels and scalable container-based infrastructure to support team-driven operations and environment standardization.

These systems fulfill the security and performance attributes required by industry regulations and internal governance, maintaining compliance as a functional baseline.

By aligning the application hosting layer with the broader enterprise resource architecture, this type of hosting facilitates high-concurrency workflows across multiple development branches.

From managing regional deployments to integrating staging systems into distributed CI workflows, the infrastructure acts as a synchronization anchor point between system codebases and runtime environments.

To understand what sets enterprise-grade hosting apart, it’s essential to compare it directly with conventional hosting environments.

How Does it Differ From Regular Hosting?

Enterprise website hosting serves organizations that treat their websites as production-critical software systems, whereas regular hosting targets a lightweight, general-purpose web presence. While shared and VPS hosting focus on cost-effective web presence for individuals or small teams, enterprise hosting supports high-scale deployments, strict compliance needs, and development-heavy operations.

Functionally, enterprise hosting provides isolated infrastructure with dedicated resources per tenant. Shared hosting operates in multitenant environments where multiple users compete for CPU, memory, and bandwidth. Enterprise environments use containerized or segmented systems that maintain performance consistency under load.

From a development perspective, enterprise hosting integrates with CI/CD pipelines, version control systems, and supports deployment staging, while regular hosting lacks these environments entirely. Regular hosting lacks support for continuous deployment, and typically doesn’t offer rollback environments, pre-production mirrors, or full-stack dev tooling.

Enterprise hosting embeds security and compliance into its architecture through role-based access control and industry certifications, whereas shared platforms rarely meet an enforceable standard.

Solutions for enterprises enforce role-based access, encryption standards, and offer compliance support for frameworks like PCI–DSS, HIPAA, or GDPR. Shared platforms rarely include certified environments or enforceable isolation required for regulated industries.

Enterprise hosting includes advanced monitoring, observability tools, and SLA-backed uptime guarantees. Regular hosting provides minimal diagnostics and best-effort support.

In traffic handling, enterprise systems scale horizontally and vertically with automated orchestration. Shared hosting cannot scale under load and lacks the architectural support to maintain performance during demand spikes.

For engineering teams, the infrastructure behind enterprise hosting directly supports iterative development, testing, and deployment processes — from Git integration to automated build pipelines. It provides what regular hosting does not: control, integration, and operational depth. These differences become especially critical when evaluating how enterprise hosting is managed and structured.

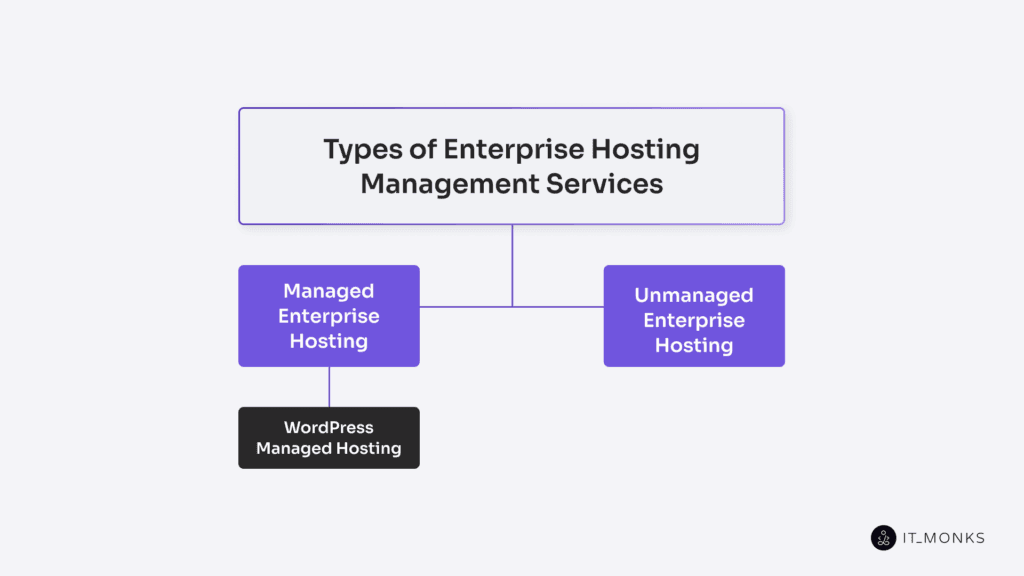

Types of Enterprise Hosting Management Services

Enterprise hosting management services define how control, accountability, and operational burden are distributed across the organization and its infrastructure provider.

Within the context of enterprise website hosting, management service models function as a tier of classification that determines how hosting environments are maintained, provisioned, and integrated into DevOps workflows.

The selected management tier directly affects how an enterprise website is deployed, maintained, and scaled, depending on which responsibilities are handled by the infrastructure provider versus internal teams.

Enterprise hosting management services determine how responsibilities are assigned between the vendor and the enterprise’s internal teams. This distribution of control has direct implications for how development pipelines operate, how server configurations are managed, and how consistently system maintenance tasks are executed.

In highly regulated or high-traffic environments, these models identify whether tasks like software patching, infrastructure configuration, and incident response are handled by internal DevOps teams or the infrastructure provider.

The management tier chosen will either shift sysadmin tasks to external infrastructure providers or retain them in-house, demanding a more hands-on approach from internal DevOps personnel.

From a strategic standpoint, selecting a hosting management service model is one of the initial decisions in enterprise infrastructure planning, as it structures technical autonomy, support delegation, and system upkeep workflows.

Not all enterprise websites operate with the same internal capabilities, and hosting services must accommodate a range of operational readiness, from hands-off vendor-maintained systems to self-managed environments that require deep infrastructure fluency.

Some enterprise environments benefit from hands-off hosting models where the provider manages infrastructure, while others rely on self-managed environments that demand deep internal expertise.

There are two primary management models that enterprise teams typically evaluate – managed enterprise hosting and unmanaged enterprise hosting.

Managed Enterprise Hosting

Managed enterprise hosting is a service delivery model in which the hosting provider assumes infrastructure control, system-level responsibilities, and runtime environment management, allowing enterprise dev teams to focus on application layer development, content delivery, and deployment logic.

Enterprise managed hosting assigns administrative authority across the infrastructure layer to the hosting vendor, automating OS-level patching, enforcing uptime SLAs, orchestrating services, resolving vulnerabilities, and managing real-time monitoring.

The managed hosting environment provides a platform-administered infrastructure with a compliance-ready stack, integrated monitoring tools, and automated backup routines.

The vendor maintains a runtime environment that is optimized for continuous deployment pipelines, supporting tasks like version-controlled deployments, CI/CD compatibility, and environment staging.

Developer-facing toolsets such as role-based access controls, centralized configuration panels, and dashboard-driven performance metrics provide operational clarity without obligating the team to perform system-level work. These dashboards consolidate runtime monitoring, staging visibility, and rollback tracking into a unified control panel, reducing response time and operational uncertainty.

This model is tailored for enterprise development teams operating with high-frequency deployment schedules, automated QA pipelines, and content-driven workflows, especially when internal DevOps capabilities are limited. It benefits organizations that require immediate recovery pathways, audit-ready infrastructure, and development-ready environments without diverting resources toward infrastructure upkeep.

However, the platform-controlled nature of managed enterprise hosting introduces certain constraints. Reduced access to low-level system components and limited customization across the stack may limit advanced system-level tuning or compatibility with non-standard, legacy application frameworks..

One of the most common implementations of managed enterprise hosting occurs within WordPress-based enterprise websites, where CMS-specific tools and automation play a central role in operations.

WordPress Managed Hosting

WordPress managed hosting is a CMS-specific hosting subtype optimized for enterprise-level WordPress websites, where the hosting provider handles both infrastructure tasks and WordPress-specific operations such as plugin and theme lifecycle management, database optimization, and multisite orchestration.

Unlike conventional managed hosting, this model overlays a CMS infrastructure layer specifically architected for the operational demands of WordPress at scale. In this model, the provider delivers a pre-configured environment that tightly integrates with the plugin management system, deployment pipeline, and filesystem configuration, ensuring stability, version control, and scalability.

A WordPress enterprise website hosted on this stack benefits from centralized governance and predictable performance characteristics. It represents a core component of a broader WordPress enterprise solution — an architecture designed to unify governance, security, and performance across complex digital ecosystems.

The hosting provider automates plugin oversight, enforces version control across custom or marketplace themes, and deploys auto-core updates with rollback support. Integrated WAF policies and continuous malware scanning with auto-remediation safeguard the CMS firewall surface.

The CDN/WAF/cache layer is fully unified, including object caching tuned for WordPress query patterns, edge cache purging tied to permalink changes, and content-aware invalidation rules. Database engines are optimized for high I/O throughput and schema-specific tasks such as postmeta indexing and transients cleanup.

Enterprise multisite networks are orchestrated using shared-core topologies with isolated plugin/theme policies, governed entirely by the provider.

These environments are engineered for development-ready workflows, with Git-integrated deploy hooks, CLI-accessible operations via WP–CLI, and staging layers built directly into the hosting control plane.

The provider exposes REST API endpoints through secure, rate-limited gateways, enabling decoupled frontend deployments across headless WordPress and JAMstack setups. Staging environments support full-content synchronization and snapshot-based rollbacks, allowing the provider to roll back update failures or sync staging to live instances on demand, without developer intervention.

Editorial workflows are streamlined through structured content scheduling, Gutenberg block-level customization, and multi-author revision controls, making it ideal for newsroom-style publishing, marketing-heavy CMS stacks, or multinational content operations.

Whether powering a global brand hub, a multilingual editorial portal, or a marketing-driven CMS implementation, WordPress managed hosting delivers scalable, secure, and CI/CD-compatible infrastructure for modern enterprise applications.

For organizations not operating on a CMS like WordPress — or requiring deeper system control — an unmanaged hosting model may offer more flexibility for custom deployments and infrastructure tuning.

Unmanaged Enterprise Hosting

Unmanaged enterprise hosting is a hosting model where infrastructure-level operations, maintenance, and system configuration are fully controlled by the enterprise’s internal development or DevOps team. The provider provisions hardware and maintains uptime, but all configuration, patching, security, backups, and monitoring are owned internally.

This SLA responsibility shift places operational accountability directly on the DevOps-owned infrastructure. It’s a model built around full root-level access, not vendor oversight.

Such setup excludes CMS-level tooling, automated updates, and system maintenance by design. Teams must manage Linux administration, log aggregation (e.g. ELK, Grafana), orchestration tools (Docker, Kubernetes), and provisioning systems like Terraform or Ansible. Manual patching workflows, runtime alerting systems, and custom firewall rule configuration are essential components of the monitoring stack.

Unmanaged hosting enables developer-controlled environments using custom CI/CD logic, containerized deployments, and complex staging servers. This configuration supports unrestricted stack deployment, middleware integration, and custom CI/CD orchestration pipelines. It’s preferred in ecosystems with strict compliance frameworks, hybrid legacy-modern stacks, or microservices that require non-standard infrastructure.

It offers flexibility and control, but with increased maintenance and monitoring responsibilities. Every decision — from update timing to scaling policy — rests with the internal team. This model suits engineering-driven enterprises with infrastructure maturity, not teams seeking hands-off hosting. It facilitates enterprise use cases such as bare-metal deployments, compliance pipelines like HIPAA or SOC 2, and headless architecture integration.

Regardless of how responsibilities are assigned, the underlying architecture — cloud, dedicated, or hybrid — remains central to performance, availability, and control.

Unmanaged enterprise hosting offers flexibility and control, but with operational load. Every decision — from update timing to scaling policy — rests with the internal team. This model suits engineering-driven enterprises with infrastructure maturity, not teams seeking hands-off hosting.

Regardless of how responsibilities are assigned, the underlying architecture — cloud, dedicated, or hybrid — remains central to performance, availability, and control.

Hosting Infrastructure Types for Enterprise Websites

Enterprise hosting is underpinned by infrastructure architectures that serve as the foundation for all technical functions, from deployment to scalability. This infrastructure layer, distinct from the management model, defines the physical or virtual architecture where the enterprise website is hosted, and it significantly impacts application performance, uptime strategies, development integration, and resource scaling.

There are two primary hosting infrastructure types that support enterprise environments: dedicated server hosting and cloud hosting. These represent divergent approaches to how resources are provisioned, isolated, and scaled. The enterprise’s selection between these models typically reflects internal priorities around performance, budget control, operational complexity, and alignment with engineering workflows.

Dedicated server hosting provisions enterprise websites on single-tenant, bare-metal hardware. Such architecture supports physical isolation and deterministic performance, making it suitable for workloads with strict compliance requirements or consistent resource demand.

In contrast, cloud hosting utilizes virtualized infrastructure layered over distributed compute resources. It favors elastic scaling, rapid provisioning, and integration with container orchestration platforms, which align with DevOps-heavy teams and CI/CD-driven development.

Each hosting infrastructure type configures distinct runtime environments and performance boundaries, influencing uptime strategies and CI/CD compatibility. The resource provisioning model, whether fixed or dynamic, determines how well an enterprise can support horizontal scaling, real-time failover, or versioned deployment strategies.

Enterprise Dedicated Server Hosting

Enterprise dedicated server hosting is a single-tenant physical hosting solution that offers organizations exclusive access to an entire server, giving them full control over hardware-level resources, system stack configurations, and security implementations.

Unlike virtualized or shared infrastructure models, dedicated enterprise hosting is a bare-metal machine provisioned solely for one enterprise’s workload, guarantees exclusive access to CPU, memory, and disk IOPS, ensuring full resource isolation from neighboring tenants.

A dedicated server is provisioned on physical server hardware housed in a datacenter operated by an infrastructure provider, but the machine is never virtualized or partitioned among multiple clients.

Enterprises maintain root-level control over the operating system, middleware stack, deployment tools, and all associated services, which allows for tailored kernel modifications, OS-level patch schedules, custom RAID setup, ECC memory tuning, and performance tuning based on specific workload profiles. This depth of system-level access makes the model well-suited for environments requiring strict configuration governance, like those tied to HIPAA or SOC 2 compliance.

Because enterprise dedicated server hosting provides predictable throughput and fixed resource allocations, it’s particularly well-aligned with latency-sensitive applications, vertically scaled CMS deployments, or legacy enterprise software that cannot efficiently operate within hypervisor-based systems, making dedicated hosting ideal for hypervisor-free execution environments.

Use cases frequently include on-premise to hosted migrations, monolithic ERP platforms, and security-sensitive web applications with fixed capacity profiles. Static provisioning also benefits teams who require infrastructure consistency across development, staging, and production, especially when CI/CD pipelines need to reflect identical machine states.

However, this model comes with operational tradeoffs. Bare-metal deployment introduces longer provisioning windows compared to cloud services, lacks automated elasticity, and typically demands higher upfront commitments in both hardware and management.

The absence of rapid horizontal scaling may also introduce challenges for traffic bursts unless paired with enterprise-grade load balancing, manual orchestration, and failover setups.

For enterprise website development, dedicated servers offer deterministic performance and complete autonomy over the deployment stack, making them valuable for scenarios where uptime, isolation, and performance reproducibility take precedence over rapid scale-out strategies.

While dedicated servers offer unmatched control and isolation, many enterprises prioritize elasticity and rapid scaling, leading them toward cloud-based infrastructure models.

Enterprise Cloud Hosting

Enterprise cloud hosting is a virtualized, on-demand infrastructure environment that delivers scalable compute, storage, and networking resources through a distributed, API-accessible architecture. It abstracts physical server constraints by provisioning virtual machines and containers on dynamically orchestrated platforms maintained by enterprise-grade cloud infrastructure platforms like AWS, GCP, or Azure, without attributing provisioning control to the vendor.

These platforms are optimized for performance elasticity, real-time redundancy, and automation-driven provisioning through RESTful APIs.

The core advantage of this infrastructure model lies in its elastic resource provisioning. Enterprise workloads are orchestrated with horizontal and vertical scaling policies that adjust compute power and storage based on real-time traffic demands. Deployments are distributed across multiple global availability zones, maintaining redundancy strategies that support fault tolerance and regional resilience.

Cloud-native environments enable dynamic provisioning of virtual instances, backed by load balancer configurations and container runtimes such as Docker or Kubernetes, all of which are integrated with infrastructure-as-code (IaC) pipelines and version-controlled manifests.

Enterprise web development workflows align with cloud hosting due to the system’s compatibility with modern DevOps toolchains and CI/CD pipelines. Infrastructure components integrate with IaC manifests and are declaratively managed through tools like Terraform, allowing for granular control over deployment phases.

Container orchestration frameworks facilitate rolling updates, blue-green deployments, and microservice-based architecture support. Automated scaling policies, real-time logging, and distributed monitoring reduce manual overhead while improving service uptime and release velocity.

This infrastructure model fits enterprises handling auto-scaled deployments, international content delivery through CDN integrations, or frequent staging/testing cycles. It’s an essential fit for agile teams that iterate rapidly, handle high-concurrency traffic, or deploy headless CMS stacks. Cloud hosting enables organizations to orchestrate dynamic scaling policies instead of relying on static capacity forecasts.

Compared to dedicated hosting, which relies on isolated hardware, cloud hosting for enterprise uses a shared resource pool structured for scalability, programmability, and global reach, reinforcing its role as a foundational component of contemporary enterprise website hosting infrastructure.

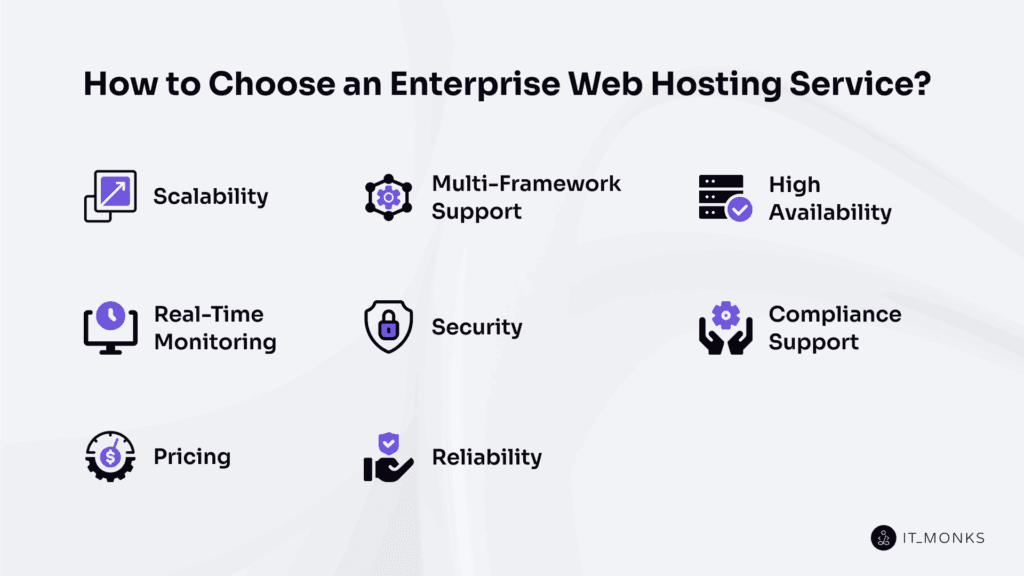

How to Choose an Enterprise Web Hosting Service?

While choosing between dedicated and cloud infrastructure defines the baseline, selecting an enterprise hosting service requires evaluating how well it supports operational needs, development workflows, and compliance obligations.

Different enterprise teams have different priorities; some need scalable infrastructure for high-traffic deployments, while others rely on multi-framework compatibility to support diverse codebases. Regulatory scope, uptime requirements, and real-time diagnostics shape deployment velocity, developer productivity, and risk management.

To evaluate an enterprise hosting provider, 8 key dimensions must be assessed: scalability, multi-framework support, high availability, real-time monitoring, security, compliance support, pricing, and reliability. Each directly affects how infrastructure handles workloads, how fast teams can ship, how issues are traced, and how data governance is enforced.

Insufficient observability undermines incident response precision and extends time-to-resolution. Rigid billing models disrupt budget alignment across fiscal cycles. Lack of verified compliance coverage jeopardizes risk posture and operational accreditation.

Reliable enterprise hosting must integrate real-time analytics, enforce SLA windows, support multiple runtimes, and align with internal access controls and deployment strategies. These criteria are the foundation for choosing infrastructure that scales, supports, and protects enterprise websites.

Scalability

Scalability is a critical technical attribute of an enterprise hosting platform, defining its ability to expand compute, memory, and storage resources based on traffic load. This dynamic adjustment maintains performance stability during usage peaks, supports efficient deployments, and helps manage infrastructure costs. Scalable platforms ensure predictable application throughput and operational continuity across fluctuating usage patterns.

There are two main approaches: vertical scaling adds resources to a single machine, while horizontal scaling replicates nodes or containers across clusters. Horizontal methods — especially when implemented via container orchestration systems like Kubernetes or ECS — offer the elastic resource provisioning required for modern enterprise workloads. Scaling can be manual, triggered by infrastructure workflows, or automated using metric-based thresholds for CPU, memory, or request volume.

The enterprise hosting platform’s autoscaling infrastructure plays a key role in DevOps workflows, keeping application latency predictable during CI/CD rollouts or live traffic bursts. Instance replication, load distribution, and capacity buffer allocation prevent outages and help platforms respond to planned or unpredictable spikes.

Scale-to-zero logic, used in some systems, removes idle resources entirely for efficiency. Scaling logic must align with deployment cadence and integrate seamlessly with application monitoring systems to enable zero-downtime scaling during production rollouts.

Different hosting platforms apply scalability with varying tools and strategies — from autoscaling groups in cloud environments to scripted provisioning on dedicated servers. For enterprises assessing how to choose scalable hosting, it’s essential to evaluate how each hosting platform provisions additional instances, manages scaling thresholds, and supports load-aware provisioning strategies — all of which impact cost efficiency and multi-region workload orchestration.

Hosting vendors may also differ in how they implement auto-provisioning, whether through elasticity layers or CPU throttling safeguards to balance performance and cost. But elasticity alone isn’t enough — for enterprise websites running hybrid stacks or using headless components, hosting compatibility with multiple frameworks is equally critical.

Multi-Framework Support

Multi-framework support is a critical hosting capability where the platform allows simultaneous support for different programming languages, frameworks, CMSs, and runtime environments through isolated containers and language-agnostic execution models, enabling cross-functional teams to deploy and maintain their services without needing separate infrastructure stacks.

In enterprise setups, this is necessary due to the common use of decoupled frontend and backend architectures, often combining React or Vue interfaces, Django or Laravel APIs, and CMS layers like WordPress or Strapi within the same application scope.

An enterprise hosting platform must support parallel framework execution via container orchestration and runtime isolation to prevent dependency conflicts. This is achieved through container-based service delivery (e.g., Docker), runtime isolation for each service, and reverse proxy routing to direct requests appropriately based on service paths and framework-specific rules.

Backend services might rely on Node.js or Python, while frontend deployments use static site generators like Gatsby — all of which are supported through isolated node processes and version-specific runtime provisioning.

Hosting providers integrate with CI/CD tooling to handle per-framework deployment pipelines, manage environment variables, isolate logs per service, and enable Git-based deployment logic and autoscaling across runtimes.

This structure supports API-first development, microservice endpoints, serverless-compatible functions, and headless CMS delivery. Without these capabilities, organizations risk siloed deployments, duplicated infrastructure, and scaling limitations.

Multi-framework support isn’t an enhancement — it’s foundational in hybrid enterprise architectures. And even when runtime diversity is supported, consistent performance across layers becomes the next demand, which leads directly to high availability.

High Availability

High availability is a measurable attribute of enterprise hosting platforms that ensures continuous website uptime, even in the event of hardware failures, software faults, or traffic spikes. It’s engineered through redundant infrastructure and fault isolation across geographically distinct availability zones.

Service-level agreements define availability as a specific uptime percentage. Common tiers include 99.9% (8.76 hours/year), 99.99% (52.56 minutes/year), and 99.999% (5.26 minutes/year). These correspond to approximately 525.6, 52.56, and 5.26 minutes of annual downtime, respectively, reinforcing how even fractional uptimes carry major operational implications. These thresholds are enforced through contractual terms and monitored for compliance.

High availability infrastructure relies on load-balanced clusters, automated failover controllers, and active-active or active-passive configurations. Traffic is routed through redundant nodes, and services are replicated across regions. Redundancy spans at least two disaster recovery zones, and failover response is engineered for sub-60-second detection and action.

Health checks and heartbeat signals, monitored via node watchdogs, trigger system responses like node restarts or traffic rerouting. Recovery Point Objectives (RPO) are typically under five minutes, with Recovery Time Objectives (RTO) between one and five minutes.

High availability enables development teams to perform non-disruptive deploys, replicate databases across fault domains, and maintain rollback-ready staging systems. Blue-green deployment models deliver zero-downtime updates by alternating between production-ready environments.

Evaluating high availability means reviewing monitoring intervals, zone distribution, and historical uptime. Monitoring systems like Pingdom or Datadog must support synthetic checks and real-time alerts. Without this, uptime claims remain unverifiable. But guaranteeing uptime is only possible when backed by real-time monitoring systems capable of detecting service degradation before failure.

Real-Time Monitoring

Real-time monitoring is the continuous, automated observation of system behavior, performance metrics, and availability states within the hosting environment, designed to trigger alerts, feed dashboards, and inform both humans and machines of anomalies as they happen.

In enterprise hosting, it ensures uptime tracking, deployment diagnostics, and incident prevention, optimizing CPU load distribution, memory usage, and disk I/O latency across nodes and services.

Monitoring systems stream high-frequency data — often every 1s to 5s — from CPU usage, memory load, disk I/O (e.g., spikes above 300 MB/s), network traffic, app response times, error rates, container restarts, SSL cert validity, and DNS latency. These signals are collected by the monitoring stack using agents like Prometheus or Datadog, backed by synthetic checks and heartbeat probes.

Each metric is measured against alert thresholds. If response time exceeds 500 ms or a pod restart pattern is detected, escalation flows trigger — routing alerts to the DevOps or incident response team. Dashboards visualize current and historical data, enabling the incident response team to detect root causes and validate system recovery timelines, while distributed tracing and log aggregation support debugging and SLA audit trails.

An enterprise hosting platform integrates with a full monitoring system to track CI/CD releases, validate failovers, detect anomalies, and maintain SLA uptime feeds. Real-time observability directly informs rollback actions, compliance reporting, and service-level availability guarantees.

Key features buyers should expect: high-resolution telemetry feeds, customizable thresholds, alert integration with ops workflows, log correlation, and retention control. The right monitoring configuration ensures real-time performance visibility and drives responsive operations across services and deployments.

Security

Security in enterprise hosting is a structured, enforceable, and testable framework that protects websites from external threats, internal misconfigurations, and data loss, while supporting development, staging, and CI/CD workflows without expanding the attack surface. The platform enforces risk boundaries through architecture-level segmentation and policy enforcement.

The system enforces layered defenses, as it applies TLS 1.3 across endpoints at the network level, filters traffic using DDoS mitigation and endpoint firewalls, and detects anomalies through intrusion detection systems (IDS). TLS handshake validation ensures authenticity at every connection.

Application security includes web application firewalls (WAF), rate limiting, code injection detection, and webhook signature validation. Infrastructure hardening uses secure containers, sandboxed environments, and OS hardening practices to reduce exploitability.

Endpoint firewalls and OS configurations establish a minimized security perimeter at runtime. Access is restricted through IAM policies, multi-factor authentication, least-privilege role-based permissions, and comprehensive audit trails. Access audits are triggered on failed authentication attempts, ensuring traceability across all login and system events. Encryption is enforced using AES-256 at rest and TLS 1.3 in transit, without exception.

CI/CD pipelines and staging environments inherit hardened configurations from Infrastructure-as-Code (IaC) manifests. Builds are scanned for CVEs, and each deployment includes a software bill of materials (SBOM) check to validate dependency integrity. Hash-based integrity checks are run before deployment, and scoped deployment keys follow strict privilege enforcement. Rollback paths apply the same access controls and policy enforcement as production, keeping all environments security-consistent.

Leading providers support this architecture with SOC 2 and ISO 27001 compliance, regular third-party audits, scheduled penetration testing, and continuous event monitoring. A security event monitoring tool detects anomalies, triggers alerts, and forwards event data to compliance officers for review. Policies are enforced with documented logic, not assumptions. Security isn’t just claimed — it’s continuously verified.

Buyers should confirm access to system logs, IAM policy transparency, certificate rotation schedules, and documented breach response procedures. This includes audit trail depth, 90-day log retention minimums, 2FA timeout settings, and verified alert thresholds for login anomalies. A secure enterprise platform is traceable, not just labeled.

Compliance Support

Compliance support is the set of technical capabilities, infrastructure controls, and documentation layers that enforce regulatory alignment across GDPR, HIPAA, SOC 2, PCI-DSS, and other frameworks for enterprise hosting environments.

This support is built into how data is handled, stored, and audited across environments. It encompasses regional data residency enforcement, encryption at rest and in transit, audit log retention, and contractual compliance guarantees through SLAs and signed BAAs.

Enterprise websites are often required to meet rigorous compliance frameworks. GDPR compliance involves EU-region data residency, consent handling, and deletion policies for PII. Enterprise hosting platforms support HIPAA compliance by encrypting PHI storage, logging access events, and enabling breach notification standards. SOC 2 Type II mandates operational controls, confidentiality, and system integrity.

PCI-DSS applies to cardholder data protection, enforcing storage isolation and fine-grained access policies. Each framework mandates infrastructure-level controls and documentation practices that directly impact how enterprise websites process, transmit, and store sensitive data.

Enterprise hosting providers enforce these requirements through policy-driven infrastructure. Data geofencing restricts regional flow; encryption protocols like AES-256 and TLS 1.3 are enforced by default; and access logs track all system-level events, retained for a minimum of 12 months, aligning with audit control point requirements.

Signed Business Associate Agreements (BAAs), documented SLAs, and regulatory support clauses are integrated into the contract stack for regulated workloads. Certified infrastructure partners may also include signed compliance appendices and attestation reports as part of their service-level compliance guarantees.

Developers still play a critical role, as secure CI/CD pipelines, staging environments, and backup systems must enforce compliance-aligned retention policies, encryption configurations, and access control even in non-production environments. PII or PHI must not leak into test workflows, and all third-party integrations must adhere to defined retention windows and audit trail enforcement. Audit officers and legal teams often review log exports from CI/CD pipelines and backups to verify compliance integrity.

To evaluate compliance scope, verify the hosting platform’s documentation that outlines regulatory zone isolation, minimum log retention (e.g., 12 months), encryption mechanisms (AES-256, TLS 1.3), and contractual clauses such as BAAs and SLA-backed attestation reports. Compliance is a structural part of enterprise hosting; while these guarantees elevate enterprise hosting reliability, they also influence pricing structures, especially for regulated industries requiring 99.99% uptime or audit-grade infrastructure.

Pricing

Enterprise hosting pricing reflects the architectural complexity of each deployment. Providers scale pricing based on CPU core count, RAM volume (e.g., 16 GB), SSD type and capacity (e.g., 500 GB NVMe), and outbound bandwidth usage (e.g., GB/month).

Dedicated servers and containerized environments are priced differently than shared hosting due to their isolation overhead and orchestration complexity. A container instance billed per second may be more cost-efficient for short-lived workloads, while a reserved 8 vCPU, 32 GB RAM instance could benefit long-term environments via flat-rate discounts.

Key cost drivers include SLA enforcement, security, compliance, and support. Providers often charge a premium for 99.999% uptime SLAs compared to 99.9%, since higher guarantees demand redundant infrastructure, failover systems, and continuous monitoring.

Security feature packages — like WAF, DDoS mitigation, and log retention — are typically offered in tiered bundles or as metered add-ons, with costs increasing based on scope and retention duration. Compliance add-ons (HIPAA, SOC 2, GDPR) often introduce separate surcharges for audit logging, encryption enforcement, and access controls. Some regions, like the EU, may trigger GDPR-specific hosting fees.

Billing models vary based on environment type and provisioning method. Hourly billing is common for elastic cloud compute, per-second billing applies to container workloads, and reserved resource pricing offers predictable monthly rates for fixed-term commitments (e.g., 1–3 years).

Usage-based billing may apply to bandwidth, storage, or user sessions. Support tier cost also differs — standard support may be included, but 24/7 engineering access with SLA-bound response times can significantly increase total cost.

Hidden charges can impact total spend. Data egress beyond the included quota is usually billed per GB, with typical rates ranging from $0.05 to $0.12/GB. Backups, multi-version snapshots, and multi-region replication are billed separately, often using per-GB storage tiering.

Overages on compute, IOPS, or storage may trigger premium multipliers beyond defined thresholds. Provisioning fees or region-based failover pricing may apply depending on the redundancy scope.

Before committing, buyers should evaluate pricing transparency and clarify all included components. It’s essential to understand how resource use maps to metered billing, what baseline features are bundled, and where scaling triggers variable costs. Monthly pricing doesn’t reveal the full structure — enterprise hosting reflects the cost of resilience, control, and support baked into the platform.

Reliability

Reliability in enterprise hosting is the consistency of operational performance, proven through redundancy models, uptime history, fault-tolerant design, and predictable recovery mechanisms. It enables the hosting infrastructure to keep running during stress, failures, or changes — such as traffic spikes, node crashes, or software rollouts — without disrupting service.

Reliability is delivered through infrastructure components such as hardware redundancy (RAID 10 arrays, dual NICs, dual power supplies), multi-zone replication, DNS failover logic, and autoscaling groups with stateful container design. These components enable fault domain isolation and self-healing behavior during node failures or regional disruptions, maintaining service continuity through redirection and automated recovery. Version rollback pipelines and consistent CDN propagation further reinforce zero-impact update cycles.

Reliability is measured through historical uptime logs, MTTR (Mean Time to Recovery), RTO (Recovery Time Objective), and vendor-published SLA dashboards. For example, enterprise-grade environments may report MTTR under 5 minutes and RTO under 15 minutes. While SLA targets claim 99.99% uptime, real-world performance is verified via status logs, incident timelines, and third-party transparency dashboards.

While vendors promote SLA compliance and “five-nines” availability, true reliability is observed in how platforms behave during stress. Platforms that withstand multiple simultaneous node failures, recover state within minutes, and trigger automated rollbacks without user impact demonstrate infrastructure-level reliability.

Development teams should validate rollback success rates, incident resolution speeds, and platform-wide node fault tolerance. Key indicators include the number of node failures tolerated without disruption, speed of DNS failback mechanisms, state retention in container restarts, and whether autoscaling preserves connection pool integrity during burst events.

Reliable Enterprise Hosting Providers

WordPress VIP

WordPress VIP is a specialized enterprise WordPress hosting provider operated by Automattic, built to support large-scale enterprise websites with complex infrastructure and governance requirements. It’s designed for organizations that demand robust compliance, editorial control, and performance predictability, especially those managing high-velocity publishing or multisite frameworks.

The platform delivers autoscaling WordPress infrastructure through containerized environments with real-time traffic adaptation, backed by SLA guarantees like 99.99% uptime. WordPress VIP partners with enterprise-grade CDNs like Fastly and Cloudflare to route traffic through globally distributed edge nodes, ensuring content stability and low-latency delivery across diverse regions.

Governance is foundational; WordPress VIP enforces content workflow integrity through role-based publishing permissions, plugin governance policies, and strict development gatekeeping.

For development teams, WordPress VIP supports CI/CD-integrated, gated deployment environments with dev-approved review pipelines and staging-to-production release workflows. It aligns closely with security and compliance teams by integrating audit logs, access controls, and content compliance frameworks.

Editorial groups benefit from tools that structure multilingual and multisite governance while retaining centralized oversight. This governance framework includes role-based content permissions, plugin governance enforcement, and audit-ready publishing logs.

To see how WordPress VIP compares with other top-tier platforms, check our detailed comparison of the best enterprise hosting providers.

WP Engine

WP Engine is an enterprise-capable hosting platform with an emphasis on developer-focused tools, high-performance WordPress deployment, and customer success support structures. While not as governance-heavy as WordPress VIP, it appeals to teams prioritizing speed, agility, and performance optimizations within scalable WordPress environments.

For enterprise-grade WordPress operations, WP Engine provisions isolated dev/stage/prod environments with Git-based deployment pipelines, SSH gateway access, and support for custom CI workflows. It supports enterprise WordPress performance through WP-specific caching layers like EverCache, build-time asset optimization, and staging push configurations.

The platform is engineered to handle high-traffic ecommerce and media-heavy deployments, aligning with teams that rely on flexible developer control panels and plugin lifecycle management. Genesis framework optimization further enhances performance tuning for frontend delivery, while WordPress runtime environments are architected around scalable database backends and isolated compute resources.

Support on the enterprise tier includes a dedicated technical account manager, 24/7 access to WordPress-specialized engineers, and SLA-backed performance monitoring. The platform automates plugin and core updates, provides real-time telemetry via performance dashboards, and reinforces operational reliability through a distributed failover system. WordPress debugging tools and uptime commitments at 99.99% are tied directly to proactive monitoring and response systems.

A full comparative breakdown of WP Engine’s performance and positioning among enterprise hosts is included in our detailed hosting comparison.

Kinsta

Kinsta is a reliable enterprise-grade WordPress hosting platform, known for its use of container-based deployments, Google Cloud backbone, and emphasis on scalability and developer autonomy. Kinsta delivers enterprise WordPress hosting through a container orchestration architecture tailored for agile, development-led teams that demand autonomy, rapid deployment cycles, and minimal platform overhead.

It stands apart from governance-heavy solutions by prioritizing developer-first environments, making it ideal for teams managing multiple microsites or operating under fast-moving iteration models.

Kinsta runs entirely on the Google Cloud Platform, provisioning deployments across 35+ GCP data centers. Each WordPress site is isolated in its own LXD container, with dedicated PHP workers, an independent MySQL database, and no shared resources across environments.

This architecture enforces a 99.99% uptime SLA, while GCP’s multi-zone redundancy ensures continuity during regional failures. Deep Cloudflare integration provides global edge routing, CDN acceleration, HTTP/3 support, firewall policies, and DDoS protection without requiring additional setup.

Developer workflows are supported through SSH access, WP-CLI integration, Git-based deployment pipelines, and dedicated development, staging, and production environments. Kinsta enables real-time debugging via application-level APM tools, exposing bottlenecks in both PHP execution and database performance.

The platform automates daily backups with retention windows of up to 30 days, and cached assets consistently achieve <100ms global TTFB. The developer-first control panel provides visibility into PHP worker scaling, reverse proxy configurations, and server-level health checks.

Support is built around 24/7 human live chat, with no reliance on bots, making it viable for high-traffic, multisite, and SLA-bound deployments that demand real-time triage.

How Does Enterprise Hosting Support Website Development?

Modern enterprise website development is a multi-threaded, always-on process involving cross-functional teams, staging validations, and version-controlled deployments — all happening concurrently across distributed environments.

Without hosting that provisions isolated environments and orchestrates deployment logistics, this development cadence breaks. That foundation is enterprise hosting, the engine that drives the entire development lifecycle. Learn more in our Enterprise Website Development Guide.

To support this continuous and collaborative structure, an enterprise hosting platform provisions pre-configured environments and orchestrates developer workflows. These environments are required for parity testing, secure isolation, and sandboxed failure points. Hosting platforms also support large team workflows through role-based access control, branching-aware deployment routing, and CI/CD integrations that can handle multiple concurrent pipelines without collision. This includes artifact versioning, container lifecycle alignment, and deployment traceability, all mapped to role-based access and branching policy control.

Enterprise hosting platforms integrate these pipelines natively, using hosting-level build runners and webhook triggers for CI/CD automation. Version control systems such as GitHub or GitLab sync directly into the hosting stack, triggering automated pipelines that compile, test, and promote builds through approval gates.

Hosting layers validate builds before live release, enforce rollback checkpoints for failed promotions, and log version metadata with each commit. This orchestration enables rollback in under 30 seconds, staging parity testing without touching live code, and release cycles that reach into double digits per day — all with traceability.

Together, these components constitute the infrastructure layer that provisions developer sandboxes, synchronizes commits, validates deployment logic, and supports versioned, rollback-ready CI/CD orchestration. At the foundation of this stack lies the environment layer — isolated, repeatable, and aligned with production.

Development-Ready Server Environments

A development-ready environment in enterprise hosting is an isolated runtime infrastructure instance that mirrors the production stack in configuration, software versions, and resource allocations. It matches PHP version, database engine, and CDN layer to ensure runtime parity and increase deployment predictability. Each provisioned environment represents a config mirror of the live tier, minimizing drift and enforcing consistent debugging expectations.

Each environment runs with separate allocations for CPU, memory, and file systems, enforcing full isolation across teams or projects. Logs, debugging hooks, and error capture tools are integrated for environment-specific fault detection during early development and testing cycles.

These environments are structured in tiers: development containers for feature implementation, staging layers for QA and CI/CD promotion checkpoints, and production as the revert-ready layer. Optional zones — such as rollback-capable sandboxes, UAT instances, or client preview environments — can be provisioned on demand. All tiers maintain runtime parity through auto-synced configuration templates, preventing environment drift across the deployment pipeline.

Access is enforced via permission-based staging policies. Teams interact through SSH, CLI, or web-based consoles, with scoped rights per environment tier. Git branches can be mapped to branch-targeted preview environments, enabling isolated feature testing without touching the shared runtime.

Provisioning is automated through scripts and templates like Terraform or Helm, creating immutable deployment systems tied to version control states. Templates support both persistent dev instances and ephemeral testing zones configured for lifecycle-aware deployment cycles. These ephemeral zones deploy in under 90 seconds and auto-expire within 24–72 hours to optimize resource allocation across teams.

Once these isolated environments are active, development syncs with version control and CI/CD pipelines — tying code to infrastructure via feature-branch deploys, hotfix staging, and revert-ready layers. With the environment lifecycle aligned to source control, the next critical factor becomes developer collaboration, and that’s where version control systems step in.

Version Control and Collaboration Support

In enterprise hosting environments, Git-based version control acts as the backbone for code integrity, governing how code moves, who reviews it, and how it reaches deployment through structured, auditable flows. Feature and release branches are mapped to isolated staging and testing environments, enabling pre-merge validation and multi-user development streams.

Hosting platforms integrate repository webhooks to trigger builds automatically on each commit, enforcing branch-to-environment linkage. Every change is logged with contributor identity, timestamp, and review status.

Most enterprise platforms integrate directly with Git providers such as GitHub (via OAuth), GitLab, or Bitbucket, enabling real-time hooks and commit metadata ingestion. Role-based access defines who can push, review, and deploy. Merge requests must pass approval gates.

Actions like approvals and rollbacks are recorded in audit logs, creating a traceable workflow from commit to release. These logs typically retain commit history and contributor actions for 90 to 180 days, supporting compliance audits and rollback events.

Pull requests serve as gating mechanisms between feature branches and production, enforcing pre-merge reviews, approval thresholds, and testing validations before promotion. Hosting environments track each promotion step and retain commit history to support rollback if needed.

By tying Git repositories to infrastructure behavior, enterprise hosting ensures development is auditable, reversible, and controlled. This version control layer becomes the base for automated delivery through CI/CD systems. With version control enabling traceable collaboration, the next step is automated delivery, where CI/CD pipelines take over code movement and enforce rollout safety.

CI/CD Pipeline Compatibility

CI/CD pipelines in enterprise hosting automate code testing and deployment to validate code integrity across environments. Continuous integration triggers automated test suites on each commit, while continuous deployment promotes code to staging or production based on pass conditions and policy gates, often within under 2 minutes for staging promotion.

Enterprise hosting platforms integrate with CI/CD tools through native support or webhook endpoints. Some offer built-in pipelines with environment-specific deploy controls, while others support external systems like GitHub Actions or GitLab CI, which define deployment workflows using YAML configurations and synchronize with commit hooks from version control repos via API connections.

Each commit moves through structured pipeline stages. Automated test suites run before code is considered for staging, typically completing in under 90 seconds per cycle. If tests pass, deployment can proceed based on release rules or manual approval. The CI/CD system logs all events with timestamps and routes failed builds to rollback staging, with rollback triggers reverting code in under 30 seconds.

Approval workflows, canary rollouts, and gated deployment policies help control risk in production environments. Branch-based policies and commit hooks trigger secure release approvals and enforce order in the deployment chain. Hosting platforms treat these controls as core infrastructure, ensuring every production promotion follows validation checkpoints and logging procedures.

CI/CD pipelines automate deployment orchestration, reduce human error, and enable 10–50 safe code pushes per day without manual oversight. But once the code is approved, it still needs to perform safely. That’s where staging, testing, and rollback systems are needed.

Staging, Testing & Rollback Systems

Enterprise hosting platforms provision staging environments as fully synchronized replicas of the production stack. These environments match the live infrastructure down to the software versioning, asset configuration, and runtime services, forming isolated pre-deployment checkpoints.

A staging environment supports QA, UAT, and performance profiling in isolation from user traffic. Staging replicas undergo integrity checks to ensure parity in system libraries and runtime dependencies before test execution. Enterprise platforms maintain staging-labeled subdomains with masked production database clones refreshed every 24 hours, making the environment stable for hotfix validation, preview generation, and feedback review without exposing unverified updates to production.

Within these staging layers, enterprise-grade testing suites execute automated test cases before any code enters the release pipeline. Unit, integration, and regression tests are embedded into the CI workflows and can also trigger across canary or proxy environments post-deploy.

Typical test cycles span over 200 cases with an average runtime of 90 seconds per batch. These test suites evaluate runtime diffs and commit hashes to identify logic errors or performance regressions, with failed tests immediately registered as rollback triggers. Testing outcomes are recorded in deployment audit logs to create a traceable verification chain.

Rollback systems in enterprise hosting operate through layered snapshot architectures. Hosted backup snapshots are created at each deployment interval, storing the full environment state — files, databases, dependencies, and plugins. These immutable deploy layers support restoration either manually via the platform UI or CLI or automatically in response to failure hooks triggered by test gates.

Platforms also offer dependency-aware rollback that accounts for asset caching and schema shifts. Recovery is executed under tightly monitored SLA thresholds, often restoring environments in under 60 seconds of trigger, preserving uptime and minimizing business interruption. Hourly snapshots are retained for up to 30 days, enabling historical recovery across rolling deployment windows.

This layered approach — staging parity, automated test coverage, and precision rollback — transforms enterprise hosting from a scaling utility into a protective framework. It mitigates deployment failure conditions, including corrupted pushes and unverified feature merges, enforces compliance with rollback traceability, and secures business continuity during live development cycles.

Immutable release tags and audit-stamped recovery paths establish a deployment architecture where safety and uptime are engineered into the release flow.

Together, these systems reinforce that enterprise hosting infrastructure doesn’t merely support development — it safeguards every deployment that keeps the business operational.

Contact

Don't like forms?

Shoot us an email at [email protected]