Enterprise Application Modernization for Web Architecture Compatibility


In the context of web compatibility, enterprise application modernization is the process of upgrading legacy systems to align with the operational and architectural standards of modern web infrastructure.

Unlike prior generations of software, where enterprise applications functioned as isolated systems, contemporary digital environments require that these applications work in continuous sync with the enterprise website as a core integration surface.

The enterprise website actively consumes and presents application data, often structured through headless CMSs, API-driven frontends, and modular microservices that orchestrate backend capabilities through modern delivery protocols.

When enterprise applications fail to align with this site infrastructure, the integration collapses, breaking user-facing interfaces, fragmenting service logic, and disabling real-time content delivery.

To prevent these breakdowns, modernization initiatives must prioritize architectural compatibility: web delivery readiness, protocol standardization using modern delivery schemas such as RESTful APIs or GraphQL queries, and content synchronization across frontend/backend interfaces.

Legacy systems, often monolithic and state-dependent, obstruct these goals by lacking support for decoupled APIs, shared authentication layers, and cloud-ready deployments.

Application modernization, therefore, is an architectural requirement for digital alignment. It enables enterprise applications to expose data and functionality in ways that can be reliably consumed by the enterprise website, maintaining cohesion across the digital architecture.

To support the enterprise website, application modernization must focus not only on backend optimization but also on architectural cohesion across layers. This process begins with a focused effort to clarify what modernization means in technical terms and how it unfolds across systems.

What is Enterprise Application Modernization?

Enterprise application modernization is the adaptation of enterprise applications so that their architecture meets the delivery, integration, and compatibility requirements of modern web systems.

This modernization restructures how the application functions in relation to modern service layers, API-first ecosystems, and cloud infrastructure. The aim is to adapt enterprise applications to support current deployment models, data delivery protocols, and decoupled frontend architectures.

Such adaptation ensures the enterprise application operates seamlessly within API gateway ecosystems and aligns with cloud-native infrastructure patterns.

A legacy system, originally designed for monolithic environments and server-bound data access, lacks compatibility with today’s modular, API-driven web interfaces. These systems often cannot expose data through RESTful endpoints, do not support GraphQL queries, and are tightly coupled to proprietary workflows or outdated middleware.

Modernizing such enterprise applications involves evolving their backend logic and service contracts so that they can operate through an API gateway, contribute to frontend integration, and function reliably within headless CMS setups.

This realignment allows for real-time, scalable interactions between the application and the enterprise website’s components across content delivery systems, session management tools, and frontend rendering pipelines.

The result is an enterprise application that supports website performance and extensibility, rather than constraining it. Unlike replacement, which discards existing systems, modernization adapts and evolves enterprise applications, preserving their core capabilities while aligning them with modular, cloud-native environments for seamless integration into enterprise website ecosystems.

Why Do Enterprises Need Application Modernization?

Enterprises need application modernization because legacy enterprise applications, typically built on rigid monolithic architecture, were not designed to operate within today’s distributed, API-first web infrastructure.

Their synchronous data exchange models, tightly coupled frontend/backend integration, and lack of support for scalable service exposure impede their operational flexibility within modern infrastructure.

Modern enterprise websites, in contrast, require applications that can support dynamic content rendering, CMS decoupling, and scalable data delivery across multiple devices and platforms. This dependency on a decoupled architecture stems from the shift toward cloud environments and modular web systems, where CMS or content delivery systems need to fetch and present data asynchronously without relying on legacy session state constraints.

However, enterprise applications frequently fail to expose the APIs necessary for such decoupling, limiting how enterprise websites can retrieve and display real-time content. Without support for infrastructure compatibility or modular deployment, these applications constrain how effectively a cloud environment or CMS can integrate and function.

The lack of standardized integration layers and microservices compatibility further hinders the ability to connect frontend experiences with backend services.

A common issue is the absence of RESTful service layers or GraphQL endpoints, which prevents efficient communication between backend services and website components. Moreover, shared authentication systems are often unsupported, complicating identity management across application and web layers.

Enterprise application limitations — from a lack of session synchronization capabilities to service unavailability and deployment rigidity — expose the architectural gap between modern enterprise website requirements and legacy system capabilities. Bridging this gap requires a structured enterprise application modernization process designed for cloud-native, API-first, and decoupled content ecosystems.

Enterprise Application Modernization Process

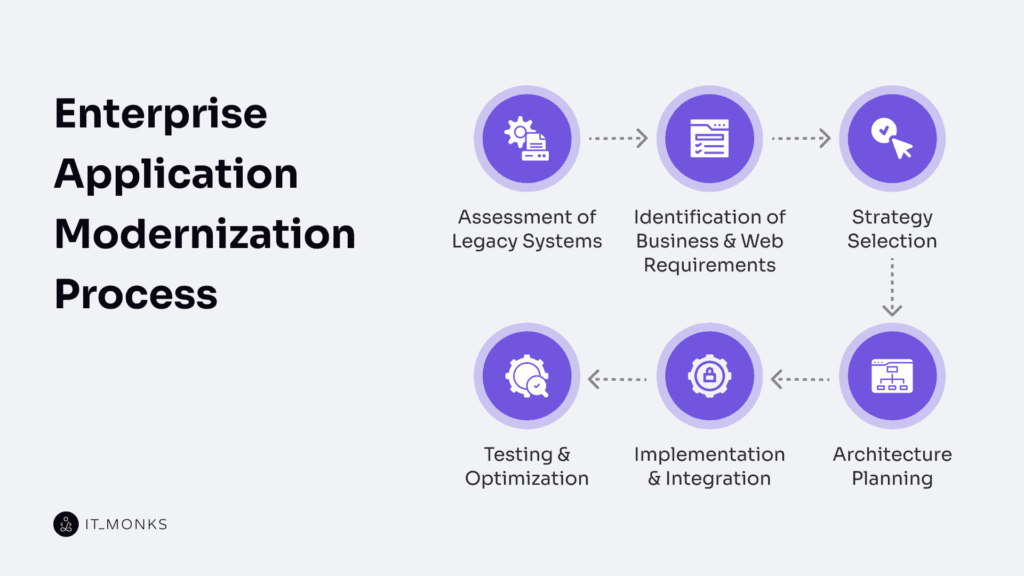

Enterprise application modernization unfolds as a structured pipeline — each phase reshaping the system for compatibility with web delivery environments. This is not a static rebuild but a staged transformation where each step supports integration, delivery, and content synchronization with the enterprise website.

Identification of business & web requirements defines operational expectations tied to web architecture. This phase maps delivery models, session handling rules, frontend data flows, and content integration endpoints required by the enterprise website. Missing this step risks misaligned scope in later architectural planning.

Assessment of legacy systems examines architectural rigidity — monoliths, missing APIs, tightly bound services, or isolated data layers that block external communication. Legacy architectures that lack service-layer modularity or API exposure prevent scalability and obstruct frontend synchronization with enterprise websites.

Strategy selection determines the transformation route — replatforming, refactoring, rearchitecting, or full replacement — based on system gaps. This decision point evaluates how well the legacy system aligns with modern deployment logic and integration pipelines. Selection hinges on the legacy app’s capacity to support API exposure, frontend data flow, and modular delivery aligned with CMS structures.

Architecture planning establishes the new system layout. It maps service layers, delivery endpoints, and content flows to web-facing frameworks. The resulting architecture blueprint ensures backend logic, service routing, and frontend synchronization operate cohesively within the CMS architecture.

Implementation & integration builds and connects the modernized modules. It executes service decompositions, inserts middleware layers, and enables interfaces for frontend synchronization and content delivery. If skipped or partial, the app remains structurally detached from the web layer and fails to execute intended deployment logic.

Testing and optimization verify system performance, security, and frontend compatibility. This includes testing data flows, session handling, and content alignment across the website and application. Unresolved issues here lead to runtime failure or desynchronized user experiences.

The sections below examine each phase in turn, followed by the strategies available for executing the transformation.

Identification of Business & Web Requirements

The requirement phase in enterprise application modernization begins by identifying compatibility targets across two critical vectors: internal business logic and the enterprise website’s operational framework. This step forms the compatibility baseline that will dictate the modernization path.

On the business side, requirements typically involve defining operational objectives that the application must continue to serve post-modernization. This includes maintaining or evolving data workflows, supporting compliance frameworks, and responding to new scale targets or deployment models.

These needs are shaped by the enterprise’s current application logic, data access layers, and operational structure. The system must remain logically coherent even as its technical foundations are updated.

Web-facing requirements demand a parallel focus but come from a different architectural axis. These define how the enterprise application must integrate with the website, not just function independently.

Here, the modernization process identifies structural dependencies such as CMS content sync behavior, frontend delivery formats, and session-based integration timing for real-time availability. Requirements at this level are often defined by how integration endpoints behave, how session handling is coordinated, and what constraints exist around exposing content or functionality through APIs.

Compatibility here means the application must expose the right data models and interface logic to match the site’s architecture and content system.

Without a tightly defined web delivery requirement scope, the modernization process risks producing a technically sound application that remains disconnected from core enterprise website functionality.

Assessment of Legacy Systems

Before an enterprise application can be modernized to support current web architecture standards, its legacy backend must be assessed for structural and integration limitations that block reliable web delivery and frontend integration. This diagnostic step is crucial to determine why the existing system cannot reliably operate as part of a website-compatible delivery model.

Legacy enterprise applications often restrict integration due to a monolithic architecture that tightly couples business logic and data access into a single deployable unit. This structure lacks the flexibility to expose discrete functionality needed by web frontends.

Without a service layer, the application cannot modularize its operations or externalize capabilities via APIs. Many legacy backends rely on synchronous logic and static delivery methods, preventing dynamic rendering or asynchronous data fetch patterns required by modern web experiences.

A frequent constraint is the complete absence of an API interface. Even when present, endpoints may use outdated protocols that are incompatible with CMS platforms and cannot relay structured data to frontend frameworks.

In some cases, delivery bottlenecks arise from content structured for static templates, with no support for headless delivery or real-time content fetch. Session handling is often isolated or browser-bound, which prevents centralized authentication and blocks cross-platform state synchronization.

This assessment step does not aim to catalog every outdated component, but to isolate those technical characteristics that prevent the legacy system from serving as a reliable backend for web consumption. Findings such as missing service abstraction, tight coupling, or protocol mismatches directly shape which modernization approaches — replatforming, refactoring, rearchitecting, or replacing — can be realistically pursued.

Strategy Selection

Strategy selection in enterprise application modernization depends on how well the existing system can align with the architectural needs of the enterprise website. The goal is to match backend capabilities with the demands of enterprise website architecture — not to retrofit everything, but to select a path that fits.

One major factor is the API surface — when the enterprise website requires dynamic frontend data sync, legacy systems must expose their backend logic through accessible endpoints.

CMS compatibility is another key factor — supporting decoupled rendering demands that backend logic accommodate headless content delivery principles, which many monolithic systems weren’t designed for.

Frontend session management is another constraint. Where websites depend on shared authentication or persistent state, the application must support session continuity across layers. Without it, frontend interaction models break, and the strategy needs to shift toward deeper changes.

Content delivery models impose further constraints — when websites require dynamic or distributed rendering, backend systems must support adaptable delivery pipelines. If the current structure can’t support that, the modernization approach has to open it up.

Based on these constraints and requirements, enterprises typically choose among four paths (replatforming, refactoring, rearchitecting, or full replacement), selecting the one that meets the required integration depth, delivery model, and frontend expectations.
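As a rough illustration of how such constraints might feed a decision, the sketch below maps a few assessment findings to one of the four paths. The `Assessment` fields and the order of the checks are assumptions made for illustration, not a rule stated in this article; a real selection would also weigh cost, risk, and timelines.

```python
from dataclasses import dataclass


@dataclass
class Assessment:
    """Hypothetical findings from the legacy assessment phase."""
    exposes_apis: bool              # usable REST/GraphQL endpoints already exist
    modular_code: bool              # service boundaries can be separated in place
    supports_shared_sessions: bool  # session/auth can be shared with the website
    structurally_salvageable: bool  # the core design can still be evolved


def select_strategy(a: Assessment) -> str:
    """Return one of the four paths; the ordering is an illustrative assumption."""
    if not a.structurally_salvageable:
        return "replacing"
    if a.exposes_apis and a.supports_shared_sessions:
        # Integration surface already fits; only the hosting layer needs to move.
        return "replatforming"
    if a.modular_code:
        # Internals can be reshaped to expose services without a redesign.
        return "refactoring"
    # Monolith with no usable seams: the structure itself has to change.
    return "rearchitecting"


print(select_strategy(Assessment(False, True, False, True)))
```

The point of the sketch is only that the decision follows from assessed constraints rather than preference.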

Architecture Planning

Architecture planning is the phase where the structural foundation of the modernized enterprise application is defined to serve the delivery and integration needs of the enterprise website. It translates the chosen modernization strategy into a system configuration built for frontend compatibility and backend operational support.

At this stage, the application architecture is structured to align with web-specific functional requirements. These include exposing service interfaces through structured API routing, connecting to headless CMS endpoints for content distribution, maintaining session state for user continuity, and supporting the data synchronization that underpins dynamic frontend rendering.

Every integration surface — whether for real-time content delivery or authenticated data exchange — relies on architectural decisions made here.

This phase involves mapping the application’s service orchestration logic, defining the communication protocols that will govern data flow to the web layer, and selecting a deployment configuration that supports scalable, cloud-aligned delivery.

This includes defining service modularity boundaries to ensure independent scaling and clean separation of integration responsibilities. The objective is to provide a backend that operates as a stable data and service layer for the enterprise website, with full compatibility across request flows and session-aware behaviors.

Through this alignment, the enterprise application is committed to a configuration that supports content synchronization and cross-platform data interaction. Any disconnection between app and web infrastructure, such as mismatched protocol handling or non-composable interfaces, typically results from poor planning at this level.

Implementation & Integration

Implementation is the actual transformation of the enterprise application. This step isn’t complete until the modernized application connects and delivers content directly to the enterprise website, supporting real-time data delivery, session handling, and frontend requests.

Integration drives this phase; the application must expose structured endpoints through REST APIs or GraphQL schemas, delivering data in formats ready for frontend use. These endpoints respond to frontend user actions, pull dynamic content, and support workflows tied to the enterprise website.
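One way to picture "formats ready for frontend use" is a thin adapter that turns a legacy record into a JSON payload. The following is a minimal sketch: the legacy column names (`CUST_ID`, `CUST_NM`, `LAST_UPD`), the timestamp layout, and the output shape are all hypothetical.

```python
import json
from datetime import datetime, timezone


def to_frontend_payload(legacy_row: dict) -> str:
    """Map a hypothetical legacy record to a JSON document for the website."""
    updated = datetime.strptime(legacy_row["LAST_UPD"], "%Y%m%d%H%M%S")
    payload = {
        "id": int(legacy_row["CUST_ID"]),
        "name": legacy_row["CUST_NM"].strip().title(),
        # ISO 8601 timestamps are easier for frontend code to parse;
        # treating the legacy value as UTC is an assumption.
        "updatedAt": updated.replace(tzinfo=timezone.utc).isoformat(),
    }
    return json.dumps(payload)


row = {"CUST_ID": "00042", "CUST_NM": "ACME CORP   ", "LAST_UPD": "20240131093000"}
print(to_frontend_payload(row))
```

Keeping this translation in one place means the website never needs to know how the legacy store names or pads its fields.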

Session continuity is critical, as the application must manage session state across web layers, syncing identity data through identity federation and maintaining active user interactions. Shared authentication logic — often tied to OAuth or SAML — ensures user access remains consistent across the enterprise website and the modernized application.

Content syncing with CMS platforms is also essential. Data structures must align with the website’s rendering logic, and updates should flow through predictable service layers that support content publishing.

The modernized application must now operate as a modular, always-available backend service. Its success is measured not by completion of internal tasks but by its ability to support the website without interruption or mismatch.

Testing and Optimization

The enterprise application, once implemented and integrated, must undergo targeted testing to confirm its delivery performance and integration quality within the architecture of the enterprise website. This step verifies the application’s ability to function as a dependable data backend for the web layer, rather than merely validating its general operability.

Testing focuses on the continuity of system integration, including API response consistency, session handoff across subdomains, and the synchronization of content between the application and the content delivery layer of the website.

Specific scenarios — such as real-time API requests from the content management system to the enterprise application, cross-domain session persistence, or fallback responses during service outages — are exercised to reveal any weak points in architectural interaction. API uptime, load behavior, and error response paths are all subject to scrutiny to guarantee the frontend receives accurate and timely data.
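API response consistency can be checked with a small contract test. The sketch below compares a decoded JSON response against expected field types; the `PRODUCT_CONTRACT` fields are hypothetical, and a real test suite would more likely use a schema language than hand-written type maps.

```python
def contract_violations(response: dict, contract: dict) -> list:
    """Compare a decoded JSON response against expected field types.

    `contract` maps field names to Python types; both are illustrative.
    """
    problems = []
    for field, expected_type in contract.items():
        if field not in response:
            problems.append(f"missing field: {field}")
        elif not isinstance(response[field], expected_type):
            actual = type(response[field]).__name__
            problems.append(f"wrong type for {field}: {actual}")
    return problems


PRODUCT_CONTRACT = {"id": int, "title": str, "inStock": bool}

# A conforming response yields no violations; a drifting one is reported.
print(contract_violations({"id": 1, "title": "Desk", "inStock": True}, PRODUCT_CONTRACT))
print(contract_violations({"id": "1", "title": "Desk"}, PRODUCT_CONTRACT))
```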

Optimization runs in parallel to this process. The enterprise application must be tuned to reduce latency and prevent payload bloat that could impair frontend sync speed. This involves trimming unnecessary response fields, managing cache directives for API endpoints, and preloading content where needed to accelerate user-perceived performance.
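Two of these tuning steps, trimming response fields and setting cache directives, can be sketched in a few lines. The record fields and the `max-age` value below are placeholders; `Cache-Control` itself is a standard HTTP header.

```python
import json


def build_response(record: dict, fields: set, max_age_seconds: int):
    """Return (headers, body) with only the requested fields and a cache directive."""
    trimmed = {k: v for k, v in record.items() if k in fields}
    headers = {
        "Content-Type": "application/json",
        # The max-age value is a placeholder, not a recommendation.
        "Cache-Control": f"public, max-age={max_age_seconds}",
    }
    # Compact separators avoid whitespace bytes in the payload.
    return headers, json.dumps(trimmed, separators=(",", ":"))


record = {"id": 1, "title": "Desk", "internalNotes": "reorder in Q3"}
headers, body = build_response(record, {"id", "title"}, 60)
print(headers["Cache-Control"], body)
```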

Architectural inefficiencies, such as blocking operations or serialized data transfers, are optimized or replaced to improve throughput and minimize delay.

Through this focused cycle of validation and optimization, the enterprise application is confirmed to not just function but to deliver a stable, responsive, and low-latency experience that aligns with the operational needs of the enterprise website.

Modernization Strategy

After assessing both the legacy system’s constraints and the enterprise website’s requirements, a modernization strategy must be selected to define how the application will adapt to modern web architecture.

The strategy must correspond to the integration demands, frontend/backend coupling, delivery model fit, and service layers that support a scalable, API-ready website infrastructure.

Four primary approaches structure this phase: replatforming, refactoring, rearchitecting, and replacing. Each represents a distinct approach for restructuring application internals, adapting system behavior, and exposing services in ways that enhance backend compatibility and support integration.

Replatforming involves migrating the application to a new runtime environment without altering its codebase. Refactoring focuses on reworking internal code components while preserving functionality. Rearchitecting alters the core system structure to accommodate broader capabilities like decoupled services and advanced API layers. Replacing discards the legacy application in favor of rebuilding a new one entirely from scratch.

Each strategy has a different level of friction when it comes to CMS support, API availability, authentication workflows, and architectural decoupling. The choice of approach will shape the compatibility between the modernized application and the web delivery model — whether the site uses static rendering, dynamic SSR, or client-heavy frontend architectures. It also affects CMS support models and the potential for cloud migration readiness.

The following sections describe these four approaches and evaluate how they affect the architectural compatibility, integration readiness, and web-facing behavior of the enterprise application. The objective is not just technical improvement, but a reconfiguration that aligns the application with the system architecture and service model of the enterprise website.

Replatforming

Replatforming means moving an application to a new runtime environment (cloud, containers, VMs) without modifying its internal architecture. This runtime migration focuses exclusively on changing the hosting layer while preserving the application’s core behavior and backend architecture.

The benefits of replatforming are centered on the infrastructure layer. It often improves deployment efficiency, resource scalability, and runtime stability. A cloud-ready hosting environment may offer elastic capacity, reduced hardware dependency, and streamlined maintenance cycles, especially for enterprises seeking operational cost reduction or improved failover management.

However, replatforming introduces a clear limitation in terms of enterprise website integration. Because the application code remains rigid, there is typically no added support for RESTful or GraphQL APIs, no transformation of backend data into decoupled frontend-consumable formats, and no meaningful exposure of content for CMS-level sync.

The application continues to operate as a monolithic service that fails to coordinate sessions or data flows with modern delivery layers. As a result, the delivery infrastructure of the enterprise website cannot coordinate with the replatformed application in any modular or session-aware manner.

Replatformed applications may support minimal compatibility needs — such as basic data output for static content or limited service availability — but fall short when the enterprise website requires dynamic content sourcing, real-time user session continuity, or modular component-based rendering.

In most enterprise scenarios, replatforming functions more as a temporary hosting fix, insufficient for structured modernization or meaningful enterprise website integration.

Refactoring

In the context of enterprise application modernization, refactoring is a strategy in which the internal code structure is improved without altering the application’s external behavior. What matters here is how that internal change affects integration with the enterprise website architecture.

The enterprise application remains functionally consistent while its internal code structure is improved, focusing on simplifying logic flow, modularizing components, and separating service boundaries.

This backend-focused refinement enhances maintainability and sets the foundation for integration with the enterprise website’s delivery layer and broader web architecture compatibility.

By restructuring internal logic, the application becomes more aligned with modular design principles. This improves backend optimization and increases the integration potential with the enterprise website. Service interfaces can be clarified or exposed more effectively, supporting API surface expansion and refining content delivery logic.

These internal improvements open up possibilities for connecting with frontend frameworks through API surface expansion or structured session management — key prerequisites for supporting headless CMS or dynamic web interfaces.

Still, refactoring doesn’t break away from the legacy architecture. Without rearchitecting, the system’s underlying design remains unchanged. Integration layer compatibility remains constrained by the original monolith or tight coupling, meaning service exposure may still require external orchestration or middleware layers.

When an enterprise application already has a degree of modularity, refactoring can be enough to support web integration. In such cases, internal improvements support interaction with the enterprise website’s delivery layer, even if broader architectural changes aren’t introduced.

Rearchitecting

Rearchitecting is the modernization strategy that involves altering the application’s architectural structure — not just internals or infrastructure — to enable full compatibility with enterprise website architecture. This is the first strategy in the list that can genuinely support decoupling, service exposure, and real-time integration with web systems. Instead of tweaking internal code or infrastructure layers, this structural redesign reshapes how the application is composed, breaking monoliths into modular services with defined service layers and clear data flows.

By adopting an API-first structure, the application exposes functionality through structured endpoints, including content delivery APIs, that serve frontend systems directly. RESTful APIs, GraphQL schemas, and other synchronous interfaces become core delivery mechanisms, not optional integration add-ons.

This shift supports real-time interactions and reduces reliance on middleware or workarounds. It’s critical for frontend frameworks that rely on dynamic, structured data feeds during runtime.

Rearchitecting also introduces session orchestration. Applications are structured to manage state and identity across systems, making shared authentication and user sessions consistent between the app and the website. When paired with a headless CMS, the application exposes content endpoints optimized for structured delivery — ready to supply the frontend with what it needs, when it needs it.

For enterprises moving toward reactive web frameworks and decoupled frontend layers, rearchitecting is often the only viable strategy. It aligns the backend’s architecture with the frontend’s delivery model, creating an integration boundary that supports deep compatibility across systems.

Replacing

Replacing is a modernization strategy where the legacy application is entirely rebuilt to meet modern requirements, particularly full integration with enterprise website architecture. It is the most comprehensive option, typically selected when the existing system is structurally incompatible with current delivery models, such as those relying on decoupled frontend architectures, API-based data exchange, or CMS integration.

Legacy systems that are closed, monolithic, rigid in logic, or architecturally outdated often lack the flexibility needed for frontend endpoint design, headless CMS compatibility, or microservice-level orchestration. In such cases, incremental updates or restructuring cannot bridge the foundational gap.

Replacing circumvents these limitations by discarding the existing structure and constructing a new application designed to match the enterprise website’s operational and delivery requirements from the start.

This rebuilt system can natively support CMS synchronization, structured API content exposure, real-time session logic, and modular backend services — all aligned with current web architecture needs. Compatibility with RESTful or GraphQL interfaces and seamless authentication integration across frontend components becomes part of the foundational design, rather than an afterthought.

Although the process demands significant investment in planning, development, and transition, it delivers an outcome where the enterprise application becomes natively aligned with the modern web stack, eliminating the architectural bottlenecks of legacy technology. This makes replacing the only viable path forward when scalability, modularity, and frontend integration are not optional features but required characteristics of the system’s core.

Application Synchronization with Website Architecture

Application synchronization with website architecture is the process of ensuring the application’s behavior, structure, and interfaces are architecturally compatible with the enterprise website.

It’s about aligning backend logic and frontend consumption — especially across content delivery, session continuity, authentication, and data exposure.

The application must deliver content payloads through APIs structured for the website’s rendering model. This includes managing delivery latency and content structure to ensure the enterprise website can consume data without additional reshaping. Content sourced from internal content delivery systems must remain consistent between the enterprise application and website without delay or mismatch.

Session coordination ensures that a user moving between the app and website retains identity and state. This requires shared session architecture and identity propagation, typically through tokens or session IDs, to maintain a continuous user experience.
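Token-based identity propagation can be illustrated with a signed token that both the application and the website verify using a shared secret. This is a simplified stand-in built on the standard library, not OAuth, SAML, or JWT; the secret, the claim names, and the function names are assumptions for illustration only.

```python
import base64
import hashlib
import hmac
import json
import time

SHARED_SECRET = b"demo-secret-change-me"  # placeholder; never hard-code real secrets


def _sign(body: str) -> str:
    return hmac.new(SHARED_SECRET, body.encode(), hashlib.sha256).hexdigest()


def issue_token(user_id: str, ttl_seconds: int = 900, now=None) -> str:
    """Create a signed token carrying the user id and an expiry time."""
    now = time.time() if now is None else now
    claims = json.dumps({"sub": user_id, "exp": now + ttl_seconds}).encode()
    body = base64.urlsafe_b64encode(claims).decode()
    return f"{body}.{_sign(body)}"


def verify_token(token: str, now=None):
    """Return the user id if the signature is valid and unexpired, else None."""
    now = time.time() if now is None else now
    body, _, sig = token.rpartition(".")
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(sig, _sign(body)):
        return None
    claims = json.loads(base64.urlsafe_b64decode(body.encode()))
    return claims["sub"] if claims["exp"] > now else None


token = issue_token("u-17", ttl_seconds=60, now=1000.0)
print(verify_token(token, now=1030.0))  # within the 60-second lifetime
print(verify_token(token, now=2000.0))  # expired
```

Because either system can verify the token without calling the other, the user keeps one identity across both without a second login.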

Authentication logic must also be federated. A single authentication handshake should cover both systems to avoid redundant logins or broken access logic. The application must expose authentication flows that align with frontend availability and runtime expectations.

Real-time data — such as inventory, dashboards, or user profiles — must be exposed through fast, predictable API delivery. The application needs to respond at the speed and structure the frontend expects. This includes error fallback and performance tuning to match frontend rendering cycles.
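Error fallback can be sketched as a wrapper that serves the last good value when the backend call fails. The class name, the `stale` flag, and the staleness window below are illustrative assumptions, not a prescribed pattern.

```python
import time


class FallbackCache:
    """Serve the last good value when the backend call fails (illustrative)."""

    def __init__(self, fetch, max_stale_seconds: float):
        self._fetch = fetch  # callable that hits the backend
        self._max_stale = max_stale_seconds
        self._value = None
        self._stored_at = None

    def get(self, now=None):
        now = time.monotonic() if now is None else now
        try:
            self._value = self._fetch()
            self._stored_at = now
            return {"data": self._value, "stale": False}
        except Exception:
            # Backend unavailable: use the cached copy if it is recent enough.
            fresh_enough = (
                self._stored_at is not None
                and now - self._stored_at <= self._max_stale
            )
            if fresh_enough:
                return {"data": self._value, "stale": True}
            raise
```

Marking the response as stale lets the frontend decide whether to render the older data or show a degraded state.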

Without synchronization, the website becomes disconnected from application logic, leading to outdated data, broken sessions, and login failures.

Modernized App Website Integration

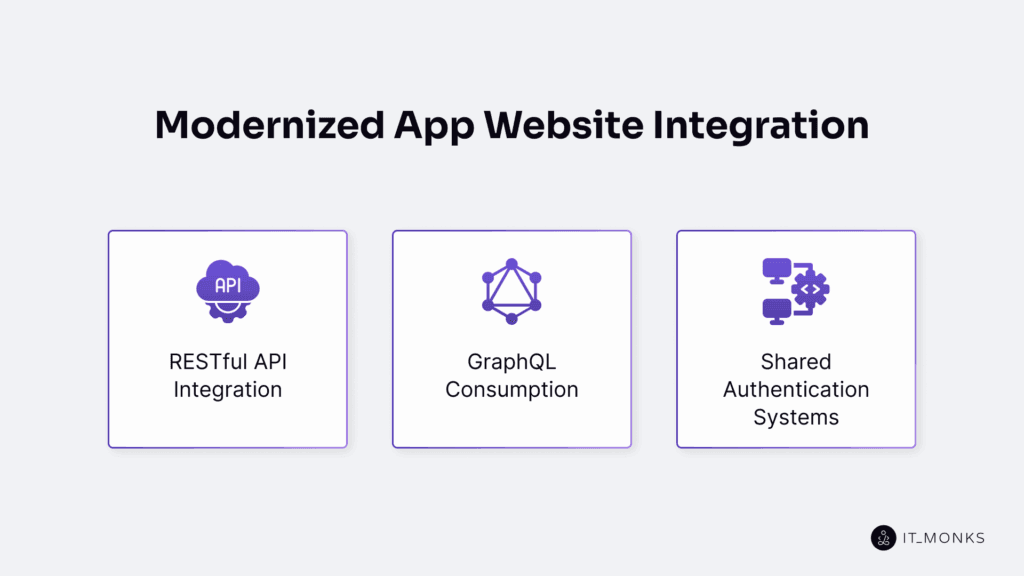

The integration between the modernized enterprise application and the enterprise website represents the execution layer of synchronization, translating previously aligned logic into active service delivery. At this stage, the modernized enterprise application must expose modular, independently consumable service interfaces that align with the architectural requirements of the enterprise website.

Modern web environments demand persistent access to data and services. To support this, the enterprise application exposes RESTful APIs for discrete operations and transactional logic, aligning with CRUD-based service consumption models. Alongside this, GraphQL schemas serve flexible data payloads to accommodate frontend-specific requirements in a single query execution.

Session continuity and authentication handshake mechanisms form the integration glue. These systems support shared access layers, propagating consistent user states across the application and website. The session layer becomes an operational bridge, and the authentication systems support role consistency and secure access propagation.

This integration strategy is modular by design. No component should depend on a monolithic structure or tightly bound logic blocks. Instead, integration flows through clearly defined endpoints and state synchronization layers, allowing systems to scale, update, and evolve independently.

RESTful API Integration

RESTful API integration is a method in which the backend of the modernized enterprise application exposes resource-oriented endpoints over HTTP, typically returning JSON.

Through this model, the enterprise website can consume and manipulate content and service data in a consistent way. The endpoints represent structured resources such as /users, /content, or /products, aligning with the web architecture’s modular requirements.
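To make the resource model concrete, here is a minimal sketch of how a website-side client might address those endpoints. The host `https://app.example.com`, the helper names, and the `page` query parameter are assumptions for illustration; only the resource paths come from the examples above.

```typescript
// Resource names taken from the examples in the text.
type Resource = "users" | "content" | "products";

// Builds a URL for a collection (/products) or a single item (/products/42).
function resourceUrl(
  base: string,
  resource: Resource,
  id?: string,
  query: Record<string, string> = {},
): string {
  const path =
    id === undefined ? `/${resource}` : `/${resource}/${encodeURIComponent(id)}`;
  const url = new URL(path, base);
  for (const key of Object.keys(query)) {
    url.searchParams.set(key, query[key]);
  }
  return url.toString();
}

// Fetches a resource as JSON. Each call is self-contained (stateless):
// nothing about earlier requests is assumed on the server side.
async function getResource<T>(url: string): Promise<T> {
  const response = await fetch(url, { headers: { Accept: "application/json" } });
  if (!response.ok) {
    throw new Error(`Request failed with status ${response.status}`);
  }
  return (await response.json()) as T;
}
```

For example, `resourceUrl("https://app.example.com", "products", "42")` yields `https://app.example.com/products/42`, which a product component could pass to `getResource`.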

For the enterprise website, REST serves as a predictable and stateless bridge to backend resources. Frontend systems — including CMS platforms, single-page applications, and component-based frameworks — use RESTful calls to access necessary business logic, content, or transactional services from the backend.

Because each request carries everything needed to process it, the backend does not have to hold persistent session state. Responses can therefore be cached and requests distributed across servers, which makes request handling more scalable.

The HTTP-based interaction model supports frontend rendering pipelines that operate asynchronously or based on reactive user actions. It also simplifies integration with frontend build systems and third-party platforms that expect clear, path-based content endpoints.

Through REST, each piece of website content or functionality — from a product list to a customer dashboard — can be independently retrieved and managed, aligning with the enterprise’s move toward component-driven rendering and modular frontend architecture.

However, REST’s rigidity can become a limitation in scenarios where frontend components have variable or fine-grained data needs. When the shape or structure of data differs across pages or user flows, a more flexible querying mechanism — like GraphQL — may better support dynamic data access without over-fetching or under-serving content.

Despite this, REST remains the baseline method for most enterprise application data delivery to the website, supporting structured content exposure and scalable integration between backend and frontend layers.

GraphQL Consumption

GraphQL is a query-based API model that allows the frontend (the enterprise website) to specify exactly what data it needs and receive all of it in one request. Instead of retrieving fixed data blocks from various REST endpoints, the enterprise website can issue a single GraphQL request to the enterprise application and receive a custom response matching the exact shape of its components.

This frontend-driven query model lets components define their own data shapes, reducing unnecessary data transfer. Such an approach serves dynamic rendering needs efficiently, particularly for modular and CMS-backed layouts that require tailored payloads.

Enterprise websites with content variations across regions, users, or product configurations often depend on micro frontends or headless CMS layers. In these contexts, GraphQL consumption aligns directly with frontend layout engines that prioritize performance and specificity.

For instance, a page component may need a combination of metadata, user profile details, and tagged article snippets. The enterprise application fulfills this need by resolving the nested GraphQL query in a single request, without over-fetching unrelated data.
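The page component described above could express its needs roughly as follows. This is a sketch only: the field names (`page`, `user`, `articles`, and their subfields) and the `buildPageRequest` helper are hypothetical, since the real schema is defined by the enterprise application.

```typescript
// Hypothetical query: one request covering metadata, profile, and articles.
const PAGE_QUERY = `
  query PageData($slug: String!, $userId: ID!, $tag: String!) {
    page(slug: $slug) { title metaDescription }
    user(id: $userId) { displayName region }
    articles(tag: $tag, first: 3) { title excerpt }
  }
`;

interface GraphQLRequest {
  query: string;
  variables: Record<string, unknown>;
}

// Builds the JSON body that is conventionally sent with an HTTP POST
// to a single GraphQL endpoint.
function buildPageRequest(slug: string, userId: string, tag: string): GraphQLRequest {
  return { query: PAGE_QUERY, variables: { slug, userId, tag } };
}
```

The component receives a response with the same three top-level keys it asked for, so no client-side stitching of separate REST responses is needed.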

Unlike REST, where the application exposes multiple fixed endpoints, a GraphQL-integrated enterprise application exposes a single, adaptable interface. The schema, defined and maintained on the application side, controls access and enforces content rules.

The schema supports CMS compatibility by matching structured backend content fields with frontend queries, minimizing payload size, and accelerating rendering speed across devices.

In supporting enterprise website operations, the application responds to GraphQL queries with a consistent structure, matching schema-defined types and respecting permission logic.
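One way to picture "respecting permission logic" is a field-level check applied while the response is shaped. The sketch below does not use a GraphQL library; the roles, the `UserRecord` fields, and the choice to return `null` for fields the caller may not read are all assumptions for illustration.

```typescript
type Role = "anonymous" | "customer" | "admin";

// Hypothetical backend record.
interface UserRecord {
  id: string;
  displayName: string;
  email: string;
  internalNotes: string;
}

type UserField = keyof UserRecord;

// Hypothetical rules: which roles may read each field.
const FIELD_ROLES: Record<UserField, Role[]> = {
  id: ["anonymous", "customer", "admin"],
  displayName: ["anonymous", "customer", "admin"],
  email: ["customer", "admin"],
  internalNotes: ["admin"],
};

// Returns only the requested fields, in a consistent shape:
// permitted fields carry their value, others are null.
function resolveUser(
  record: UserRecord,
  requested: UserField[],
  role: Role,
): Partial<Record<UserField, string | null>> {
  const shaped: Partial<Record<UserField, string | null>> = {};
  for (const field of requested) {
    const permitted = FIELD_ROLES[field].indexOf(role) >= 0;
    shaped[field] = permitted ? record[field] : null;
  }
  return shaped;
}
```

Keeping the response shape stable regardless of role means frontend components can rely on the same keys being present and only need to handle missing values.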

This backend discipline is critical: schema management, access control, and response shaping all add to system complexity. Still, for frontend developers, it reduces integration friction, unifies content sourcing, and contributes to a modular content delivery approach that scales across enterprise brands and business units.

Shared Authentication Systems

Shared authentication in modernized enterprise systems is the coordinated identity and session logic used by both the modernized app and the enterprise website.

Instead of managing separate authentication processes, the enterprise system distributes a common identity logic that synchronizes access control and user validation on both ends.

This unified approach supports SSO logic across the digital surface, allowing a single identity handshake to propagate across both the frontend and backend. Session tokens issued by the centralized authentication logic can be consumed and validated in both environments, maintaining a shared session state.

Such alignment extends to permission validation: access scope and role assignments are verified consistently, whether the request comes from the browser or an internal application module.

Shared authentication follows standard architectural patterns. The identity and session logic are typically built on token-driven mechanisms. Tokens can be transmitted via browser cookies or HTTP headers and verified against a centralized provider that implements OAuth 2.0 or OpenID Connect. The app and site both interface with that provider, sharing access to identity claims, token validity, and permission attributes.
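The sketch below shows the kind of check both the website and the application could run so that they reach the same access decision. It assumes the token's signature has already been verified by an established library against the identity provider's keys, which is deliberately left out here. The cookie name `session_token`, the helper names, and the simplified single-string `aud` claim are assumptions for this example.

```typescript
// Subset of claims commonly found in JWT-based access tokens.
interface TokenClaims {
  sub: string;   // subject (user identifier)
  aud: string;   // intended audience, simplified to one string here
  exp: number;   // expiry time in seconds since the Unix epoch
  scope: string; // space-delimited scopes, as in OAuth 2.0
}

const SESSION_COOKIE = "session_token"; // hypothetical cookie name

// Reads the raw token from an Authorization header or a cookie.
// Assumes header names are already lowercased.
function extractToken(headers: Record<string, string | undefined>): string | null {
  const auth = headers["authorization"];
  if (auth !== undefined && auth.indexOf("Bearer ") === 0) {
    return auth.slice("Bearer ".length).trim() || null;
  }
  const cookie = headers["cookie"];
  if (cookie !== undefined) {
    for (const part of cookie.split(";")) {
      const pair = part.trim();
      if (pair.indexOf(SESSION_COOKIE + "=") === 0) {
        return pair.slice(SESSION_COOKIE.length + 1) || null;
      }
    }
  }
  return null;
}

type AccessDecision = { allowed: true } | { allowed: false; reason: string };

// Applies the same expiry, audience, and scope rules on either side.
function checkAccess(
  claims: TokenClaims,
  expectedAudience: string,
  requiredScope: string,
  nowSeconds: number,
): AccessDecision {
  if (claims.exp <= nowSeconds) return { allowed: false, reason: "token expired" };
  if (claims.aud !== expectedAudience) return { allowed: false, reason: "wrong audience" };
  if (claims.scope.split(" ").indexOf(requiredScope) < 0) {
    return { allowed: false, reason: "missing scope" };
  }
  return { allowed: true };
}
```

Because the rules live in one shared function rather than in two separate login flows, a session that is valid for the application is valid for the website under the same conditions.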

When the enterprise application lacks this shared model, identity boundaries fracture. A user’s session might persist in the app but expire on the site, or role definitions may differ between interfaces, breaking permission parity. Duplicate logins, fragmented identity state, and misaligned access scopes are the typical outcomes when authentication logic isn’t federated.

For an enterprise application to integrate with a modern web architecture, it must expose authentication logic as part of its interface, not just serve business data. Identity propagation and token-based session control are not optional features but structural requirements for continuity between backend and website operations.

How Does App Modernization Improve Web Architecture?

The enterprise website functions through the enterprise application: identity, content, and logic all originate from it. When the enterprise application is modernized, the enterprise website architecture becomes structurally capable of scaling, adapting, and delivering faster.

Modular backend services replace rigid processing flows, distributing tasks across lightweight components. This shift reduces latency and increases throughput, especially under traffic pressure. Websites backed by scalable services handle more requests without degrading performance.

API-driven delivery improves speed and responsiveness. RESTful and GraphQL interfaces deliver structured data rather than fully rendered pages, and GraphQL queries return only the fields a component requests, allowing the frontend to render pages faster. When combined with headless CMS support, content becomes dynamic and manageable without deploying full-page updates.

Security improves through shared authentication systems. Instead of handling identity in separate layers, the website uses unified credentials from the application, reducing complexity and aligning with compliance structures.

Service-based integration introduces clear boundaries between the frontend and the backend. Interfaces are versioned and stable, so frontend changes no longer risk backend disruptions.

Modernization transforms the application into a scalable backbone that supports the continuous evolution of the entire website architecture — structurally, functionally, and integratively.
