How to Choose Scalable WordPress Hosting?

Scalable hosting is the operational backbone that ensures enterprise websites can adapt, persist, and perform under shifting digital pressures. Scalable hosting supports high-concurrency processing, dynamic workload balancing, and system-wide elasticity, eliminating the need for manual intervention or delayed provisioning cycles. It operates as a resource-adaptive infrastructure that adjusts compute, memory, and I/O channels in response to live demand, serving as the performance substrate beneath development workflows.

For enterprise websites (especially those deployed via CMS frameworks like WordPress), scalability must align with organizational structure and engineering intent. The hosting layer is not isolated; it integrates into CI/CD pipelines, version-controlled deployments, multiregional CDN meshes, and ERP- or CRM-tied operations. The expectation is not just uptime. It’s architectural resilience and operational predictability.

Selecting scalable WordPress hosting means filtering out environments that treat elasticity as a feature toggle rather than an infrastructure principle. It requires aligning hosting capabilities with enterprise-grade development cycles, including modular deployments, container orchestration, horizontal scaling with auto-healing, and observability layers that apply the same logging used in development to production and its mirrors. Compatibility with multisite WordPress deployments, REST API workflows, and custom hook performance tuning becomes a table-stakes requirement.

Constraints emerge from both sides: the technical (runtime adaptability, edge delivery, isolated compute) and the organizational (role-based access, vendor SLA binding, jurisdictional data compliance). Scalable hosting for enterprise websites is therefore selected not on benchmarks alone but on conformance: whether it can actively enable enterprise velocity without trading off security posture or system integrity.

When choosing the right hosting model, enterprise teams are not comparing web hosts. They’re aligning their infrastructure strategy with business continuity thresholds. Scalable hosting becomes a strategic input, not just a supporting layer. Whether it’s a high-availability Kubernetes mesh or a finely tuned managed WordPress stack, the decision path starts with what the infrastructure must enable, not what it claims to support.

What is Scalable WordPress Hosting?

Scalable hosting is a server-side architecture that dynamically provisions infrastructure resources in direct response to workload variations. In the context of WordPress, scalability signifies the platform’s ability to maintain performance and reliability during spikes in concurrent PHP executions, high-volume database interactions, and dynamic content rendering operations across multiple user sessions.

Scalable WordPress hosting addresses CMS-specific runtime pressures, including simultaneous plugin execution, AJAX-intensive dashboard interactions, and complex theme hierarchies. It accommodates these factors by orchestrating PHP worker pools, isolating database query lanes, and deploying adaptive memory allocations that align with WordPress’s process-heavy request structure.

For enterprise-grade deployments, scalability is reframed. Traffic is distributed. Workflows are departmentalized across content teams, DevOps, and legal review gates. Infrastructure must support multi-zone redundancy, regional replication of media assets, and integration concurrency with systems such as ERPs, SSO layers, and external APIs.

In this tier, scalable WordPress hosting intersects with enterprise web hosting, where request tolerance exceeds 10,000 concurrent dynamic calls, and deployment orchestration is pipelined through GitOps or CI-managed release channels.

Scalability becomes functional only when the hosting layer sustains WordPress’s full operational graph without bottlenecks, delivering consistent wp-admin response times, load-balanced REST API endpoints, and fault-isolated asset delivery.

Hosting Type Compatibility for WordPress Enterprise Sites

Scalable hosting models suitable for enterprise WordPress deployments are not determined by pricing tiers or feature sheets. They’re defined by their ability to meet systemic demands of concurrency, modular scalability, and CMS-specific execution behavior.

Enterprise compatibility depends on infrastructure types that support resource isolation, dynamic workload distribution, and version-controlled deployment pipelines, all while maintaining operational continuity under load.

Only hosting environments capable of aligning with the full WordPress runtime — from PHP worker management to database throughput under plugin-heavy templates — qualify as enterprise-ready. This excludes any static or semi-isolated tier that lacks orchestration, observability, and infrastructure extensibility.

In a WordPress-based enterprise context, hosting types are not interchangeable. Compatibility refers to the ability to handle thousands of simultaneous dynamic requests while providing granular control over runtime parameters. It means supporting containerized deployment models, elastic autoscaling, and performance monitoring that maps directly to CMS actions—cron triggers, REST API calls, and theme rendering paths.

Scalable hosting, when filtered through the operational lens of enterprise web architecture, must operate as a programmable substrate. It should integrate with CI/CD, distribute across zones, tolerate regional failovers, and support parallelized staging environments.

Hosting types that fail to provide horizontal elasticity, fine-grained provisioning, and CMS-aware resource allocation are functionally excluded from this category. Only those that enable infrastructure-driven WordPress performance at scale meet the bar for enterprise use.

Managed WordPress Hosting

Managed WordPress hosting qualifies as a scalable hosting architecture by delivering infrastructure-managed environments that automatically provision, isolate, and tune resources for CMS-specific workloads.

Instead of offering raw access to infrastructure layers, this model handles PHP worker scaling, page caching integration, and database performance allocation through system-controlled triggers, eliminating the need for engineering teams to manually script resource shifts.

In enterprise deployments, the predictability of managed resource orchestration is critical. These environments support multi-environment pipelines with automated deployment hooks, enabling version-controlled staging and production transitions. By offloading infrastructure tuning, they allow enterprise teams to focus on development workflows rather than infrastructure hygiene.

More importantly, managed hosting for enterprise scenarios delivers CMS-native scalability, handling concurrent requests across dynamic themes, plugin-heavy templates, and high-frequency content operations. It provisions isolated execution pools to avoid cross-site performance bleed, supports CI-based publishing routines, and guarantees runtime compatibility with PHP hooks and persistent caching logic.

By auto-scaling database query pools, object cache layers, and request threads based on runtime behavior, managed WordPress hosting sustains high throughput under load while maintaining deterministic response times.

Cloud Hosting

Cloud hosting qualifies as a scalable hosting model by providing elastic infrastructure that provisions compute, memory, and IOPS channels in direct correlation to traffic fluctuations and workload volume. In WordPress-based enterprise architectures, this flexibility allows cloud-hosted environments to expand PHP execution layers, clone database instances, and extend object caching nodes based on live system metrics.

This hosting model supports high-concurrency traffic and horizontal scaling of CMS workloads via automated load distribution and instance orchestration. With multi-zone deployment availability and service abstraction, cloud environments ensure continuity under failover conditions while allowing resource governance at the vCPU and container level. Auto-scaling policies bound to metrics, such as PHP worker queue depth or HTTP request rate, make cloud platforms well suited to complex CMS execution paths.

In enterprise DevOps workflows, cloud hosting for enterprise deployments integrates with GitOps-based rollout models, IaC provisioning templates, and full-stack observability pipelines. Ephemeral staging environments, CI-linked promotion gates, and region-specific media distribution are core capabilities.

Cloud hosting supports parallel build branches, dynamic scaling of page rendering pipelines, and fault-isolated service execution across distributed compute nodes. It becomes enterprise-viable when every component of the WordPress stack — PHP, MySQL, Redis, NGINX — can scale independently, respond to orchestration signals, and recover without human intervention.

Kubernetes-Based Container Hosting

Kubernetes-based container hosting delivers scalability through automated orchestration of containerized WordPress services across distributed compute nodes. By abstracting each runtime component (web server, database, cache, background workers) into isolated, independently scalable containers, it enables fine-grained resource control and deployment agility under real enterprise conditions.

Container hosting provisions pods dynamically in response to load metrics such as CPU usage, HTTP request rate, and memory thresholds. This ensures that WordPress instances can scale horizontally in real time, maintaining consistent performance across traffic surges without overprovisioning idle capacity.

Each container can be tuned with dedicated resource limits and with liveness and readiness probes, supporting uninterrupted delivery pipelines and failover-ready rollouts.

Within enterprise development workflows, Kubernetes aligns with GitOps through tools like Argo CD, Helm-based rollout strategies, and infrastructure-as-code provisioning through tools like Terraform. It supports blue/green and canary deployment models, staging isolation via namespaces, and secure injection of environment-bound secrets, which are key elements in hardened CMS deployment lifecycles.

In WordPress contexts where multi-regional failover, plugin orchestration, and theme-level customization are required at scale, container orchestration solves the complexity gap. It transforms WordPress from a monolithic runtime into a modular, distributed system.

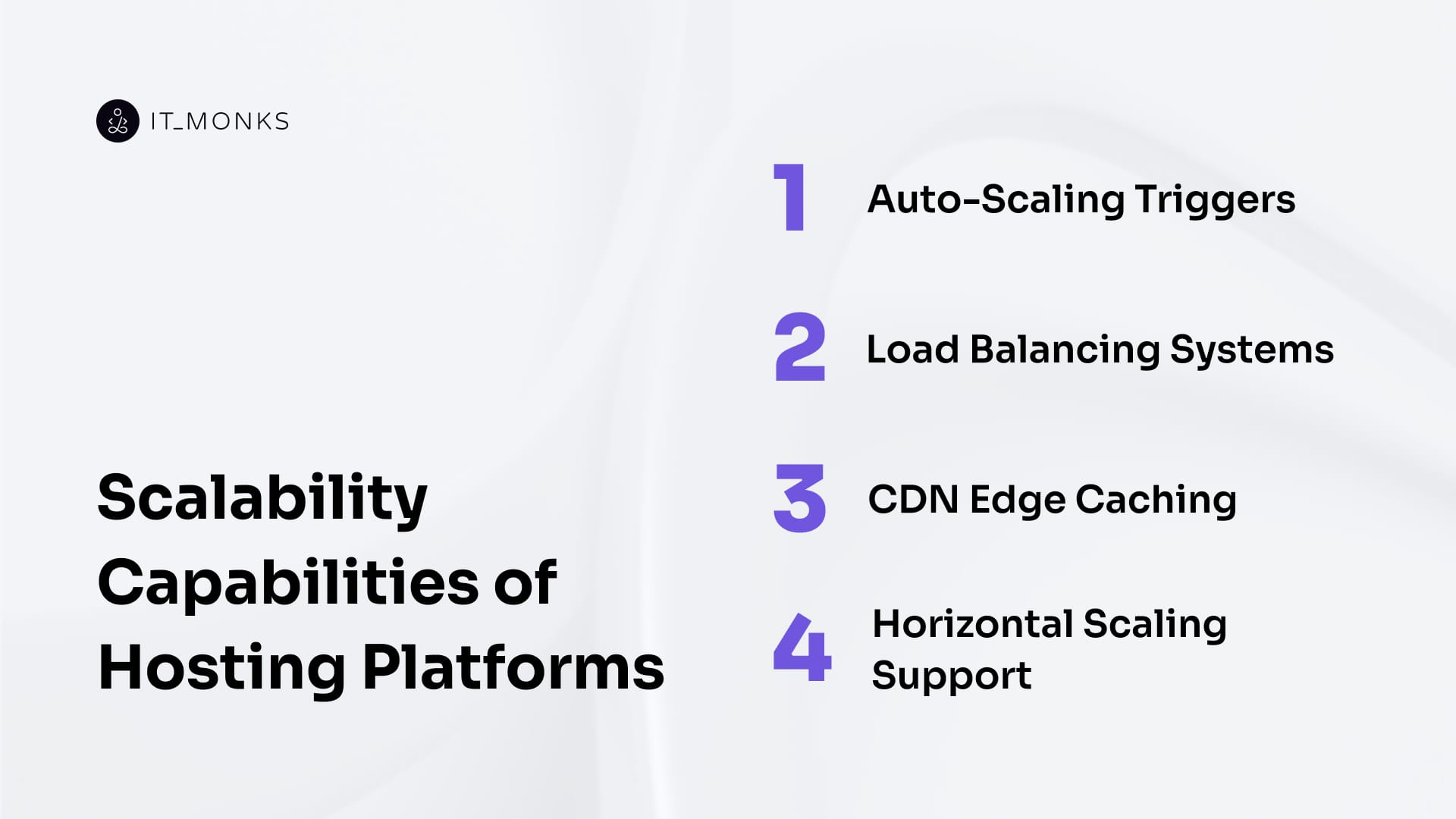

Scalability Capabilities of Hosting Platforms

Scalable hosting is a function of specific infrastructure mechanisms that adapt, distribute, and expand system resources in direct response to load and execution demand. For enterprise-grade WordPress websites, these mechanisms are fundamental requirements that prevent latency degradation, system bottlenecks, and deployment inflexibility under traffic stress.

A hosting platform qualifies as scalable only when it executes core behaviors that enable real-time infrastructure adaptability. These include triggering automated resource provisioning during concurrency spikes, distributing HTTP and HTTPS requests across load-balanced frontend nodes, and offloading static and dynamic content delivery to global edge networks.

Each mechanism must actively engage with live runtime conditions — CPU thresholds, memory exhaustion, request concurrency — to ensure consistent CMS performance under enterprise-level load.

In WordPress environments, scalable behavior is tightly bound to the way the CMS operates, including plugin-driven query density, session-based user logic, scheduled background jobs, and frequent interaction with the admin panel. These behaviors create systemic stress that scalable platforms must mitigate through automated, orchestrated responses. Platforms incapable of responding to these dynamics at scale are fundamentally unfit for enterprise hosting.

Auto-Scaling Triggers

Auto-scaling triggers are the execution logic behind real-time infrastructure elasticity in scalable hosting. In enterprise WordPress environments, these triggers respond to live telemetry signals (such as CPU saturation, memory thresholds, or backlog in request queues) by provisioning new compute containers or replicas to preserve performance continuity.

This behavior is tightly mapped to WordPress runtime patterns. When PHP worker pools approach concurrency caps, often due to dynamic page loads from logged-in users or high-frequency admin interactions, the system initiates horizontal scaling. Similarly, queue latency exceeding 100ms for scheduled tasks, such as WP-Cron jobs or webhooks, can trigger container replication to maintain backend responsiveness.

These triggers are often configured via metric-based policies exposed through orchestration tools (e.g., Kubernetes Horizontal Pod Autoscaler, HPA) or IaC-driven dashboards, allowing operations teams to predefine actions like “scale out at 75% CPU over 180 seconds” or “add a replica if 300+ concurrent requests exceed 250ms TTFB.” In high-concurrency scenarios, predictive scale-out can be integrated with CI/CD to deploy new runtime nodes preemptively before peak traffic events occur.
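
A policy such as “scale out at 75% CPU over 180 seconds” can be sketched in a few lines. The sketch below follows the replica formula described in the Kubernetes Horizontal Pod Autoscaler documentation (current replicas multiplied by the ratio of observed to target metric, rounded up, with a default tolerance of 0.1). The function names, the 2 and 50 replica bounds, and the sampling window are illustrative assumptions, not settings of any particular platform.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 2,
                     max_replicas: int = 50, tolerance: float = 0.1) -> int:
    """Replica count derived from the ratio of observed to target metric."""
    ratio = current_metric / target_metric
    # Inside the tolerance band, keep the current size to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (assumed values).
    return max(min_replicas, min(max_replicas, wanted))


def sustained_breach(samples: list[float], threshold: float) -> bool:
    """True only if every sample in the window exceeds the threshold."""
    return bool(samples) and all(sample > threshold for sample in samples)


# Hypothetical window: twelve CPU readings taken 15 seconds apart (180 s).
cpu_window = [81.0, 84.5, 90.2, 88.0, 79.9, 92.3,
              85.1, 87.7, 90.0, 91.4, 86.2, 90.0]
if sustained_breach(cpu_window, threshold=75.0):
    print(desired_replicas(current_replicas=4,
                           current_metric=cpu_window[-1],
                           target_metric=75.0))
```

Requiring the whole window to breach the threshold is one simple way to express “over 180 seconds”; averaging the window is an equally plausible reading.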

By decoupling resource allocation from manual oversight, auto-scaling triggers ensure that scalable hosting adapts as an automated part of the enterprise WordPress application flow rather than through delayed human response. They form the foundation for concurrency tolerance, runtime stability, and cost-controlled elasticity in infrastructure-aware hosting environments.

Load Balancing Systems

Load balancing systems serve as the orchestration layer, dynamically distributing inbound HTTP/S traffic across replicated WordPress service instances. In scalable hosting models, this mechanism ensures high-availability execution by routing requests to healthy backend nodes, mitigating overload, and enabling fault isolation without performance degradation.

For enterprise WordPress sites, load balancers do far more than distribute volume. They separate traffic types, maintain sticky sessions for logged-in users, and distinguish between static and dynamic content streams. They absorb request floods during marketing campaigns, separate wp-admin traffic from anonymous browsing, and balance plugin-induced execution load across PHP worker clusters.

Most platforms integrate enterprise load balancer architecture that operates at Layer 7 (HTTP-aware), enabling content-type–based routing and geo-distribution logic. This includes session affinity, cache-aware routing, and blue/green deployment traffic splitting. Load balancing also integrates with CI/CD flows, draining specific nodes during rollout to prevent interruptions to active requests.

With health checks tied to response latency and service readiness probes, load balancers maintain high throughput across global delivery zones, offering sub-150ms TTFB consistency at the 95th percentile and <2s failover response on node loss.
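
The routing behavior described above (health-aware distribution plus sticky sessions for logged-in users) can be modeled as follows. This is a minimal sketch: the `Balancer` class, the backend names, and the use of a hashed session ID for affinity are assumptions for illustration, not the design of any specific load balancer.

```python
import hashlib


class Balancer:
    """Round-robin routing over healthy backends with optional session affinity."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._next = 0

    def mark(self, backend, healthy):
        """Record the result of a health check for one backend."""
        if healthy:
            self.healthy.add(backend)
        else:
            self.healthy.discard(backend)

    def route(self, session_id=None):
        pool = [b for b in self.backends if b in self.healthy]
        if not pool:
            raise RuntimeError("no healthy backends")
        if session_id is not None:
            # Sticky session: the same session ID maps to the same backend
            # for as long as the healthy pool is unchanged.
            digest = hashlib.sha256(session_id.encode()).digest()
            return pool[int.from_bytes(digest[:8], "big") % len(pool)]
        backend = pool[self._next % len(pool)]
        self._next += 1
        return backend


lb = Balancer(["web-1", "web-2", "web-3"])
lb.mark("web-2", healthy=False)          # failed health check
print(lb.route(), lb.route("user-42"))   # never returns web-2
```

Plain modulo hashing remaps many sessions whenever the pool changes; consistent hashing or cookie-based affinity are common ways to reduce that churn.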

By shielding backend WordPress infrastructure from direct volume spikes and isolating performance anomalies, load balancing systems are fundamental to sustaining dynamic enterprise workloads at scale.

CDN Edge Caching

CDN edge caching is an infrastructure-level decoupling layer that offloads static and cacheable WordPress content to geographically distributed edge nodes, thereby reducing origin load, accelerating delivery, and enabling global concurrency at scale. It is a mandatory component for achieving scalable hosting in an enterprise WordPress context.

By serving full-page caches, static assets, and even semi-dynamic content, such as blog index pages, directly from edge locations, this mechanism prevents backend PHP execution for the vast majority of anonymous user requests. During peak events, edge nodes can absorb 85% or more of total traffic, including repeat page loads and bot crawls, without waking backend services.

CDN caching integrates tightly with publishing workflows, utilizing webhooks or CI/CD hooks to purge caches upon deployment. It supports fragment caching patterns — injecting live data blocks into pre-rendered shells — and applies smart rules to bypass caching for authenticated sessions or form submissions.
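
The bypass rules mentioned above can be expressed as a small decision function. The cookie prefixes below are the ones WordPress sets for logged-in users, password-protected posts, and commenters; the excluded paths and the function name are assumptions chosen for illustration, and a real CDN would express the same logic in its own rule language.

```python
# Cookie name prefixes that indicate a personalized response.
BYPASS_COOKIE_PREFIXES = (
    "wordpress_logged_in_",
    "wp-postpass_",
    "comment_author_",
)

# Paths assumed never to be served from the edge in this sketch.
UNCACHED_PATH_PREFIXES = ("/wp-admin", "/wp-login.php", "/wp-json")


def is_edge_cacheable(method: str, path: str, cookie_names: list[str]) -> bool:
    """Decide whether a request may be answered from the edge cache."""
    if method not in ("GET", "HEAD"):
        return False  # form submissions and other writes go to the origin
    if path.startswith(UNCACHED_PATH_PREFIXES):
        return False
    return not any(name.startswith(BYPASS_COOKIE_PREFIXES)
                   for name in cookie_names)


print(is_edge_cacheable("GET", "/blog/", []))
print(is_edge_cacheable("GET", "/blog/", ["wordpress_logged_in_abc123"]))
```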

For multi-region delivery, CDNs also implement geo-routing, directing users to the closest edge node and reducing TTFB by up to 150ms for international traffic.

Horizontal Scaling Support

Horizontal scaling support is the core mechanism by which scalable hosting platforms replicate compute environments, spawning identical, stateless execution containers to handle increased traffic without introducing bottlenecks.

This mechanism works by replicating services, such as PHP-FPM, NGINX, and background job workers, across node pools or containers, all of which are coordinated through orchestration layers like Kubernetes. Requests are routed by a load balancer to available replicas, with traffic distributed based on health, region, or request type. Each node remains stateless, with session data externalized to shared stores like Redis and file assets detached via object storage or NFS.

WordPress, when scaled horizontally, offloads read operations to DB replicas, caches object-level calls, and synchronizes file changes via CI/CD. This structure enables deployments to scale from 2 to 50+ PHP containers dynamically, handling upwards of 10,000 concurrent users while maintaining consistent page rendering times under 200 milliseconds.
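
To make the container range concrete, a rough capacity estimate can be derived from Little's law (requests in flight equal arrival rate multiplied by service time). Every input below is an assumption for illustration: the interval between requests per user, the cache hit ratio, the worker count per container, and the 70% utilization target are not measurements from any platform.

```python
import math


def php_containers_needed(concurrent_users: int,
                          seconds_between_requests: float,
                          cache_hit_ratio: float,
                          render_seconds: float,
                          workers_per_container: int,
                          target_utilization: float = 0.7) -> int:
    """Estimate PHP containers needed to serve uncached traffic."""
    requests_per_second = concurrent_users / seconds_between_requests
    # Only cache misses reach PHP.
    origin_rps = requests_per_second * (1 - cache_hit_ratio)
    # Little's law: busy workers = arrival rate x time per request.
    busy_workers = origin_rps * render_seconds
    usable_workers = workers_per_container * target_utilization
    return math.ceil(busy_workers / usable_workers)


# 10,000 users, one request every 10 s each, 85% served from cache,
# 200 ms render time, 8 PHP workers per container.
print(php_containers_needed(10_000, 10, 0.85, 0.2, 8))
```

With these inputs the estimate lands in the single digits; removing the cache or shortening the interval between requests moves it quickly toward the upper end of the range.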

For enterprise DevOps workflows, this mechanism integrates with autoscaling groups, supports rolling updates, and enables zero-downtime deployments through service registries and probe-based health gating.

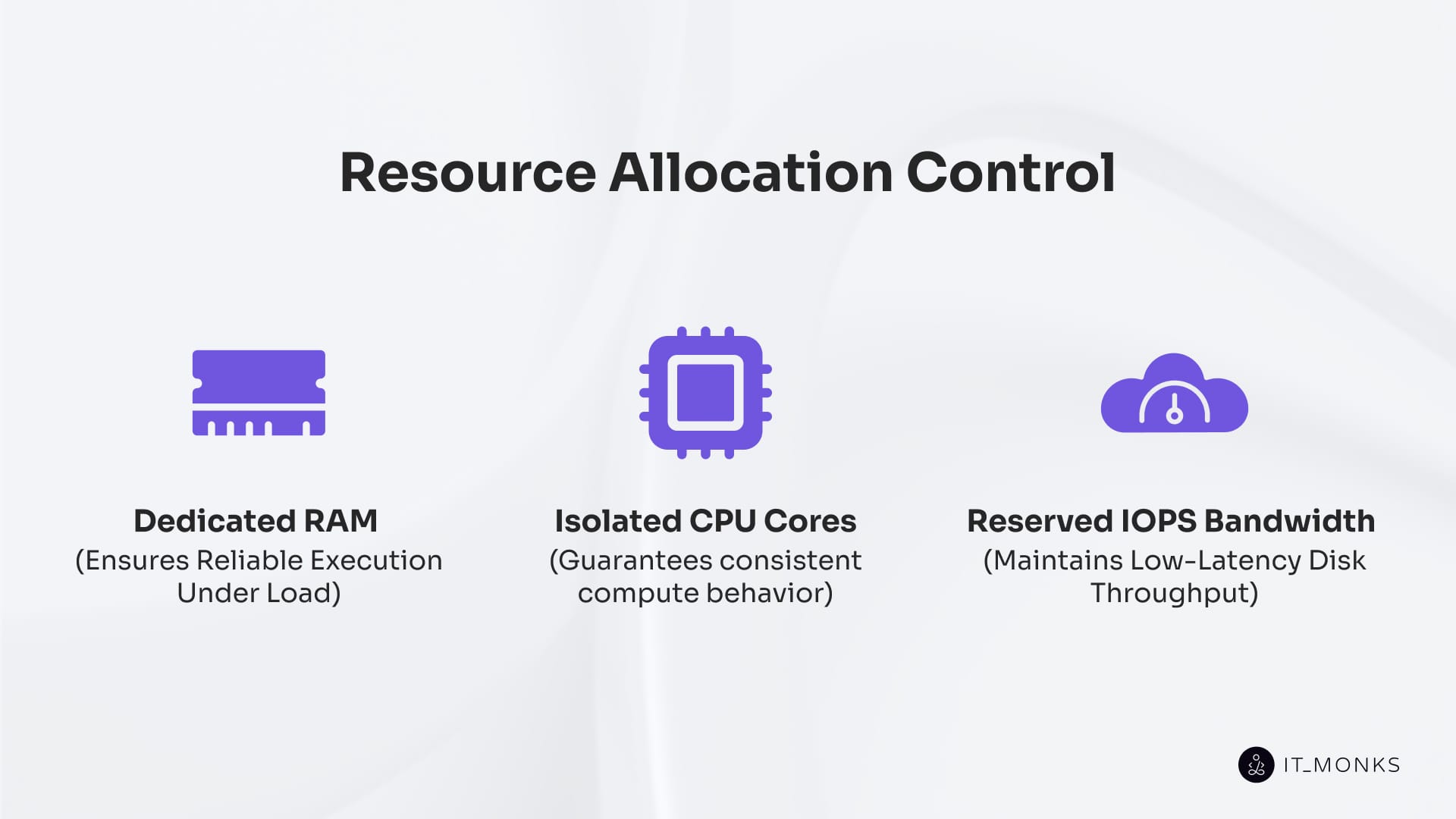

Resource Allocation Control

Scalable hosting doesn’t exist without direct control over compute resources. Elasticity, redundancy, and scaling logic are all irrelevant if the execution environment suffers from noisy-neighbor effects, shared CPU bottlenecks, or memory starvation. For WordPress to function predictably under enterprise load, the platform must enforce deterministic access to RAM, CPU, and I/O bandwidth at runtime, per instance, and under stress.

In WordPress, each dynamic request occupies a PHP worker, and plugin calls can stack memory demand unpredictably. When background jobs, admin sessions, and cache rebuilds compete for compute time, uncontrolled resource environments lead directly to performance collapse. For enterprise deployments, deterministic resource control is the operational floor.

The following mechanisms — dedicated RAM, isolated CPU cores, and reserved IOPS bandwidth — determine whether a platform can deliver consistent throughput at scale or whether its elasticity is merely shared capacity rebranded in marketing language.

Dedicated RAM Allocation

Dedicated RAM allocation determines whether a WordPress application can execute reliably under load or collapse due to memory contention. In scalable hosting architectures, memory must be explicitly reserved per container, VM, or node, ensuring that concurrent PHP workers, object caching systems, and plugin chains can operate without cross-tenant interference or unpredictable memory reclaim events.

WordPress doesn’t gracefully degrade under memory pressure; it fails. Each PHP request is memory-bound, and even with horizontal scaling in place, replicas mean nothing if they’re starved for RAM. Especially in environments with plugin-heavy stacks, dynamic admin panels, and background job queues, guaranteed memory blocks are what sustain runtime integrity.

Scalable platforms enforce container-level memory limits and requests, define OOM prevention policies, and allow proactive scaling based on memory exhaustion trends. Object caches are retained across replicas only when memory persistence is guaranteed. Configuration via IaC and observability through telemetry (e.g., Prometheus) are non-negotiable for enterprise systems.

Whether it’s a 2 GiB memory limit per container or a 500 MiB object cache replicated across 12 nodes, the critical factor is not the number. It’s the non-shared, enforced, and monitored nature of memory access. Without RAM isolation, there is no scalable WordPress.
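
A memory limit only helps if the PHP worker count is sized to fit inside it. The following sketch applies a common rule of thumb for PHP-FPM's `pm.max_children` setting: usable memory divided by average worker footprint. The 256 MiB reserve and the 64 MiB per-worker figure are assumed values; real numbers should come from measuring the workload.

```python
def max_php_workers(container_limit_mib: int, reserved_mib: int,
                    avg_worker_mib: int) -> int:
    """Largest worker count that fits under the container memory limit."""
    usable = container_limit_mib - reserved_mib
    if usable <= 0 or avg_worker_mib <= 0:
        raise ValueError("memory limit must exceed the reserve, "
                         "and worker size must be positive")
    # Integer division: a partial worker does not fit.
    return usable // avg_worker_mib


# 2 GiB limit, 256 MiB reserved for the web server and OS overhead,
# 64 MiB average per PHP worker (all assumed figures).
print(max_php_workers(2048, 256, 64))
```

Setting the worker count above this value means the container can be killed for exceeding its memory limit under full concurrency, which is the failure mode described above.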

Isolated CPU Cores

CPU contention undermines scaling before it starts. Isolated CPU cores are the guarantee of execution that distinguishes actual scalable hosting from resource-lottery environments. Without pinned vCPU threads per container or reserved compute allocations per pod, autoscaling logic becomes guesswork, and PHP threads fight for cycles alongside unrelated workloads.

WordPress isn’t CPU-intensive per request, but when multiplied by thousands of concurrent logged-in sessions, plugin chains, and cache rebuilds, the execution path saturates quickly. Platform-induced throttling or noisy-neighbor CPU starvation can lead to backend latency, failed page loads, and delayed administrative actions.

Isolated compute environments ensure that PHP-FPM workers have deterministic access to execution cycles. Scaling policies triggered by CPU thresholds (e.g., 70% over 3 minutes) only hold value when the measured load isn’t distorted by shared usage. Whether enforced via Kubernetes CPU requests and limits, cgroups, or VM-level core pinning, the key is consistent compute behavior.

Reserved IOPS Channels

No WordPress environment scales under I/O starvation. Reserved IOPS channels are the gatekeepers of consistent CMS behavior during peak read/write activity. Whether it’s plugin data writes, media loading, cache rebuilds, or database interactions, WordPress demands constant, low-latency disk throughput. And that demand increases significantly during periods of high concurrency.

In shared I/O environments, horizontal scaling is a trap: more replicas just mean more instances queuing on the same saturated channel. Reserved IOPS — per pod, per volume, per node — guarantee baseline disk performance, measured and enforced. With provisioned volumes delivering 1000+ IOPS and maintaining latency under 5ms, WordPress operations avoid cascading failures from blocked file access.

This matters in real-world workloads, such as WooCommerce product updates, background job bursts from WP-Cron, or simultaneous media uploads from multiple regions. Without reserved throughput, performance becomes a coin toss.

Enterprise-grade hosting platforms integrate IOPS guarantees into IaC templates, autoscaling triggers, and observability stacks. They enforce per-container quotas, log disk wait states, and forecast I/O saturation as part of the deployment lifecycle.

CMS-Specific Optimization for WordPress Performance

Scalable hosting is not only about infrastructure; it is also about understanding what runs on that infrastructure. For WordPress, performance stability under enterprise load depends not only on how fast hardware responds, but on whether the platform is behaviorally optimized for the CMS’s unique execution path. CMS-specific optimization is the difference between infrastructure that runs WordPress and infrastructure that runs WordPress well at scale.

This means hosting environments must implement targeted performance logic tailored to how WordPress processes requests, instantiates objects, chains hooks, and interacts with its database layer. These are execution-layer interventions that address repeat bottlenecks in plugin-heavy, user-dense deployments.

In high-traffic enterprise contexts, general-purpose tuning isn’t sufficient. Plugin chains introduce stacked latencies, dynamic admin panels hit concurrency ceilings, and post meta queries under WooCommerce crush the database layer. The only scalable response is a hosting platform that caches intelligently, bypasses redundant logic, and persists objects across replica nodes.

Persistent Object Caching

Persistent object caching is a critical layer in enterprise-grade WordPress scalability. It enables the platform to intercept and reuse execution-layer data — like WP_Query objects, get_option() returns, and post meta payloads — across multiple requests and containerized WordPress instances.

By integrating directly into WordPress’s wp_cache_* functions and wiring into memory-backed stores such as Redis or Memcached, this mechanism bypasses repeated database hits, reduces latency under plugin-heavy loads, and stabilizes performance during traffic bursts.

What makes this caching persistent is its cross-session and cross-replica visibility. Unlike WordPress’s default object cache, which lives only for the duration of a single request inside one PHP process, a shared object store ensures that query results, configuration lookups, and transient data survive autoscaling events and are accessible across all nodes. This consistency is vital in horizontally scaled WordPress environments where plugin logic relies on predictable option resolution and meta field reuse.

Real implementations allocate environment-specific Redis clusters, define object TTLs that align with publishing cycles, and integrate eviction-aware cache sizing.
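
The pattern behind a persistent object cache is cache-aside with a TTL: look in the shared store first, fall back to the database on a miss, and write the result back. The sketch below models it with an in-process dictionary standing in for Redis or Memcached; the `ObjectCache` class and the `load_options` loader are hypothetical names, and the hit ratio is tracked the same way the metric mentioned below would be.

```python
import time


class ObjectCache:
    """Cache-aside store with per-key TTL and hit/miss counters."""

    def __init__(self, clock=time.monotonic):
        self._store = {}      # key -> (value, expiry timestamp)
        self._clock = clock   # injectable so expiry can be tested
        self.hits = 0
        self.misses = 0

    def get_or_load(self, key, loader, ttl_seconds):
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[1] > now:
            self.hits += 1
            return entry[0]
        # Miss or expired: run the expensive lookup once and keep the result.
        self.misses += 1
        value = loader()
        self._store[key] = (value, now + ttl_seconds)
        return value

    def hit_ratio(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def load_options():
    # Stand-in for a database query such as loading autoloaded options.
    return {"siteurl": "https://example.com", "posts_per_page": 10}


cache = ObjectCache()
for _ in range(10):
    cache.get_or_load("alloptions", load_options, ttl_seconds=300)
print(f"hit ratio: {cache.hit_ratio():.0%}")
```

In a horizontally scaled deployment the dictionary would be replaced by a networked store so that every replica sees the same entries.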

Metrics such as >85% hit ratio or sub-50ms query latency on cache hits aren’t embellishments — they’re operational baselines for stable enterprise WordPress. The result isn’t just faster responses; it’s a platform that absorbs pressure without collapsing under read-write chaos.

Server-Level Page Caching

Server-level page caching eliminates the need for WordPress to execute at all on eligible requests. By capturing and serving full HTTP responses for cacheable pages directly from the reverse proxy layer (e.g., NGINX, Varnish), the platform removes PHP, MySQL, and WordPress runtime from the path. This is not about improving performance — it’s about shielding the backend from millions of redundant page requests.

Enterprise platforms implement this as part of their core architecture, wiring in microcaches that serve static HTML for anonymous traffic: blog archives, homepage views, taxonomy listings, and landing pages. Requests are matched, served, and completed without ever waking a PHP process.

With path-aware TTLs, cookie-based bypass logic, and automated purging on content updates, this system allows platforms to scale for burst traffic while reserving backend compute for dynamic workloads.

Effective deployments pair this with webhook-driven invalidation and route-aware cache partitioning — e.g., purging only URLs tagged “announcement” on post publish. Metrics like 90%+ cache efficiency and <100ms TTFB on hits aren’t luxuries; they’re operational requirements. Without this mechanism, even the best horizontal scaling collapses under the volume of traffic that could’ve been answered from memory.
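
Tag-scoped purging, as in the “announcement” example, needs an index from tags to cached URLs. The sketch below shows one minimal way to keep that index; the `PageCache` class and its method names are hypothetical, and production reverse proxies provide their own mechanisms for the same idea.

```python
from collections import defaultdict


class PageCache:
    """Full-page cache with a tag index for selective invalidation."""

    def __init__(self):
        self._pages = {}                       # url -> cached HTML
        self._urls_by_tag = defaultdict(set)   # tag -> urls carrying it

    def store(self, url, html, tags):
        self._pages[url] = html
        for tag in tags:
            self._urls_by_tag[tag].add(url)

    def get(self, url):
        return self._pages.get(url)

    def purge_tag(self, tag):
        """Drop every cached page carrying the tag; return the purged URLs."""
        urls = self._urls_by_tag.pop(tag, set())
        for url in urls:
            # A URL may also be indexed under other tags; those entries
            # become harmless no-ops on a later purge.
            self._pages.pop(url, None)
        return sorted(urls)


cache = PageCache()
cache.store("/", "<html>home</html>", tags=["home", "announcement"])
cache.store("/news/", "<html>news</html>", tags=["announcement"])
cache.store("/about/", "<html>about</html>", tags=["static"])
print(cache.purge_tag("announcement"))  # /about/ stays cached
```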

PHP Runtime Tuning for WordPress Hooks

WordPress is a hook-driven system — every plugin you install, every action or filter, stacks execution logic on top of core functions. When these chains compound across checkout flows, dashboards, or admin panels, they introduce deep, memory-intensive execution stacks that standard PHP configurations weren’t built to handle. PHP runtime tuning for WordPress hooks is the hosting layer’s response to that load.

Enterprise platforms tune PHP-FPM workers with elevated limits for memory, execution time, and nesting depth. They preload hook-heavy files into the opcode cache, raise OPcache limits such as cache memory and the number of cached files, and configure consistent worker behavior across container replicas. This allows filter chains with dozens of plugin callbacks to complete without timeout or memory exhaustion, even under high concurrency.

This optimization isn’t about supporting PHP 8 or “running fast.” It’s about profiling hook stack behavior, allocating enough buffer to process the full chain without interruption, and preventing cascading failures when one plugin adds a few too many callbacks.

Platforms that monitor hook latency, adjust worker thresholds in CI/CD pipelines, and preserve consistent runtime behavior across autoscaled containers ensure that WordPress doesn’t just respond — it completes execution predictably, every time.
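
Hook latency monitoring can be pictured with a small model of a filter chain. This is a Python illustration of the concept, not WordPress code: it mirrors the way `apply_filters` runs callbacks in priority order (lower numbers first, default 10) and records the time spent in each callback. The `Hooks` class and the sample callbacks are hypothetical.

```python
import time
from collections import defaultdict


class Hooks:
    """Priority-ordered filter chain that records per-callback latency."""

    def __init__(self):
        self._filters = defaultdict(list)   # hook -> [(priority, seq, callback)]
        self._seq = 0                       # preserves registration order on ties
        self.timings = defaultdict(float)   # callback name -> total seconds

    def add_filter(self, hook, callback, priority=10):
        self._filters[hook].append((priority, self._seq, callback))
        self._seq += 1

    def apply_filters(self, hook, value):
        ordered = sorted(self._filters[hook], key=lambda entry: entry[:2])
        for _, _, callback in ordered:
            start = time.perf_counter()
            value = callback(value)
            self.timings[callback.__name__] += time.perf_counter() - start
        return value


def add_site_suffix(title):
    return title + " | Example Site"


def uppercase(title):
    return title.upper()


hooks = Hooks()
hooks.add_filter("the_title", add_site_suffix, priority=20)
hooks.add_filter("the_title", uppercase, priority=10)
print(hooks.apply_filters("the_title", "hello"))
slowest = max(hooks.timings, key=hooks.timings.get)
print("slowest callback:", slowest)
```

Aggregating timings per callback is what makes it possible to spot the single plugin that adds too many or too slow callbacks to a chain.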

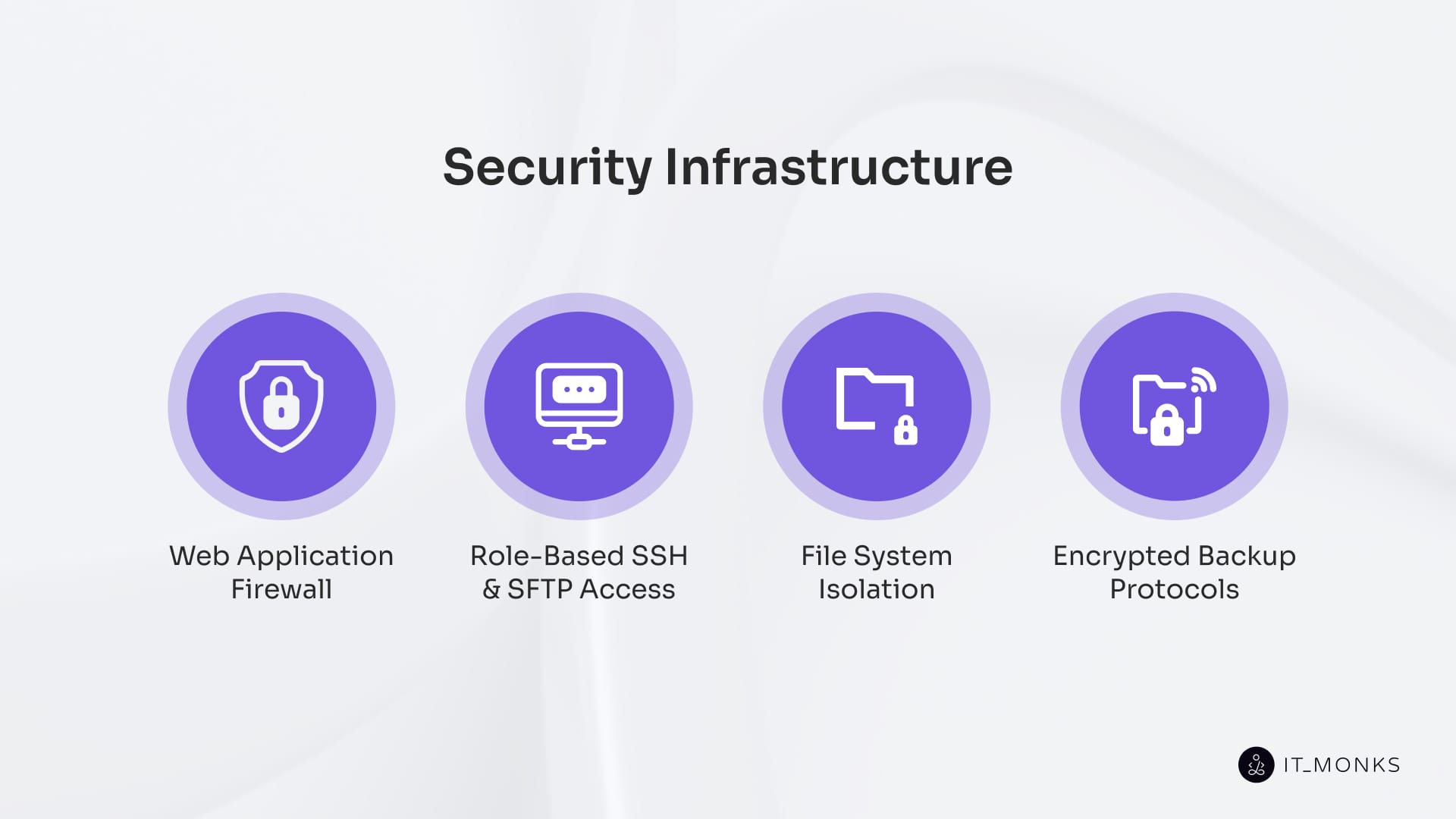

Security Infrastructure for Enterprise Hosting Environments

Security infrastructure is a structural prerequisite for scalable WordPress hosting in enterprise environments. As the execution layer expands across containers, nodes, and regions, the threat surface grows proportionally. To be considered scalable at the enterprise level, hosting must enforce threat mitigation at the infrastructure layer, rather than patching it at runtime with plugins or afterthoughts.

This includes per-instance file system isolation, immutable encrypted backups, and zero-trust access enforcement that prevents lateral movement across tenants. These are the baselines for uptime continuity under attack, CI/CD pipeline integrity, and compliance with frameworks and regulations such as SOC 2 and GDPR.

Scalable platforms intercept and neutralize malicious input before it hits PHP, log and restrict every access session with auditable roles, and decouple recovery logic from the infected runtime. When executed correctly, this architecture ensures that no plugin vulnerability, misconfigured file, or privilege escalation attempt can cascade beyond its origin. The result is a stable, resilient hosting system capable of scaling without compromise.

Web Application Firewall (WAF)

A platform-native WAF is the non-negotiable first barrier in scalable WordPress hosting, designed to inspect and block malicious traffic before it ever touches PHP or CMS logic. It sits at the edge of the infrastructure and enforces strict layer 7 filtering based on request origin, payload structure, and known exploit patterns. Its role isn’t passive. It doesn’t observe. It stops.

In the context of WordPress, this means neutralizing brute-force attacks against login endpoints, sanitizing injection attempts targeting query strings and form handlers, and enforcing strict filters on paths such as xmlrpc.php, wp-json, and admin-ajax.php. It protects against OWASP-class threats — SQLi, XSS, CSRF, file inclusion — not by relying on plugin logic but by refusing bad payloads outright.

At an enterprise scale, the WAF must maintain per-tenant policy alignment, synchronize threat signatures in real-time, and generate audit trails for all blocked actions. Its job is to guarantee that no malformed input ever reaches WordPress execution paths, regardless of how clever, automated, or distributed the attack might be. Scalability without this layer is just exposed surface area.
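
The filtering steps described above (path rules, payload inspection, and brute-force throttling on the login endpoint) can be sketched as a single check function. This is a deliberately small model: the three regex patterns, the limit of five login attempts per minute, and the `EdgeFilter` name are assumptions, and a production WAF would rely on a maintained rule set such as the OWASP Core Rule Set rather than a hand-written pattern.

```python
import re
from collections import defaultdict, deque

BLOCKED_PATHS = ("/xmlrpc.php",)
# Toy signatures only; real rule sets are far broader and decode input first.
SUSPICIOUS = re.compile(r"(union\s+select|<script|\.\./)", re.IGNORECASE)


class EdgeFilter:
    """Evaluates a request before it is allowed to reach PHP."""

    def __init__(self, max_logins=5, window_seconds=60):
        self.max_logins = max_logins
        self.window = window_seconds
        self._attempts = defaultdict(deque)   # ip -> login attempt timestamps

    def check(self, ip, method, path, query, now):
        if path in BLOCKED_PATHS:
            return "block: path"
        if SUSPICIOUS.search(query):
            return "block: payload"
        if method == "POST" and path == "/wp-login.php":
            attempts = self._attempts[ip]
            # Forget attempts that have aged out of the sliding window.
            while attempts and now - attempts[0] >= self.window:
                attempts.popleft()
            if len(attempts) >= self.max_logins:
                return "block: rate"
            attempts.append(now)
        return "allow"


waf = EdgeFilter()
print(waf.check("203.0.113.7", "GET", "/", "id=1 UNION SELECT user_pass", now=0))
print(waf.check("203.0.113.7", "GET", "/blog/", "page=2", now=1))
```

Each decision string could be written to an audit log, which corresponds to the audit-trail requirement above.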

File System Isolation per Site Instance

In a secure hosting environment, each WordPress instance must operate in total file system isolation. That means no cross-directory visibility, no shared volume exposure, and no accidental or malicious leakage across containers or tenants.

True isolation prevents plugin exploits or injected payloads on one site from traversing to another. It locks down symbolic link abuse, jails runtime processes within strict namespace boundaries, and ensures backups and automation workflows can’t cross security lines. In multi-tenant architectures, this is the only barrier standing between a local compromise and a platform-wide disaster.

At scale, this isolation is executed through OS-level jailing, container-mounted volumes, and per-namespace access controls defined in orchestration templates. Without it, every WordPress environment shares risk, making security reactive instead of preventative. Segregated file systems make exploits local, containable, and recoverable.
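
The symbolic-link and traversal concerns above reduce to one check: after resolving links, does the requested path still sit inside the site's own root? The sketch below shows that check at the application level, assuming a POSIX file system; the function name and directory layout are hypothetical, and OS-level jailing remains the actual enforcement layer.

```python
import os


def is_inside_jail(jail_root: str, requested_path: str) -> bool:
    """True if the path, with symlinks resolved, stays under jail_root."""
    root = os.path.realpath(jail_root)
    # realpath follows symlinks and collapses ".." segments.
    target = os.path.realpath(os.path.join(root, requested_path))
    return os.path.commonpath([root, target]) == root


print(is_inside_jail("/srv/sites/site-a", "wp-content/uploads/logo.png"))
print(is_inside_jail("/srv/sites/site-a", "../site-b/wp-config.php"))
```

Comparing resolved paths rather than raw strings is what catches a symlink inside one site that points into another tenant's directory.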

Encrypted Backup Protocols

Backups are not just a recovery mechanism; they’re a security asset. If they’re readable, they’re vulnerable. If they’re stored alongside runtime infrastructure, they’re exposed. In scalable WordPress hosting, backups must be encrypted end-to-end, stored off-host, and locked behind platform-level access controls with verifiable integrity.

This includes full AES-256 encryption at rest, TLS 1.3 in transit, and IAM-scoped permissions for every restore operation. Snapshots must be immutable (i.e., no tampering, no overwrites, and no hidden payloads) and validated with checksum verification before being deployed to live environments. Restoration must be possible without reinfection, even in the middle of an active compromise.
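
Checksum validation before a restore can be sketched with the standard library alone. The example assumes the expected SHA-256 digest was recorded when the snapshot was taken and is stored separately from the snapshot itself; the function names are illustrative, and encryption is out of scope for this sketch.

```python
import hashlib
import hmac


def sha256_of(path: str, chunk_size: int = 1024 * 1024) -> str:
    """Stream the file through SHA-256 so large snapshots fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_snapshot(path: str, expected_hex: str) -> bool:
    """Compare the snapshot's digest with the recorded value."""
    # compare_digest avoids leaking where the strings first differ.
    return hmac.compare_digest(sha256_of(path), expected_hex.lower())
```

A restore pipeline would call `verify_snapshot` first and refuse to proceed when it returns `False`.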

In enterprise contexts, these backups are versioned, regionally redundant, and integrated with RBAC policies. Platform-managed encryption keys are rotated automatically. Forensic logging traces every snapshot lifecycle event.

Role-Based SSH and SFTP Access

Shell and file access are among the most powerful and dangerous vectors in any hosting platform. Role-based SSH and SFTP access enforces strict privilege boundaries, ensuring that no one can access more than their function requires. This is not about “giving developers access.” It’s about confining, auditing, and revoking access with zero margin for error.

Every session is scoped by role, environment, and container. A developer might have staging shell access, but production is locked behind CI automation. SFTP mounts are jailed per tenant, not per user. SSH keys are issued through identity-governed workflows, time-bound, and fully logged. Nothing is granted globally. Everything is auditable.
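As a rough sketch of that scoping, the check below allows a session only when the role permits the environment, the grant matches the requested environment and container, and the grant has not expired. The `AccessGrant` fields, the role names, and the `ROLE_ENVIRONMENTS` mapping are illustrative assumptions, not a real platform's policy model.

```python
from dataclasses import dataclass

# Hypothetical policy: which environments each role may open a session in.
ROLE_ENVIRONMENTS = {
    "developer": frozenset({"staging"}),
    "ci-automation": frozenset({"staging", "production"}),
}


@dataclass(frozen=True)
class AccessGrant:
    role: str
    environment: str
    container: str
    expires_at: float  # Unix timestamp after which the grant is invalid


def is_session_allowed(grant: AccessGrant, environment: str,
                       container: str, now: float) -> bool:
    """Allow a session only if role, environment, container, and time all match."""
    allowed_envs = ROLE_ENVIRONMENTS.get(grant.role, frozenset())
    return (
        environment in allowed_envs
        and grant.environment == environment
        and grant.container == container
        and now < grant.expires_at
    )
```

Unknown roles map to an empty set, so the default is denial rather than access.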

This prevents credential leakage, accidental overwrites, and silent privilege escalation. It enforces the separation of duties between dev, ops, and support. It aligns with enterprise access policies, integrates with identity providers, and allows forensic replay of every session.

SLA and Uptime Guarantees for Enterprise Reliability

Scalable hosting for enterprise WordPress environments isn’t complete without enforceable uptime commitments codified as SLAs and backed by resilient infrastructure, real-time monitoring, and accountable escalation paths. These are contractual thresholds that define platform reliability under production pressure.

SLAs establish measurable availability targets, typically 99.99%, and are supported by multi-zone architecture, automated failover, and continuous health verification. When an incident occurs, the platform must respond within strict timeframes, provide traceable reporting, and apply service credits or penalties without delay or friction. In this context, availability is engineered, not promised.

For enterprise WordPress workloads — whether WooCommerce checkouts, LMS syncs, or real-time publishing pipelines — downtime isn’t an inconvenience. It’s lost business, broken automation, and potential data loss. SLA enforcement ensures that the platform not only scales under load but survives infrastructure failures without breaching operational continuity.

99.99% Uptime SLA

A 99.99% uptime SLA is a contractual ceiling on failure tolerance: it allows no more than about 4.38 minutes of unplanned downtime in an average month, or roughly 52.6 minutes per year. This threshold demands platform architecture that actively absorbs faults through redundancy, zone-aware deployment, and failover orchestration designed for live continuity, not just reboot recovery.
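The monthly figure follows from simple arithmetic, sketched here. The helper name `downtime_budget_minutes` is hypothetical, and an average month is assumed to be 365/12 days.

```python
def downtime_budget_minutes(sla_percent: float, period_days: float) -> float:
    """Return the downtime allowed by an SLA over a period, in minutes."""
    if not 0 <= sla_percent <= 100:
        raise ValueError("sla_percent must be between 0 and 100")
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - sla_percent / 100)


if __name__ == "__main__":
    # Average month (365 / 12 days): about 4.38 minutes at 99.99%.
    print(round(downtime_budget_minutes(99.99, 365 / 12), 2))
    # 30-day month: about 4.32 minutes.
    print(round(downtime_budget_minutes(99.99, 30), 2))
    # Full year: about 52.56 minutes.
    print(round(downtime_budget_minutes(99.99, 365), 2))
```

The same function shows how quickly the budget grows at lower tiers: 99.9% over a 30-day month allows about 43.2 minutes.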

The SLA is environment-specific, measured externally in 60-second intervals from geographically distributed nodes, and tied to remediation terms that include automatic service credits for breach conditions. There’s no ambiguity: uptime is calculated, logged, published, and enforced — per container, per site, per region.
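One way to picture the measurement is a sketch that turns per-interval probe outcomes into an availability percentage and a breach flag. It assumes each 60-second interval has already been reduced to a single up/down result; how results from multiple probe nodes are combined is left out, and the function names are hypothetical.

```python
from typing import Sequence


def availability_percent(probe_results: Sequence[bool]) -> float:
    """Percentage of probe intervals in which the site was reachable."""
    if not probe_results:
        raise ValueError("at least one probe result is required")
    up = sum(1 for ok in probe_results if ok)
    return 100.0 * up / len(probe_results)


def breaches_sla(probe_results: Sequence[bool], sla_percent: float = 99.99) -> bool:
    """True when measured availability falls below the SLA target."""
    return availability_percent(probe_results) < sla_percent


if __name__ == "__main__":
    intervals_in_30_days = 30 * 24 * 60          # one probe per minute
    four_down = [False] * 4 + [True] * (intervals_in_30_days - 4)
    five_down = [False] * 5 + [True] * (intervals_in_30_days - 5)
    print(breaches_sla(four_down))   # 4 failed minutes stay within 99.99%
    print(breaches_sla(five_down))   # 5 failed minutes breach it
```

In a 30-day window, the fifth failed minute is what tips the measurement below 99.99%, which matches the roughly 4.3-minute budget.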

For enterprise WordPress workloads, this guarantee ensures that checkout flows don’t hang, scheduled jobs don’t fail silently, and content delivery continues uninterrupted, even if a zone goes offline. It’s not about best-effort uptime. It’s about engineered continuity at the infrastructure layer, verifiable by both humans and contracts.

Dedicated Support Channels

Dedicated support channels in enterprise hosting are not about being “available” but rather about being escalation-ready. These channels exist outside generic queues, wired directly into SLA workflows, and staffed by engineers who understand container topologies, runtime diagnostics, and how WordPress behaves under platform-level pressure.

Each enterprise environment is assigned access to high-priority paths (such as Slack Connect, dedicated hotlines, or secure portals) mapped to specific roles, severities, and workloads. P1 incidents trigger live human response within defined SLA windows (e.g., 15 minutes), and support agents are pre-briefed on the site’s operational context rather than guessing in the dark.

This is about resolving incidents under time constraints with platform-side visibility. From cache-level regression to PHP worker saturation, support must act as a runtime extension of DevOps, not a disconnected helpdesk. Enterprise reliability demands that when something breaks, the response is fast, informed, and operational, not polite.

Cost Efficiency of Hosting Service

Cost efficiency in enterprise WordPress hosting is about how the platform design aligns infrastructure spend with runtime behavior and operational scale. It means every dollar spent is traceable to a compute action, a traffic burst, a deployment event, or a resource demand, not to idle infrastructure or vendor flat-rate markup.

Scalable hosting achieves cost efficiency through elastic orchestration, usage-based metering, and intelligent workload shaping. Containers scale with traffic, not calendar time. PHP workers are spun up during checkout spikes and spun down after. CDN edge layers suppress backend traffic, reducing origin IOPS and compute load without affecting delivery speed.
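A scaling decision of this kind can be sketched as a small function that sizes the PHP worker pool from current demand. The parameter names and the bounds used below are assumptions for illustration; real platforms typically add smoothing and cooldown periods on top of a rule like this.

```python
import math


def desired_workers(in_flight_requests: int, requests_per_worker: int,
                    min_workers: int, max_workers: int) -> int:
    """Return the worker count needed for current load, clamped to a range."""
    if requests_per_worker <= 0:
        raise ValueError("requests_per_worker must be positive")
    if min_workers > max_workers:
        raise ValueError("min_workers cannot exceed max_workers")
    needed = math.ceil(in_flight_requests / requests_per_worker)
    return max(min_workers, min(max_workers, needed))


if __name__ == "__main__":
    print(desired_workers(0, 4, 2, 20))     # idle: stays at the floor
    print(desired_workers(37, 4, 2, 20))    # checkout spike: scales up
    print(desired_workers(500, 4, 2, 20))   # flood: capped at the ceiling
```

The floor keeps a warm baseline, and the ceiling bounds spend during a burst, which is where the cost predictability comes from.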

Enterprise teams gain visibility through per-environment spend dashboards, with granularity down to the level of CPU cycles, memory use, and storage bandwidth. This enables forecasting, cost modeling, and team-level accountability. Instead of wasting budget on always-on VMs or over-provisioned instances, the cost becomes a function of the performance delivered.

When integrated with developer workflow automation, caching strategy, and architectural discipline, cost efficiency becomes more than a billing feature. It becomes a measurable component of platform scale, resilience, and operational clarity.

Integration Readiness

Enterprise-grade hosting isn’t integration-ready just because it allows HTTP requests to reach WordPress. It’s integration-ready when it exposes, secures, and governs those interactions at scale, under load, and with full observability. Actual readiness means the hosting platform functions as an extension of the enterprise network, not a black box behind a plugin.

This includes API ingress that enforces per-tenant authentication policies, webhook listeners that respond to runtime triggers like user_register or order_complete, and outbound delivery mechanisms that handle retries, failures, and structured payload shaping. Integration points are treated as first-class platform interfaces — rate-limited, audited, and identity-scoped.

Whether syncing user data with a CRM, triggering ERP workflows on order status change, or pushing event logs into a SIEM, the hosting stack must offer both the elasticity and security to operate in real-time, high-frequency environments without degrading WordPress performance.

When integration is native to the platform — not bolted on through middleware or exposed via raw CMS routes — it becomes an operational asset. Enterprise teams gain architectural control, consistent identity propagation, and assurance that their automation won’t break when traffic surges or security policies evolve.

CRM and ERP Integration Support

Enterprise-grade hosting must enable direct, bi-directional integration between WordPress and systems like CRM and ERP platforms, without relying on plugins, manual exports, or brittle middleware. This requires native orchestration that transforms WordPress events (e.g., new user registrations, completed purchases) into structured payloads delivered through authenticated, rate-managed, and retry-safe channels.

Inbound updates — such as pricing rules, user entitlements, or role mappings — must flow through secured endpoints governed by per-tenant IAM policies and tied to clearly scoped platform APIs. All traffic is observable, logged, and governed by schema validation and authentication boundaries (OAuth2, JWT, client certificates).

Real-time data syncs between WordPress and enterprise systems are handled through container-side listeners or platform-managed webhook infrastructure, ensuring delivery integrity even under load. Event queues buffer bursty operations, while retry logic ensures reliability without compromising SLA latency targets or flooding downstream systems.
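The retry behavior can be sketched with capped exponential backoff. This is an illustrative pattern, not a description of any particular platform's delivery engine; `send` stands in for whatever call pushes an event to the CRM or ERP, and the default delays are assumptions.

```python
import time
from typing import Callable, List


def backoff_delays(max_attempts: int, base_seconds: float = 1.0,
                   cap_seconds: float = 60.0) -> List[float]:
    """Delays before each retry, doubling from base_seconds up to a cap."""
    return [min(cap_seconds, base_seconds * 2 ** i)
            for i in range(max_attempts - 1)]


def deliver_with_retry(send: Callable[[], bool], max_attempts: int = 5,
                       sleep: Callable[[float], None] = time.sleep) -> int:
    """Call send() until it returns True; return the number of attempts used.

    Raises RuntimeError once max_attempts is exhausted, so the caller can
    move the event to a dead-letter queue instead of retrying forever.
    """
    delays = backoff_delays(max_attempts)
    for attempt in range(1, max_attempts + 1):
        if send():
            return attempt
        if attempt < max_attempts:
            sleep(delays[attempt - 1])
    raise RuntimeError(f"delivery failed after {max_attempts} attempts")
```

Capping both the delay and the attempt count is what keeps retries from flooding a downstream system that is already struggling. Production systems often add random jitter to the delays as well.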

API Access and Webhook Triggers

In modern hosting infrastructure, API access and webhook triggers are structured, platform-governed interfaces for secure and scalable system communication. Hosting platforms must expose WordPress APIs through rate-limited, identity-scoped ingress layers such as gateways or proxy routers, ensuring that every request is authenticated, observed, and controlled per tenant.
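Per-tenant rate limiting at the ingress layer is commonly built on a token bucket. The sketch below is one minimal version of that idea; the class names and the choice to pass the clock in as `now` are assumptions made to keep the example deterministic.

```python
from typing import Dict


class TokenBucket:
    """Allows bursts up to `capacity`, refilling at a steady rate."""

    def __init__(self, capacity: float, refill_per_second: float, now: float = 0.0):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.tokens = capacity
        self.updated_at = now

    def allow(self, now: float) -> bool:
        # Refill for the time elapsed since the last call, then spend one token.
        elapsed = max(0.0, now - self.updated_at)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.updated_at = max(self.updated_at, now)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class TenantRateLimiter:
    """Keeps one bucket per tenant so one tenant cannot starve another."""

    def __init__(self, capacity: float, refill_per_second: float):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self._buckets: Dict[str, TokenBucket] = {}

    def allow(self, tenant_id: str, now: float) -> bool:
        bucket = self._buckets.get(tenant_id)
        if bucket is None:
            bucket = TokenBucket(self.capacity, self.refill_per_second, now)
            self._buckets[tenant_id] = bucket
        return bucket.allow(now)
```

With a capacity of 2 and a refill rate of one token per second, a tenant can send two requests at once, is rejected on the third, and is allowed again a second later, while other tenants are unaffected.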

On the outbound side, platform-integrated webhook systems must translate WordPress events into delivery-ready payloads that are signed, queued, and routed with retry safety. Whether it’s a user_login event triggering a security audit log, or a form_submission routed to a CRM, webhook dispatch happens via platform infrastructure, not improvised code inside the theme folder.

Authentication is enforced at every layer: JWTs or OAuth2 tokens with role-based scopes, shared HMAC secrets for webhook validation, and per-tenant isolation for event routing and failure logging. No endpoint is exposed without governance, and no delivery is left unaudited.
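Webhook validation with a shared HMAC secret can be sketched as follows. The example assumes HMAC-SHA256 over the raw request body with a hex-encoded signature; the function names are hypothetical, and real integrations differ in header names and encoding.

```python
import hashlib
import hmac


def sign_payload(secret: bytes, body: bytes) -> str:
    """Sender side: compute a hex HMAC-SHA256 signature over the raw body."""
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def verify_signature(secret: bytes, body: bytes, received_signature: str) -> bool:
    """Receiver side: recompute the signature and compare in constant time."""
    expected = sign_payload(secret, body)
    return hmac.compare_digest(expected, received_signature)


if __name__ == "__main__":
    secret = b"per-tenant-shared-secret"   # illustrative value only
    body = b'{"event": "user_register", "user_id": 42}'
    signature = sign_payload(secret, body)
    print(verify_signature(secret, body, signature))
    print(verify_signature(secret, body + b" ", signature))
```

Signing the raw bytes, rather than a re-serialized copy of the payload, avoids mismatches caused by whitespace or key ordering. Using a separate secret per tenant keeps a leaked secret from affecting other tenants.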

Why Is Scalable WordPress Hosting Important for Enterprise?

Enterprise websites are system-connected operational platforms. Whether serving as eCommerce engines, identity portals, content hubs, or integration bridges, they operate as interface nodes in larger digital ecosystems. Their uptime, responsiveness, and integration behavior have a direct impact on revenue, automation, and compliance. And none of those demands tolerate infrastructure that stalls under load.

Scalability is the difference between continuity and collapse during traffic spikes, API floods, or parallel deployment cycles. Platforms built for scalability enforce concurrency limits, isolate execution environments, and provision resources dynamically across container pools.

In practice, this means an enterprise WordPress site can handle sudden traffic bursts, feed data to CRM systems, and process thousands of webhook events per minute, without human intervention or runtime degradation.

Without this infrastructure readiness, the enterprise website becomes a liability. API routes choke under concurrency. Form submissions queue up and fail silently. System-wide rollouts hit resource ceilings mid-deploy. Marketing campaigns stall during peak conversion periods. The issue isn’t “downtime”; it’s systemic fragility at scale.

Every major enterprise team feels the cost of unscalable hosting:

- DevOps can’t maintain SLAs without dynamic scaling and per-environment resource shaping.

- Marketing loses conversion during latency spikes and caching breakdowns.

- Security faces risk from shared resource pools and uncontrolled multitenancy.

- Data teams lose observability and event fidelity when the platform scales in an uncontrolled, unpredictable way.

- Finance is impacted by overprovisioned baselines and budget volatility during traffic bursts.

By contrast, scalable hosting enables role-specific elasticity and fault isolation. It delivers API-integrated orchestration, not just faster pages. It enforces horizontal scaling on demand, keeping PHP workers, database reads, and object storage aligned with request pressure. It ensures system interactions remain resilient under dynamic conditions.

In contexts like enterprise website development, hosting isn’t just a deployment decision. It’s an architectural dependency. The CMS alone cannot carry the load of enterprise automation, user concurrency, or system interoperability. Only infrastructure that treats WordPress as a deployable workload — not a standalone app — can deliver on uptime, security, and operational accountability.

Scalable WordPress hosting transforms a CMS into an enterprise service tier. Without it, every other layer — performance, observability, cost control, integration — becomes a bottleneck. In enterprise systems, bottlenecks become significant business risks.
