Server Side Testing

Table of Contents

Server side testing places experimentation decisions on the server within the request/response lifecycle, so the decision point determines which variant is rendered before content is delivered. The workflow moves through server-side decisioning, rendering, delivery, measurement, rollout, and caching, shaping consistency, latency, attribution, and data quality before users receive any output.

Server-side A/B testing serves as the reference point, and client-side testing is then contrasted to show how the decision point location affects flicker, consistency, and control. In this comparison, the benefits of server-level decisioning are evaluated against constraints such as implementation complexity, latency impact, and operational overhead.

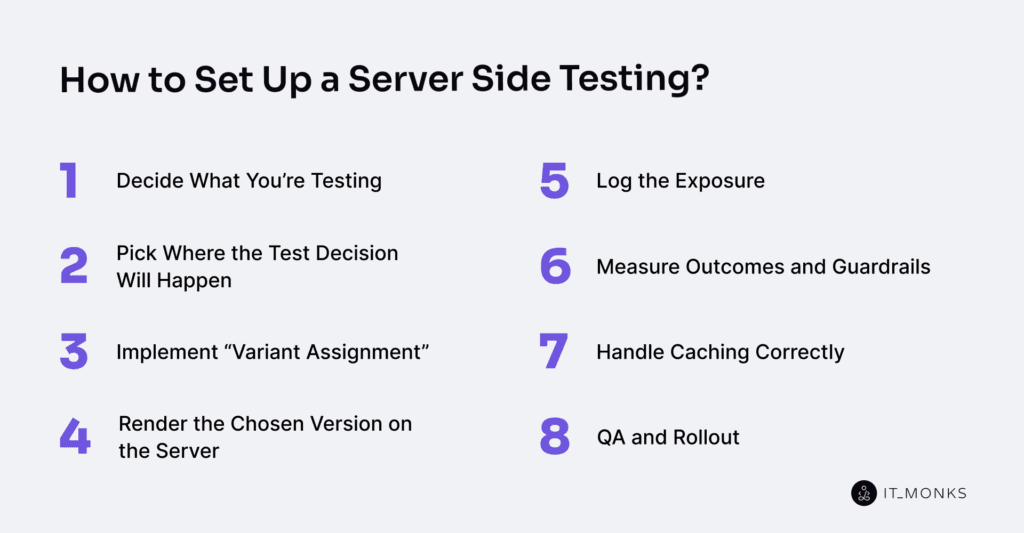

The workflow then translates into setup steps: choosing what to test, defining where the decision happens, assigning variants and traffic, rendering output on the server, logging exposure events, measuring outcome and guardrail metrics, managing caching and cache invalidation, and validating changes through QA and controlled rollout. These requirements align with experimentation platforms and tools that support assignment, logging, and measurement.

Only after this flow is established does the context extend to WordPress website development, where server-rendered PHP output, theme and plugin rendering paths, hosting stacks, CDN and object caching, and analytics instrumentation make these dependencies visible in everyday site work.

In this environment, rendering paths, caching layers, and instrumentation choices constrain assignment consistency, measurement accuracy, and rollout safety under real server and deployment conditions.

What is Server Side A/B Testing?

Server side A/B testing is an experimentation method in which the server decides which test variant to show a visitor before rendering and delivery. The decision point exists in the server’s request/response lifecycle.

For each request, the server assigns a control or treatment using a stable assignment key (e.g., a user, session, or request identifier), renders the chosen output, delivers the response, and logs an exposure event to measure outcomes and guardrails. Its defining attributes are decision_location=server, decision_timing=pre-response, assignment_unit=user/session/request, render_location=server, and exposure_logging=required.

“Server side” means the decision is made in server-controlled logic such as application code, edge or server functions, reverse proxy logic, or an experimentation service called by the server.

In WordPress website development, server-side A/B testing is defined by placement in the delivery pipeline. Because WordPress output is typically PHP-rendered and affected by hosting stacks, caching plugins, CDNs, and analytics instrumentation, variant assignment, rendering, and exposure logging must remain aligned with cache keys, TTL values in seconds, and latency in milliseconds to maintain consistent and reliable measurement.

What are the Differences Between Server Side and Client Side Testing?

The differences between server-side testing and client-side testing are mainly defined by where the decision is made and when it happens in the request/response lifecycle. This difference shapes how variants are rendered, what users see, how exposure is logged, how results are measured, and how performance, caching, and operations behave.

In server side testing, the decision is made on the server during the incoming request, before the page is rendered. The server assigns a user to a variant (control or treatment) using a deterministic key such as a cookie, session ID, or user ID, and renders the selected variant directly into the response HTML.

In client-side testing, the decision is made in the browser after the response is received. The browser first loads a baseline version of the page, then client-side logic assigns a variant and modifies the DOM after page load. This difference affects rendering and user experience.

Exposure logging also differs. With server-side testing, exposure is logged when the server decides and renders the variant, so the logged exposure always matches what was delivered. With client side testing, exposure is logged only after scripts run and update the DOM, which makes logging dependent on browser conditions, JavaScript execution, and blockers.

These differences impact measurement and attribution. Server-side testing keeps assignment, exposure, and outcome tracking within a single controlled flow, leading to more reliable attribution and guardrail metrics. Client-side testing relies more on front-end execution, which increases the risk of missed events, partial attribution, or data loss.

There are also performance and caching differences. Server side testing can add small latency during request handling and requires careful cache key setup to avoid serving the wrong variant. Client side testing usually serves the same cached page to everyone and applies changes in the browser, which simplifies caching but adds work during rendering.

Finally, ownership and failure modes differ. Server-side testing is typically handled by backend or platform teams and often fails when it falls back to a default variant. Client side testing is usually owned by frontend teams and can fail silently when scripts do not load, execute, or log correctly.

These differences also matter on WordPress because the platform is typically built around server-rendered pages, caching layers, and predefined analytics configurations. In this environment, whether the test decision happens on the server or in the browser directly affects how consistently variants are delivered and how reliably data is measured.

What are the Advantages of the Service Side A/B Testing?

The advantages of server-side A/B testing include a stable first-paint experience, more reliable measurement, and stronger control over experiment delivery.

When the variant is selected at the server decision point before rendering, the response is sent in its final form. This stabilizes the page at first paint and reduces flicker risk compared to post-load changes, preventing hydration mismatch, layout shifts, and added interaction latency (ms).

This server-side assignment also enables deterministic bucketing that remains consistent across requests and, where applicable, across devices. The result is reduced re-bucketing and allocation drift (%) and a more accurate comparison of outcome metrics, such as conversions or error rates, which you can explore further in our conversion rate optimization guide.

Since the server knows which variant was delivered, it can emit an exposure log or event when the response is served. This improves attribution reliability by directly linking exposure to outcomes and reduces data loss caused by ad blockers, client-side failures, or privacy constraints.

Clearer attribution allows guardrails to be monitored with higher confidence. Server-side measurement enables earlier detection of regressions in latency, error rate, or backend load, helping teams limit impact and reduce rollout risk.

As delivery passes through the cache/CDN layer, server side A/B testing supports cache-aware delivery. With proper cache key segmentation and TTL (seconds) configuration, variants remain consistent while still benefiting from caching, avoiding cross-variant leakage that would distort measurements.

With measurement and delivery stabilized at the infrastructure level, rollout becomes an operational decision. Because experiments align with backend deployment practices, rollout strategy, QA validation, and monitoring are easier to manage. Server side A/B testing supports segmentation rules, gated releases, canary rollouts, and fast rollback paths, improving control during experimentation.

In WordPress website development, pages are typically server-rendered and delivered through layered caching, so these advantages improve delivery consistency and measurement integrity without framing server-side A/B testing as a WordPress-specific feature.

What are the Disadvantages of the Service Side A/B Testing?

The disadvantages of server-side A/B testing include increased implementation complexity, higher caching risk, and greater measurement overhead.

Server-side A/B testing requires decision logic, variant assignment, and a consistent rendering path to be handled on the backend. This adds more backend components to manage and tightly couples decision services, data storage, and templates, increasing maintenance effort and slowing development changes.

Since the server decides and renders each response, every request may involve a decision service or a feature flag evaluation. This adds latency and creates an infrastructure dependency. If the decision service slows down or fails, variant assignment can break or fall back incorrectly, increasing the risk of rollout and rollback.

Accurate measurement in server-side testing relies on a reliable exposure log/event, which increases the instrumentation burden in the analytics pipeline. Exposure events must be logged at the correct point in the rendering path and deduplicated per user or session.

If logging is missed or duplicated, attribution becomes inaccurate, and both outcome metrics and guardrail metrics degrade, especially during partial rollouts or high traffic volumes.

When responses pass through a caching layer and a CDN, server-side testing risks cache contamination if cache keys and TTLs (seconds) are not assigned deterministically.

Misconfigured cache segmentation can mix variants or serve inconsistent experiences across requests, breaking assignment consistency and data validity. Cache configuration and invalidation, therefore, become mandatory operational requirements.

As server-side experiments span decisioning, rendering, logging and caching, observability/monitoring, debugging, and incident response/rollback, these tasks become more difficult.

Variant-specific regressions are harder to detect without detailed logs, metrics, and alerts, and failures take longer to isolate. Ownership is split across backend, frontend, and data teams, which increases coordination cost and slows incident response.

In WordPress website development environments, these disadvantages are often amplified. Server-rendered HTML, theme and plugin variability, and layered caching or CDN setups increase sensitivity to rendering consistency, cache correctness, and logging discipline unless setup, QA, and rollout controls are carefully managed.

How to Set UP a Server Side Testing?

Decide What You’re Testing

Start by naming a single test objective and locking it to a clear control and variant before any technical work begins. Identify the change surface, the exact server-rendered element that differs between control and variant (content block, layout fragment, pricing rule, CTA, template component, or response logic), and constrain it to a specific route or page type, as commonly required in landing page optimization.

Set explicit audience eligibility rules and a traffic allocation (%) so variant assignment is deterministic from the first request. If the change surface, eligibility, or metrics are unclear here, later steps cannot reliably assign variants or interpret exposure.

Analyze success using one primary outcome metric that the experiment measures and attributes to exposure (such as conversion rate %, revenue per request, or completion rate within a defined measurement window). Add at least one guardrail metric that guards against regression (for example, latency in ms or error rate %), with stated limits.

Name the exposure event that logs when a variant is seen, the attribution window, and clear stop/rollback criteria that stop or roll back the test if guardrails breach or results invalidate the baseline. Treat sample size awareness only as a check that traffic volume can support the metrics.

Finally, close with a feasibility check: the change must be deliverable on the server, assignable deterministically, and measurable via exposure logging and outcome tracking despite caching layers common in WordPress website development environments (templates, themes, plugin-rendered components).

Pick Where the Test Decision Will Happen

The experiment decision point is where a variant is decided and enforced throughout the request/response lifecycle for the entire response. This single decision location determines assignment, rendering, exposure logging, and caching behavior, so it must be chosen first.

The decision can occur on the application server, at the edge, in a reverse proxy/load balancer, or via an external decision service. Each option runs on a different server layer, reads identifiers differently, and interacts with the cache/CDN in distinct ways.

Choose the decision point using a constraint-driven checklist: identify where the request first reaches infrastructure you control; where you can read and write a stable identifier (cookie, session, or header); where logic can run within the latency budget; where the chosen variant can be enforced before rendering and caching; and where you can log exposure reliably.

The decision point should also be owned by the same team responsible for rendering, logging, and cache behavior to avoid silent consistency breaks.

On the application server, the decision runs during backend execution, reads cookies or sessions, persists the assignment, renders the full response, and logs exposure in the same runtime. This is often the most practical choice when PHP rendering and theme/template output define the response, as in WordPress website development, but it must be compatible with any caching plugin or CDN in front of the origin.

In a reverse proxy or load balancer, the decision occurs during routing. The proxy reads headers or cookies, selects a variant, forwards the request to a variant-specific upstream, and segments cache keys, but only works if it can also emit exposure signals.

With an external decision service, the application or edge calls out for an assignment. The call must still happen at a layer that can enforce the result before rendering and caching, with defined fallback behavior on timeout.

The decision point must be consistent across all pages in the test scope. Mixing decision layers breaks deterministic assignment, causes cache drift, and corrupts measurement. Pick one location that can see the request, access a stable identifier, decide within the latency budget, enforce the full response before caching, log exposure reliably, and keep it fixed for the entire experiment.

Implement “Variant Assignment”

Variant assignment maps each incoming request to either the control or a variant through a stable mapping between an identifier, a bucket, and a variant. The goal is consistency across requests: the same user, session, or request should always be assigned to the same variant.

First, pick the assignment unit, such as user, session, or request, based on how long the experiment must persist. Then choose the identifier for that unit, typically a cookie-based anonymous ID for logged-out users or a logged-in user ID when available. The server reads this identifier on each request and uses it as the deduplication key.

Next, define the allocation percentage (%) and bucket traffic deterministically. Deterministic bucketing ensures that the same identifier always selects the same variant, stabilizing experiences across pages and visits.

The server must persist the assignment using a cookie (with explicit domain and path scope), a response header, or a server-side store, and apply an expiry or TTL so assignments expire when the experiment ends. The server writes the assignment once and retrieves it on subsequent requests, creating a single source of truth.

Before assignment, define eligibility rules and exclusion rules to include only the intended audience and exclude bots, internal users, or conflicting tests. Establish fallback behavior so the server falls back to control or creates a new assignment if the identifier is missing or changes. For QA, allow an override to force a specific variant without changing the core logic.

The assignment must be decided before rendering, because rendering reads the chosen variant. Persisted assignments must also align with cache or CDN keys, or caches may mix variants.

Render the Chosen Version on the Server

Once the assigned variant exists, the rendering pipeline must use it as an explicit input to produce the server response/HTML returned on first delivery. The server reads the assignment at request start, selects the corresponding variant branch, and branches deterministically before composing the output.

Rendering then includes or excludes the correct template/component/block, as a full-page render or partial rendering, so the control output and variant output differ only where intended.

The assignment applies to the entire request context and must be kept consistent across all internal sub-requests that contribute to the page (for example, theme templates and plugin-rendered blocks in WordPress development).

The server prevents mixing by centralizing branch logic, isolates variant decisions to a single source of truth, and validates that all rendered fragments align before composing the final HTML.

Rendering must remain stable within the request scope. Do not flip variants mid-request; define clear fallback behavior to the control on error; and handle edge cases (such as logged-in vs. anonymous users) only if they are part of eligibility.

Keep within a small performance budget as a guardrail, but prioritize deterministic output so exposure logging, caching, and QA can rely on a stable, variant-specific response.

Log the Exposure

In server-side testing, exposure logging provides a reliable signal that a specific user was served a specific variant, protecting attribution from missing, duplicated, or delayed events. An exposure event confirms that the server selected a variant and delivered it in the response.

The logging trigger must occur after the server has committed to the variant and after that variant is rendered. The dependency is explicit: the server assigns a variant, renders it in the response, and emits the exposure event only then. Logging outside this point risks incorrect or incomplete counts.

Each exposure event should include a minimal, consistent schema: experiment identifier, variant identifier, a stable identifier (user ID, session ID, or hashed cookie), and a timestamp. Optional attributes include request context, assignment unit, correlation or request ID, and a deduplication key.

The server emits the exposure event to a durable destination such as an analytics event pipeline, experimentation platform endpoint, server logs, or a data warehouse. Deduplication is required: log once per exposure window, using a key derived from the experiment ID, variant ID, and stable identifier, to prevent inflated counts from retries or refreshes.

Finally, validate delivery, as the logging layer should retry on transient failures, handle latency explicitly, and minimize PII through hashed identifiers. In WordPress environments, where instrumentation varies by theme, plugin, hosting, and caching, server-side exposure logging provides a stable baseline independent of client execution and cache behavior, enabling accurate outcome measurement and guardrails for the next steps.

Measure Outcomes and Guardrails

Start by clearly separating outcomes from guardrails: outcomes measure improvement, while guardrails prevent harm. Before analysis, confirm that exposure logging exists and is used as the denominator for attribution. No outcome should be evaluated without being linked back to the exposure event.

Next, select one primary outcome metric directly tied to the test objective and define the outcome event precisely (e.g., conversion, revenue/order value, or a specific engagement event). Attribute outcomes to exposures using a defined attribution window (hours or days) and state how deduplication and data lag (minutes or hours) are handled, following principles commonly outlined in a web analytics guide. A secondary metric may be tracked only if it helps interpret the primary result without adding noise.

Define 2-4 guardrail metrics alongside the primary outcome. Guardrails represent measurable user or system risk and may include error rate, latency, bounce or exit rate (%), refunds or cancellations (%), or failed requests. Each guardrail must have an explicit threshold and a stop or rollback rule that triggers alerts and limits or reverses the experiment.

Set monitoring and alerting expectations before ramping traffic: who monitors, how often results are reviewed, and who owns incident response. During the run, continuously monitor guardrails to detect regressions early, while outcomes are reviewed on a fixed cadence to avoid reacting to statistical noise. Include lightweight checks, such as a sample ratio mismatch, to validate exposure consistency.

Finally, require segment breakdowns (device type, logged-in state, geography) to catch uneven impact. All outcomes and guardrails must be measurable using existing instrumentation in the WordPress website development environment, such as analytics events, server logs, and performance monitoring, so definitions match how data is actually emitted across themes, plugins, caching, and hosting layers.

Measurement definitions must be finalized before scaling traffic, as caching and rollout practices can otherwise distort attribution.

Handle Caching Correctly

Caching can override the experiment decision if it is not aligned with server-side assignment and rendering. If a cache reuses a rendered response without varying it based on the assignment indicator, it can serve the wrong variant, mix variants, or cause sticky assignments.

First, identify every caching layer in the request path: browser, CDN or edge, reverse proxy, page, and object cache. Determine where each layer caches the rendered response and whether it respects cookies or headers. Then decide the cache policy for the experiment scope: either bypass caching for experiment pages or responses, or allow caching only if it varies based on a stable assignment indicator (e.g., a cookie or header).

If caching is allowed, the cache key must vary by the variant or assignment indicator. Use cookie- or header-based cache variation and confirm that the CDN forwards and honors these values, since many CDNs ignore cookies by default unless configured.

Where supported, configure a vary header on the assignment indicator to isolate variants at the edge. Set a controlled TTL in seconds or minutes and define purge or invalidation rules when an experiment starts, changes, or ends.

Handle traffic states explicitly, as logged-in users often bypass page caches while anonymous users are heavily cached. Both paths must be checked to prevent variant leakage.

Validate before and during rollout by testing repeated refreshes and multiple users to confirm consistent variant delivery and correct cache hit/miss behavior. Correct caching is a prerequisite for reliable exposure logging and outcome measurement.

QA and Rollout

QA always comes before ramping traffic, because errors in experiment configuration, variant delivery, logging, or caching scale with exposure. Before rollout, the experiment is validated end-to-end to ensure the control and variant return the correct server-rendered output for the assigned cookie or header.

Assignment consistency is then verified across refreshes, repeat visits, and sessions, including both anonymous and logged-in flows. Where supported, a forced-variant override is used to directly compare responses and confirm stable assignment and rendering.

Measurement validation follows, as the exposure event/log must fire once per eligible user, match the expected schema, and correctly apply deduplication. Outcome events/metrics and guardrail metrics are confirmed by reproducing user actions and checking that events log the correct experiment and variant identifiers across key devices and browsers.

Cache safety is checked before traffic increases. The caching layer (application, hosting, or CDN) must not mix variants, cache keys must include the assignment signal, and a cache purge must return clean responses.

In WordPress website development, these checks are repeated across both the staging and production environments, where theme or plugin changes, as well as layered caching, can alter behavior.

Once QA passes, rollout proceeds as a canary or staged rollout. Traffic allocation starts at a small percentage with a defined monitoring window. Monitoring and alerting track exposure counts, outcome metrics, guardrail thresholds, error rate, latency, and data lag, after which traffic ramps in predefined steps.

Rollback criteria are defined upfront; any guardrail breach, logging failure, cache mixing, or error spike triggers rollback, disabling the experiment and falling back to control via a kill switch, ensuring measurement integrity remains stable as exposure increases.

Server Side TestingTools

Server-side testing tools provide the implementation layer that follows the setup process defined earlier. After teams decide what to test, where decisions happen, how variants are assigned, and how exposure and outcomes are logged, these tools standardize the pipeline so it does not need to be rebuilt for every experiment.

An experimentation platform centralizes server-side decisioning, variant assignment, delivery coordination, logging, measurement, and rollout controls, reducing redundant engineering work and the risk of inconsistent assignments, missed events, or unsafe releases.

Server-side testing relies on tightly coupled steps. Consistent server-side decisioning requires deterministic bucketing and assignment persistence, reliable exposure logging, outcome measurement with guardrail metrics, and controlled rollout under real caching and CDN behavior.

Server side testing tools bundle these dependencies into repeatable infrastructure, so experiments behave consistently across deployments and environments instead of relying on custom, test-specific code.

Tools can be evaluated using a shared set of practical criteria aligned with the experiment lifecycle:

- Integration surface: how the server SDK or API integrates with the hosting stack and deployment workflow.

- Decisioning model: where server-side decisions run, such as the application server, edge, or proxy.

- Assignment capability: deterministic bucketing and assignment persistence using a stable user or session identifier.

- Logging and events: exposure logging support, event schema control, and deduplication behavior.

- Measurement and guardrails: outcome measurement, guardrail metrics, and alerting for regressions.

- Rollout controls: feature flags, staged rollout, canary releases, and kill switches.

- Caching compatibility: cache-safe delivery, cookie or header-based variation, TTL awareness, and CDN behavior.

- Operational fit: observability, ownership, and ongoing maintenance within the team’s workflow.

Optimizely Feature Experimentation

Optimizely feature experimentation supports server-side experimentation through server SDK integration in backend services and APIs (integration_surface). It decides at request time using centralized server-side decisioning and assigns users via deterministic bucketing with percentage allocation and fallback-to-control (decisioning_model, assignment_capability).

Assignments persist using user or device identifiers to ensure consistency across requests (assignment/persistence). Each server renders logs a deduplicated exposure event for reliable attribution (logging_and_events).

Outcomes are measured against primary metrics with guardrail thresholds to surface regressions and data lag (measurement_and_guardrails). Features roll out through staged deployment with kill switches and instant disable controls (rollout_controls).

Cache-safe variation via headers or cookies, and TTL awareness, allow CDN layers to remain variant-safe (caching_compatibility). Decisions and events are monitored through SDK telemetry within tight latency budgets (operational_monitoring).

This makes Optimizely Feature Experimentation a practical choice for teams that need consistent server-side assignment, reliable exposure logging, and tightly controlled rollouts in environments with caching layers.

In WordPress hosting contexts, server-rendered output paths, cache/CDN behavior, and instrumentation should be validated before adoption.

LaunchDarkly Experimentation

LaunchDarkly Experimentation integrates with server-side stacks through dedicated server SDKs, allowing experiment decisioning to run inside backend application code.

Variant assignment uses deterministic bucketing tied to a stable identifier, with allocation and segmentation rules persisted so users are not re-bucketed across sessions. Each decision logs a single exposure event through LaunchDarkly’s event pipeline, with deduplication to preserve measurement accuracy.

Outcome measurement connects exposure events to defined metrics and guardrail thresholds, accounting for data lag. Rollout controls are built into the same workflow, supporting staged ramps, canary releases, kill switches, and fallback-to-control paths to mitigate risk during live experiments.

Because decisions occur on the server, caching and CDN layers must preserve variant-safe delivery via header or cookie variation, cache bypass, or controlled TTLs.

In WordPress website development environments, where server-rendered pages often pass through layered caching and uneven analytics instrumentation, maintaining consistent assignment and reliable exposure logging is critical when evaluating LaunchDarkly as an experimentation platform.

VWO Feature Experimentation

VWO Feature Experimentation supports server-side experimentation by integrating into backend services through an SDK-based integration surface. Its decisioning model runs on the server, selecting variants before response rendering to ensure deterministic delivery.

Variant assignment and persistence rely on deterministic bucketing tied to a stable identifier, with allocation and segmentation rules maintaining consistency across requests. Exposure logging records server-side exposure events via a defined event schema with deduplication, enabling reliable attribution despite data lag.

Measurement and guardrails allow outcome tracking alongside guardrail metrics, while rollout controls, such as segmentation, ramp steps, canary releases, kill switch, fallback-to-control, gating risk, and enabling rapid rollback.

Caching/CDN interaction must be configured as variant-safe, either by bypassing caches or by enforcing cache variation via a cookie or header with a controlled TTL (seconds). Operational monitoring focuses on observability and keeping decision-making within strict latency budgets.

In WordPress website development environments, where server-rendered pages are delivered through caching/CDN layers and mixed analytics instrumentation, these checks preserve assignment consistency, exposure logging integrity, and cache-safe delivery without treating VWO as a plugin solution.

Contact

Don't like forms?

Shoot us an email at [email protected]