A/A Testing: Guideline


A/A testing is a controlled experiment in which 2 identical variants are compared to confirm that the testing setup works correctly across assignment, tracking, and caching. Because the expected result is no real difference between variants, an A/A test validates the setup itself, not a change to the site.

Teams use A/A testing during WordPress website development because frequent changes in themes, plugins, caching layers, and tracking scripts can silently introduce bias, drift, or measurement errors that affect test integrity.

A/A testing is used to confirm that a testing setup is stable before running optimization tests. It differs from A/B testing in terms of when it should be used and the expected outcome. An A/A test is used before optimization to verify that measurement, assignment, and traffic splitting work correctly, while an A/B test is used to compare different variants to detect performance changes.

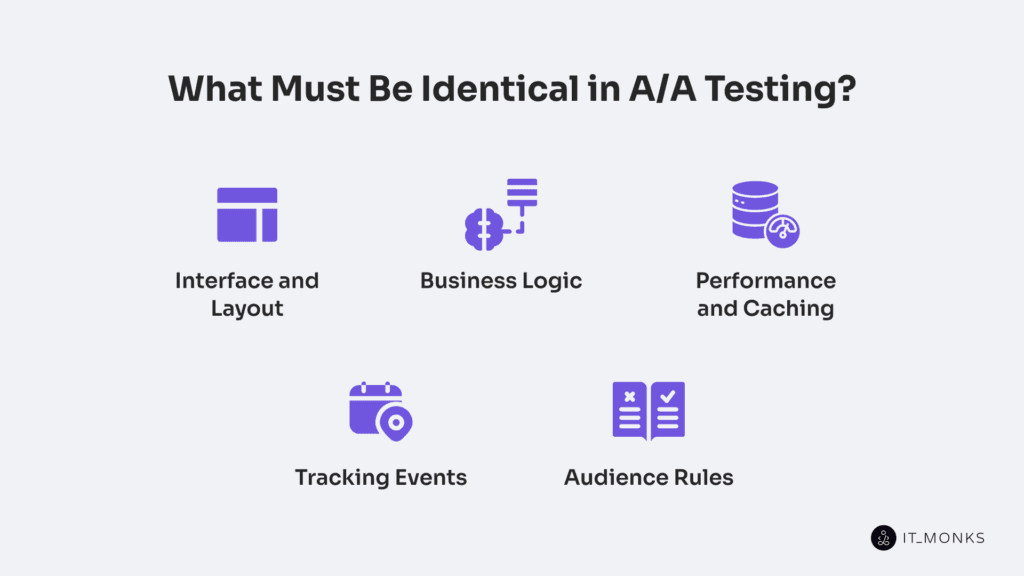

In A/A testing, variants must remain identical in interface, business logic, performance, caching behavior, tracking events, and audience rules. Users are assigned to variants by a consistent rule, and traffic is split evenly to avoid artificial differences. Results are evaluated using a primary metric, a guardrail metric, and a stability metric. The expected outcome is no difference, so any detected difference is treated as a possible false positive or a sign of a setup problem.

What is an A/A Test?

An A/A test is a type of experiment in which 2 or more variants are intentionally identical and compared to validate the experiment setup. In this test, no variant introduces any design, content, or logic change; equivalence is the core condition. Because the variants are the same, any observed difference is interpreted as arising from how the experimental system assigns, tracks, and measures users.

This type of test validates the integrity of the assignment, traffic split, tracking events, and metrics. When these components behave consistently, results should show no meaningful difference. When differences appear, they indicate noise, bias, or technical drift, often surfacing as false positives.

A/A testing functions as a diagnostic baseline to detect setup issues and estimate normal variance. In WordPress website development, this step is especially important because themes, plugins, caching layers, and analytics integrations can silently affect assignment consistency and event integrity. The expected outcome is stability and “no difference,” which makes this test a reliable checkpoint before running A/B tests.

What is the Difference Between A/A and A/B Test?

An A/A test and an A/B test differ by intent, variant relationship, and the type of risk they are designed to surface.

An A/A test compares identical variants to validate the experiment setup itself, by checking assignment consistency, traffic split, tracking events, and metric stability. The expected outcome is no difference, and a problem appears as a false positive due to instrumentation noise, caching, or audience rules in the experiment setup.

An A/B test, by contrast, compares different variants to detect and measure the effect of a change. The expected outcome is a measurable difference, and a problem appears when system noise hides, exaggerates, or misattributes that effect.

Because both tests use the same assignment and tracking infrastructure, an A/A test is run first to verify system integrity. Any difference in A/A indicates noise or setup errors, while differences in A/B are attributed to a change.

This distinction is foundational to A/B testing basics and how to read A/B results, because it separates measurement reliability from actual performance impact. This is especially important in WordPress, where caching, plugins, and theme rendering can create false variation.

When Should You Use A/A Testing?

A/A testing is used when you need to validate the measurement system before trusting experiment results. Its role is to confirm that identical experiences produce statistically indistinguishable outcomes, so any future difference can be attributed to real change, which is a prerequisite in our CRO guide.

Run A/A testing after changes to tracking events or instrumentation, when measurement integrity may no longer be guaranteed. In WordPress website development, updates to analytics plugins, tag managers, themes, or custom event code can alter how events fire or how sessions are counted. A/A testing verifies tracking integrity and establishes a stable baseline before interpretation.

Apply A/A testing when the experiment setup changes. New experiment configurations, first-time launches, or refactored test frameworks should be validated to confirm that the setup can measure “no difference” without introducing noise or false positives.

A/A testing can be used when the assignment method, audience rules, or traffic split changes. Modifications to user-, session-, or device-based assignment, as well as audience-targeting logic, can create mismatches in who sees what. A/A testing checks assignment consistency and audience determinism.

Run A/A testing after changes to caching or performance layers. CDN rules, server-side caching, object caching, or WordPress performance plugins can affect response timing or content delivery. A/A testing confirms that performance differences are not leaking into metrics.

Use A/A testing when metrics show unexplained drift, anomalies, or instability. Sudden shifts without a product change often signal measurement noise, data inconsistency, or increased false positives. A/A testing helps detect and isolate these issues.

What Must Be Identical in A/A Testing?

Interface and Layout

Interface and layout must remain identical across A/A testing variants, as A/A testing assumes there are no user-visible differences. Each variant must render the same interface and layout so that any measured difference cannot be attributed to UI rendering. Even minor interface changes can alter user behavior or event firing, creating a confounder and producing false positives.

At the first layer, the rendered structure must match exactly: the same DOM structure, the same element hierarchy, and the same content placement. Theme and template output must align so headings, blocks, containers, and component order remain equivalent. Structural drift alters visibility and scan patterns, potentially affecting interaction paths and tracking.

At the second layer, visual styling must remain identical as well. CSS rules, fonts, spacing, colors, and layout grids should match across variants. Responsive behavior must also be preserved so layouts adapt the same way across viewports and devices. Styling differences break visual consistency and can change user perception.

At the third layer, interactive behavior should remain consistent. Interactive elements must initialize and behave stably, including hover and focus states and the default component state at load. An identical client-side state ensures that users see the same interaction surface, supporting stable event tracking.

Within WordPress website development, interface and layout drift commonly comes from theme templates, block rendering, plugin-injected UI, or front-end asset loading order. Controlling interface and layout removes this major confounder, so that any remaining difference in an A/A test can be traced to assignment, tracking, or audience rules.

Business Logic

Business logic must be identical across A/A test variants so that the variants are functionally equivalent. Business logic is the rule-driven layer that controls data retrieval, rule and condition evaluation, content and personalization selection, permission checks, integration responses, and backend side effects.

Identical variants require that the same inputs produce the same outputs, that rules execute in the same order, and that state changes remain equivalent. Data retrieval results, personalization decisions, roles and permissions, integration and API responses, and backend side effects such as writes or triggered processes must match across variants.

In WordPress website development, business logic can differ without visible interface changes. Plugin settings or versions, theme functions using hooks or filters, feature flags, role-based rules, and environment-specific configuration can cause logic drift and non-deterministic behavior.

When business logic differs between variants, the difference becomes an uncontrolled confounder. Variants may produce different data outputs or tracking events, creating a false positive in a test intended to show no difference.

Performance and Caching

Performance and caching must match across test variants, as A/A testing assumes no meaningful differences in delivery. This requirement is especially strict in server-side testing, where variant selection and rendering happen before the response is cached or delivered. Performance covers response time, render time, and server response behavior; caching defines what is stored and served again.

Identical variants must deliver the same assets, execute the same backend work, and show equivalent latency and timing. At the caching layer, this requires identical cacheability, identical cache keys and variation rules, and identical invalidation behavior.

Caching can create unintended variation when a cache layer serves different content or timing. Page cache, object cache, CDN, and browser cache rely on cache keys; if those keys vary by variant, cookie, header, device, or URL parameter, one variant may receive a cache hit while another receives a cache miss. This changes delivery speed or rendered output, introducing a delivery-level confounder.
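
The cache-key concern can be sketched as follows. This is a minimal illustration in Python, not WordPress or CDN configuration; the function `cache_key`, the `CONTENT_PARAMS` allow-list, and the parameter names `variant` and `utm_source` are all assumptions made for the example. It shows one way to keep experiment and tracking parameters out of the key so that identical variants share the same cache entry.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit

# Hypothetical allow-list: only these query parameters change the page content.
CONTENT_PARAMS = {"page", "lang"}


def cache_key(url: str, device_class: str) -> str:
    """Build a cache key that ignores experiment and tracking parameters."""
    parts = urlsplit(url)
    # Keep only content-relevant parameters, in a stable order.
    kept = sorted((k, v) for k, v in parse_qsl(parts.query) if k in CONTENT_PARAMS)
    return f"{device_class}|{parts.path}?{urlencode(kept)}"


# Two requests that differ only by variant and tracking parameters.
key_a = cache_key("https://example.com/pricing?variant=A&utm_source=x&page=2", "mobile")
key_b = cache_key("https://example.com/pricing?page=2&variant=A2", "mobile")
print(key_a == key_b)
```

If the two keys differed, one variant could hit a warm cache while the other triggers a fresh render, which is the delivery-level confounder described above.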

Performance differences can also distort event timing. Faster rendering can increase interactions, while slower responses can delay, reorder, or suppress events. These effects reduce measurement integrity, weaken metric stability, and may appear as a false positive in an A/A result.

In WordPress website development, performance and caching commonly drift due to page and object cache configuration, CDN behavior, browser cache headers, and changes to asset delivery from minification, compression, image optimization, lazy loading, and server configuration.

When variant delivery remains consistent across all cache layers, observed differences are easier to attribute to assignment, tracking, or audience rules.

Tracking Events

Tracking events should match exactly across A/A testing variants because they are the measurement layer that feeds all metrics. Tracking events are the recorded signals used to measure behavior, including page views, clicks, form submits, purchases, and custom interactions.

In an A/A test, identical variants must generate identical tracking events: the same event name, event schema, event trigger conditions, event parameters/properties, event timing, deduplication rule, and delivery method through the analytics pipeline and data layer. This level of precision reflects the event standards described in a web analytics guide.

Any mismatch at this layer breaks measurement integrity and can create a false positive, even when the user experience is unchanged, because metrics differ due to event loss, delay, or double-counting.

Event consistency has 2 required parts: event definition determines what the event is and which data it carries for attribution, while event execution determines when the event fires, how it is sent, and how duplicates are handled.

In WordPress website development, event tracking often drifts due to plugin-injected scripts, theme changes that move trigger elements, tag manager updates, consent rules that block or delay scripts, and front-end loading order that alters timing. Keeping tracking events stable removes a major measurement confounder, so later A/A results meaningfully reflect metric stability.
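
The split between event definition and event execution can be checked with a small parity report. The sketch below is in Python for illustration and assumes a hypothetical event shape (a dict with `id`, `name`, and `params`); real analytics exports will look different. It compares the set of event definitions seen in each variant and lists repeated event ids, which hint at a broken deduplication rule.

```python
from collections import Counter


def event_signature(event: dict) -> tuple:
    """Reduce an event to its definition: name plus sorted parameter names."""
    return (event["name"], tuple(sorted(event.get("params", {}))))


def duplicate_ids(events: list) -> list:
    """Return event ids that appear more than once."""
    counts = Counter(e["id"] for e in events)
    return [event_id for event_id, n in counts.items() if n > 1]


def compare_variants(events_a: list, events_b: list) -> dict:
    """Report definition mismatches and duplicate ids for two variants."""
    sigs_a = {event_signature(e) for e in events_a}
    sigs_b = {event_signature(e) for e in events_b}
    return {
        "only_in_a": sigs_a - sigs_b,
        "only_in_b": sigs_b - sigs_a,
        "duplicate_ids_a": duplicate_ids(events_a),
        "duplicate_ids_b": duplicate_ids(events_b),
    }
```

An empty report on all four keys is the expected A/A outcome; any entry points at a definition or execution mismatch to investigate before reading metrics.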

Audience Rules

Audience rules must be identical across A/A variants so both sides compare equivalent user populations. In A/A testing, these rules control who can enter the test and how users are grouped, making them a core requirement for valid “no difference” results.

The eligibility rule qualifies users based on logged-in vs logged-out state, returning vs new users, or user role. In a WordPress website development environment, membership plugins and role-based access commonly affect eligibility, so both variants must apply the same criteria.

The exclusion rule filters out bots, internal traffic, administrators, and QA accounts. Any difference in exclusion logic introduces sample bias by allowing non-representative traffic into one variant.

Each segment divides qualified users by device type, geo or language rules, login state, or role. Identical segmentation is required to prevent population mismatch that can surface as a false positive in an A/A test.

Cookie and consent state gate participation and tracking. In WordPress setups, consent banners and personalization plugins can block measurement for some users; matching consent gating across variants keeps inclusion and tracking consistent.

Aligned eligibility, exclusion, segmentation, and consent logic remove a major confounder from A/A testing and support stable assignment, stable metrics, and a valid “no difference” interpretation.
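
One way to keep these rules aligned is to evaluate them once, before assignment, in a single function that never looks at the variant. The sketch below is a Python illustration; the `Visitor` fields and the `EXCLUDED_ROLES` set are hypothetical and would map to whatever the site's membership, consent, and bot-detection layers expose.

```python
from dataclasses import dataclass

# Hypothetical roles treated as internal or QA traffic.
EXCLUDED_ROLES = {"administrator", "editor"}


@dataclass(frozen=True)
class Visitor:
    is_bot: bool
    is_internal: bool
    role: str          # "" for anonymous visitors
    has_consent: bool


def is_eligible(visitor: Visitor) -> bool:
    """Apply exclusion, role, and consent rules; the variant is never an input."""
    if visitor.is_bot or visitor.is_internal:
        return False
    if visitor.role in EXCLUDED_ROLES:
        return False
    return visitor.has_consent
```

Because the function takes no variant argument, both sides of the test are filtered by exactly the same logic by construction.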

A/A Test Variants Assignment

A/A test variant assignment defines the allocation rule that maps each visitor to a variant, typically Variant A or an identical copy of it, and keeps that mapping consistent across repeat visits.

If assignment switches or drifts, imbalance or hidden bias can appear and mimic a real effect. In WordPress website development, where users return across sessions and devices, assignment consistency is critical for test reliability.

The purpose of the variants assignment in an A/A test is to validate the assignment system itself: ensure balanced allocation, prevent switching, and avoid bias tied to the chosen identity unit.

Assignment can be anchored to one of 3 identity units. User-based assignment persists allocation per user across visits. Session-based assignment keeps allocation consistent only within a session. Device-based assignment persists allocation per device.
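
A common way to get consistent assignment is deterministic hashing of the identity unit. The sketch below is a Python illustration under stated assumptions: `assign_variant`, the variant labels `A1` and `A2`, and the experiment name are hypothetical, and `unit_id` stands for whichever anchor is chosen (a user id, a session id, or a device or cookie id).

```python
import hashlib


def assign_variant(unit_id: str, experiment: str, variants=("A1", "A2")) -> str:
    """Map an identity unit to a variant deterministically."""
    # Salting with the experiment name keeps buckets independent across tests.
    digest = hashlib.sha256(f"{experiment}:{unit_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # uniform value in [0, 1)
    return variants[int(bucket * len(variants))]


# The same unit always receives the same variant, with no stored state.
print(assign_variant("user-42", "aa-check") == assign_variant("user-42", "aa-check"))
```

The choice of `unit_id` decides the behavior described in the sections that follow: a user id survives across sessions and devices, a session id changes on each visit, and a device id is stable only within one browser or device.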

User-Based Assignment

User-based assignment is the variant allocation method in which the user serves as the stable anchor in an A/A test. The system assigns a variant once per user identity and reuses that assignment on subsequent visits, ensuring cross-session consistency and preventing reassignment on each request.

User-based assignment is used in A/A testing because validating “no difference” depends on consistent assignment over time. When the same user maps to the same variant, assignment stability persists, switching and mixed exposure are reduced, and assignment-driven noise is minimized without introducing bias.

User identity determines correctness: a logged-in user or stable visitor identity supports persistence, while a missing or fragmented identity can cause drift, switching, and measurement noise.

By reducing assignment-driven variance, user-based assignment improves A/A test reliability, especially in WordPress website development, where users return and interact across multiple pages.

Session-Based Assignment

Session-based assignment uses the session, meaning a bounded visit window, as the assignment anchor in an A/A test. A variant is assigned at the start of the session and must remain stable throughout the visit to ensure within-session consistency; once the session ends, a new assignment may occur.

Session-based assignment is used when long-term user identification is weak or unavailable, which is common in WordPress website development with many anonymous visitors. The tradeoff is cross-session switching: a return visit from the same person may receive a different variant, increasing assignment noise for metrics influenced by repeat visits.

Device-Based Assignment

Device-based assignment ties the variant to a device, so the same device receives the same variant across repeat visits. In an A/A test, “no difference” expectations rely on consistent exposure, and a device identity signal provides that anchor when user identity is anonymous or unreliable.

Such a condition is often encountered in WordPress environments with mixed login states. The assignment remains stable across repeat visits in the same browser or device, without requiring accounts or logins.

This approach is used when repeat-visit consistency is needed but user-level continuity cannot be guaranteed, for example with anonymous, cookie-based browsing. It has 2 limits. Cross-device behavior allows the same person to receive different variants on different devices, such as mobile and desktop, which increases noise. Shared devices can mix multiple people into one assignment, which introduces bias at the person level.

For A/A test reliability, device-scoped stability supports “no difference” interpretation for device-level metrics, but cross-device switching and shared-device use define the boundary where assignment noise affects results and measurement interpretation.

A/A Test Traffic Splitting

Traffic splitting defines how eligible traffic is distributed between identical variants in an A/A test, usually expressed as a percentage split of total exposure. In A/A testing, the expectation of no meaningful difference depends on balanced variant exposure over time; uneven or time-skewed traffic can introduce time-based bias, increase noise, and raise the risk of false positives, even when variants are identical.

An equal split allocates the same traffic share to each variant from the start to maintain immediate balance and comparability. A ramped split gradually increases traffic share over time to control exposure and validate stability as volume grows. Both approaches mirror traffic allocation patterns commonly used in a split test, even though the variants themselves remain identical.

In WordPress website development contexts, where traffic can vary over time, across devices, and across caching layers, choosing the appropriate split helps keep exposure fair and measurements consistent.

Traffic splitting controls how much traffic each variant receives, while variant assignment controls who goes to which variant; keeping these roles distinct supports unbiased exposure and reliable interpretation.

Equal Split

An equal split defines the default traffic distribution in an A/A test, where each identical variant receives the same share of traffic throughout the test. An equal split distributes traffic share evenly and keeps variant exposure balanced across time, devices, and request paths.

Balanced distribution improves comparability and metric stability by reducing time-based bias and other sources of noise. Because an A/A test expects no meaningful difference, an equal split helps ensure that any observed movement reflects system variability, lowering the risk of a false positive.

Equal split supports the A/A goal of assessing measurement integrity by maintaining stable exposure balance in WordPress website development environments, where traffic patterns and caching layers can shift behavior. Equal split controls how much traffic each variant receives, while assignment determines who receives which variant and must keep exposure consistent to preserve balance.
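
Whether an equal split actually held can be checked with a sample ratio mismatch test. The sketch below, in Python with only the standard library, applies a chi-square goodness-of-fit test with 1 degree of freedom to the observed exposure counts; the function name `srm_p_value` and the example counts are assumptions for illustration.

```python
import math


def srm_p_value(count_a: int, count_b: int, expected_share_a: float = 0.5) -> float:
    """P-value for the observed split under the intended traffic share."""
    total = count_a + count_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((count_a - expected_a) ** 2 / expected_a
            + (count_b - expected_b) ** 2 / expected_b)
    # Survival function of chi-square with 1 degree of freedom.
    return math.erfc(math.sqrt(chi2 / 2))


print(srm_p_value(5030, 4970))  # small imbalance
print(srm_p_value(5000, 5400))  # large imbalance
```

A very small p-value suggests the split itself is broken, for example by caching or redirect loss, and metric comparisons should wait until that is explained.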

Ramped Split

A ramped split defines a traffic distribution pattern in which the traffic share allocated to the second, identical variant increases gradually over time.

In an A/A test, ramped split controls exposure through stepwise increases, which supports early monitoring and validation in WordPress website development environments where caching, plugin scripts, analytics, and site load can introduce instability.

Ramped split reduces early noise and limits false positive risk by keeping initial exposure small, but it also creates a time pattern in traffic share that can introduce time-based bias if conditions change during the ramp.

Ramped split affects how much traffic each variant receives over time, while assignment logic continues to control which users receive which variant, and this boundary must be maintained to preserve stability and keep results comparable.
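
The boundary between ramp schedule and assignment can be sketched by giving each unit a fixed hash bucket and letting only the threshold move. This is a Python illustration; the `RAMP_STEPS` schedule, the day numbering, and the labels `A1` and `A2` are hypothetical values, not recommendations.

```python
import hashlib

# Hypothetical schedule: (first day of step, traffic share of the second variant).
RAMP_STEPS = [(0, 0.05), (3, 0.20), (7, 0.50)]


def share_on_day(day: int) -> float:
    """Return the second variant's traffic share for a given test day."""
    share = 0.0
    for start_day, step_share in RAMP_STEPS:
        if day >= start_day:
            share = step_share
    return share


def bucket(unit_id: str) -> float:
    """Fixed position of a unit in [0, 1); it never changes during the ramp."""
    digest = hashlib.sha256(unit_id.encode("utf-8")).hexdigest()
    return int(digest[:8], 16) / 0x100000000


def variant_on_day(unit_id: str, day: int) -> str:
    """Units below the current threshold see the second variant."""
    return "A2" if bucket(unit_id) < share_on_day(day) else "A1"
```

With this design, raising the share only moves units from `A1` to `A2`, never back, so a unit already exposed to the second variant keeps it for the rest of the ramp.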

A/A Test Measurements

A/A test measurements define how an A/A test is evaluated to confirm that identical variants produce no meaningful difference. Measurements compare results against an expected baseline to verify consistency and ensure that any observed variance is explainable as noise. In A/A testing, measurements validate system integrity by confirming that tracking, assignment, and data collection behave as expected.

Measurements are required because identical variants should align closely, and only defined metrics can indicate whether differences stay within expected bounds. Without measurements, noise can be misread as a signal, leading to false positives, especially in WordPress website development environments where plugins, caching, or analytics changes can affect data quality.

A primary metric tracks the main outcome to confirm alignment across variants. A guardrail metric monitors system health to detect unintended shifts that signal broken tracking or configuration issues. A stability metric confirms that results remain consistent over time and detects drift.

Measurements depend on reliable tracking events, consistent exposure, and stable assignment rules defined earlier, and they support correct interpretation by reducing noise and protecting against false positives.

Primary Metric

The primary metric represents the main measurement attribute used to validate an A/A test. In this context, it checks whether identical variants show no difference beyond normal noise, confirming a stable baseline expectation and overall measurement integrity.

Across variants, the primary metric must stay aligned and comparable. Minor fluctuation is normal, but sustained gaps flag setup noise. Such misalignment typically points to issues in event tracking, variant assignment, audience rules, or delivery differences such as caching, and it increases the risk of false positives in subsequent A/B tests.

Because the metric is calculated from recorded actions, it depends directly on tracking events. Within WordPress website development, changes to themes, plugins, or caching layers frequently affect how events fire, making the primary metric a reliable signal for detecting inconsistencies or drift in measured user behavior.

Typical primary metrics for WordPress sites are event-based and stable by design: conversion rate, form or checkout completion rate, click-through rate on the main CTA, revenue per visitor or average order value (when revenue tracking is reliable), and key-event completion rate. The selected primary metric acts as the headline A/A check, while guardrail metrics capture side effects and stability metrics monitor longer-term drift.
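
For a rate-type primary metric such as conversion rate, one common way to judge whether a gap exceeds normal noise is a two-proportion z-test. The sketch below uses only the Python standard library; the function name and the example counts are assumptions, and it assumes at least one conversion and one non-conversion overall so the standard error is not zero.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_a - rate_b) / std_err
    # Two-sided tail probability of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


z, p = two_proportion_z_test(conv_a=500, n_a=10000, conv_b=520, n_b=10000)
print(round(z, 2), round(p, 2))
```

In an A/A test a large p-value is the expected result; a small one is a prompt to inspect tracking, assignment, audience rules, and caching before concluding anything about users.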

Guardrail Metric

The guardrail metric defines the safety and sanity checks that protect an A/A test from silent failures. It monitors whether identical variants behave identically across the system, even when the primary metric shows no difference. The baseline expectation is strict alignment: guardrails should remain stable and consistent across variants.

Guardrail metrics protect interpretation by detecting side effects that the primary metric cannot reveal. While the primary metric validates the main signal, a guardrail metric validates measurement integrity and data quality. Tracking integrity depends on guardrail metrics to confirm that events, delivery, and attribution behave consistently, preventing noise, drift, or confounders from invalidating results.

In A/A testing, a guardrail difference does not indicate a winner or loser. A guardrail gap signals setup or execution problems, such as tracking errors, performance or caching drift, audience rule mismatches, or event definition issues, that can create false positives while the primary metric appears stable.

Within WordPress website development, guardrail metrics are critical because plugins, themes, caching layers, consent logic, and third-party scripts can introduce unintended side effects between identical variants.

Typical guardrail metric categories include:

- Error rate (HTTP, JavaScript, form, or checkout failures)

- Latency/page load (response time or key load timing)

- Event loss or volume sanity (unexpected drops or spikes)

- Bounce/exit behavior (only when event-backed and consistent)

- Checkout or form failure rate

- Consent or blocking signals

Alignment validates system reliability; divergence flags instability. Stability metrics then assess drift over time, while guardrails enforce one rule: do not break measurement.
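
A guardrail comparison can be as simple as checking the relative gap between variants for each guardrail reading. The sketch below is a Python illustration; the metric names and the 10% tolerance are hypothetical, and a real threshold would depend on traffic volume and how noisy each metric normally is.

```python
def guardrail_gaps(metrics_a: dict, metrics_b: dict, max_relative_gap: float = 0.10) -> dict:
    """Return guardrails whose relative gap between variants exceeds the tolerance."""
    gaps = {}
    for name in metrics_a.keys() & metrics_b.keys():
        a, b = metrics_a[name], metrics_b[name]
        baseline = max(abs(a), abs(b))
        if baseline == 0:
            continue  # both readings are zero, so there is no gap
        gap = abs(a - b) / baseline
        if gap > max_relative_gap:
            gaps[name] = round(gap, 3)
    return gaps


variant_a = {"error_rate": 0.010, "p75_load_ms": 1200, "events_per_session": 4.0}
variant_b = {"error_rate": 0.011, "p75_load_ms": 1650, "events_per_session": 4.1}
print(guardrail_gaps(variant_a, variant_b))
```

A flagged guardrail does not name a winner; it marks where delivery or measurement differs between variants that should be identical.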

Stability Metric

A stability metric is a measurement used in an A/A test to check consistency over time and detect drift. In an A/A test, identical variants should produce stable, repeatable results across variants and time windows; a stability metric checks variance, distribution shape, and event timing to confirm the “no meaningful difference” expectation.

Trend breaks, spikes, or uneven distributions indicate drift and typically reveal instrumentation changes, assignment instability, audience rule shifts, caching or performance effects, or data latency. In WordPress website development, stability metrics help identify time-based bias introduced by releases, plugin updates, caching changes, traffic shifts, or tracking delays.

Typical stability metric categories include:

- Event volume stability: key event counts remain steady over time.

- Assignment balance stability: variant exposure stays consistent across time windows.

- Metric distribution stability: result distributions do not shift unexpectedly.

- Tracking latency stability: event arrival timing remains consistent.

- Data completeness stability: no sudden drops or spikes from missing or duplicated events.

A stability metric validates A/A test interpretation by confirming data quality and assignment consistency, reducing false positives, and ensuring that “no difference” reflects a reliable system state.
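
Assignment balance stability, one of the categories above, can be sketched as a per-window check of the traffic share. This Python illustration assumes daily exposure counts are available as `(label, count_a, count_b)` tuples; the function name and the 2-point tolerance are hypothetical.

```python
def unbalanced_windows(daily_counts: list, tolerance: float = 0.02) -> list:
    """Return time windows where variant A's share drifts from 50% beyond tolerance."""
    flagged = []
    for label, count_a, count_b in daily_counts:
        total = count_a + count_b
        if total == 0:
            continue  # no exposure in this window
        share_a = count_a / total
        if abs(share_a - 0.5) > tolerance:
            flagged.append((label, round(share_a, 3)))
    return flagged


counts = [("mon", 1000, 1010), ("tue", 900, 1100), ("wed", 0, 0)]
print(unbalanced_windows(counts))
```

Windows that are flagged only around a release, plugin update, or cache purge point to time-based bias, even when the overall split looks balanced.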

A/A Test Interpretation

What Does “No Difference” Mean?

In A/A testing, no difference means there is no meaningful gap between variants in the selected metric beyond normal noise when results are compared against the same baseline. Because variants are identical, aligned metrics are expected, and small variations reflect random variance.

From an A/A test interpretation perspective, no difference indicates system stability. Stable results suggest that assignment consistency, tracking events, and audience rules remain aligned and are not creating artificial separation between variants. This outcome supports measurement integrity by showing the system does not generate false signals on its own.

This result depends on several conditions staying consistent: identical variants, reliable assignment, stable audience rules, consistent tracking events, and predictable performance and caching behavior. In WordPress website development, small metric shifts can still occur due to plugins, caching layers, or scripts, but these shifts remain random and non-repeatable when the system is behaving correctly.

Importantly, no difference does not mean zero change in every number. It means the metric does not form a stable, repeatable separation over time, reflecting consistent system behavior rather than hidden bias or measurement error.

What is a False Positive?

A false positive in an A/A test means the test reports a difference between identical variants even though no real effect exists. Because identical variants should produce no difference, any apparent gap is a spurious, misleading signal.

A false positive comes from noise or bias. Noise is normal random variation that can create unexpected differences in limited or uneven data; with a conventional 5% significance threshold, roughly 1 in 20 A/A comparisons will cross it by chance alone even when the setup is sound. Bias can come from setup issues such as unstable assignments, inconsistent tracking events, mismatched audience rules, or differences in performance/caching. These factors act as confounders, inflating differences and reducing measurement integrity.

In interpretation, a false positive signals caution. The result suggests instability or drift in the setup. In WordPress website development, themes, plugins, caching, and injected scripts increase bias risk, making strict controls over assignment, tracking, audience rules, and caching/performance essential.
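
How often noise alone produces a "significant" A/A result can be estimated by simulation. The sketch below, in Python with only the standard library, draws two groups from the same conversion rate many times and counts how often a two-proportion z-test falls below the threshold; the run count, sample size, base rate, and seed are arbitrary assumptions chosen to keep the example fast.

```python
import math
import random


def p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value from a two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0
    z = (conv_a / n_a - conv_b / n_b) / std_err
    return math.erfc(abs(z) / math.sqrt(2))


def false_positive_rate(runs=1000, n=500, rate=0.1, alpha=0.05, seed=7) -> float:
    """Share of simulated A/A tests that look significant by chance."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(runs):
        # Both groups are drawn from the same true conversion rate.
        conv_a = sum(rng.random() < rate for _ in range(n))
        conv_b = sum(rng.random() < rate for _ in range(n))
        if p_value(conv_a, n, conv_b, n) < alpha:
            hits += 1
    return hits / runs


print(false_positive_rate())
```

In a healthy setup the simulated rate should land near the chosen threshold. A real A/A program that flags differences much more often than that points to bias in the setup, not to noise.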
