Enterprise Dedicated Server Hosting


Enterprise dedicated server hosting establishes the operational backbone of high-performance enterprise websites by replacing generic shared environments with virtualization-free, bare-metal servers engineered for exclusive resource control.

In enterprise website development, where uptime stability, security integrity, and configurability are non-negotiable, dedicated infrastructure replaces shared resource models to meet the demands of mission-critical hosting operations.

As enterprise-grade hosting, this server model provides root access and complete hardware-level isolation, allowing technical teams to configure ECC memory, RAID-backed storage arrays, and hyper-threaded CPU cores without hypervisor interference.

Each component, whether a dedicated Intel Xeon processor cluster operating at 3.6 GHz or a scalable 512 GB DDR4 RAM layout, is allocated against defined performance thresholds. The result is a performance-locked configuration, tuned to sustain complex application execution, multi-regional traffic surges, and integration-heavy backend services.

The architectural framework of enterprise dedicated server hosting supports not only maximum throughput but also strategic alignment with security-first compliance requirements. DDoS mitigation layers (L3/L4), physical firewalls, and private VLAN segmentation work in tandem with root-level administrative controls to deliver defense-in-depth for sensitive enterprise data.

Enterprise dedicated server hosting also supports alignment with standards such as ISO 27001 or SOC 2 Type II, where physical isolation and layered threat mitigation help satisfy the security controls required for certified operations. These measures are backed by SLA uptime guarantees, with 99.9% minimum thresholds and optional premium tiers offering up to 100% SLA coverage.

Enterprise dedicated server hosting scales both vertically, by expanding CPU/RAM/storage resources, and horizontally, through distributed node integrations, to support elastic growth patterns of enterprise websites.

The structure is modular, enabling component-level adjustments to hardware, bandwidth, and security layers, granting enterprises the customization and performance certainty needed for predictable deployment pipelines, high-frequency CI/CD operations, and traffic-intensive service availability. As such, its value is foundational to enterprise website development, ensuring full control over latency, compliance, and continuous service availability.

What is Enterprise Dedicated Server Hosting?

Enterprise dedicated server hosting is defined by its single-tenant server architecture, where a physical server is exclusively allocated to a single organization. This configuration ensures full hardware exclusivity and complete isolation across CPU, memory, and storage subsystems.

Dedicated server hosting consists of bare-metal infrastructure with no resource sharing, granting enterprise users uninterrupted access to compute power, data throughput, and configuration controls essential for high-load operational continuity.

The hosting environment provides root-level administrative access, which facilitates unrestricted control over the operating system, middleware stack, and security configurations.

Dedicated server hosting for enterprises supports custom deployment flexibility by enabling tailored kernel modifications, automated provisioning scripts, and environment-specific configurations. This flexibility allows enterprises to align hosting environments with proprietary software architectures and regulatory compliance frameworks.

The platform operates with strict SLA-based performance guarantees, reinforcing stability for workload execution under sustained demand. These SLAs typically commit to 99.9% uptime minimums, ensuring consistent accessibility for enterprise websites under production loads.

The hosting model integrates fault-tolerant redundancy at the data center level, preserving data integrity during hardware migrations and unexpected system failures.

These infrastructure features collectively enable secure, performance-optimized deployment of enterprise websites by ensuring stable workload execution, regulatory compliance, and operational continuity at scale.

Enterprise dedicated server hosting supports business-critical workloads by structurally eliminating multi-tenant interference. It enables horizontal and vertical scaling for resource-demanding, distributed enterprise applications, and its single-tenant isolation supports the regulatory requirements built into enterprise website development and deployment frameworks.

Performance Capacity of Enterprise Dedicated Hosting

Enterprise dedicated server hosting delivers performance capacity as a deterministic, quantifiable attribute that governs the operational resilience of enterprise websites and supports the high-volume compute operations that enterprise website development requires at scale. This capacity comes from exclusive access to physical compute resources without virtualization overhead, so compute-intensive workloads run with consistent precision. Because those workloads execute on dedicated compute cycles, the scheduling conflicts common in shared environments are eliminated, and resource isolation provides the predictable execution windows needed to maintain processing continuity under enterprise load.

As a composite performance property driven by hardware provisioning, performance capacity directly regulates application responsiveness, concurrency throughput, and operational continuity under high demand. It is defined by two primary resource vectors: CPU allocation and RAM availability. In entity-attribute-value (EAV) terms, performance capacity is a hardware-defined attribute of enterprise dedicated server hosting whose values, the CPU and RAM allocations, are provisioned per deployment unit to meet specific performance tiers.

CPU allocation determines the host’s ability to process simultaneous threads through multi-threading, sustain high clock speeds, and leverage elevated core counts. This combination maximizes thread concurrency, which directly improves enterprise-grade application throughput during critical load windows and enhances overall processing efficiency.

RAM availability governs memory throughput, which is characterized by memory bandwidth and RAM latency. Within enterprise dedicated server hosting, dedicated memory minimizes latency in active data retrieval and maximizes bandwidth for simultaneous caching operations, giving hardware-level control over how quickly the server responds to active data calls and process caching.

Together, these hardware-bound dimensions form the core performance profile of dedicated hosting. Their allocation ratios determine concurrency support, task isolation, and the efficiency with which the server handles enterprise processes under load, enabling uninterrupted delivery of high-load digital operations.

Performance capacity of enterprise dedicated server hosting, therefore, regulates the foundational compute behavior that enterprise websites depend on to enable deterministic responsiveness, improve compute efficiency, and sustain operational stability across high-load usage tiers.

CPU Allocation

CPU allocation is a core hardware attribute of enterprise dedicated server hosting that directly influences the system’s ability to execute concurrent processes and maintain low-latency performance for enterprise websites. Because the processor is dedicated, the enterprise client does not share processor time, threads, or instruction cycles with other tenants, which removes the virtualization overhead that fragments compute stability.

CPU allocation in this context refers to discrete processor core counts, thread concurrency ratios, and operational frequency measured in gigahertz, each assigned directly and exclusively to one hosting environment.

Dedicated CPU cores, commonly ranging from 8 to 32 per instance, map computational loads to uninterrupted execution slots. When these cores are paired with simultaneous multithreading (e.g., 16 threads from 8 cores), the architecture handles parallel request queues with minimal risk of thread starvation.

Clock speeds, typically between 2.4 GHz and 3.8 GHz depending on the processor model, set how many cycles each core completes per second; together with instructions per cycle, they determine how quickly instructions are processed across those threads. The outcome is consistent backend response time under transaction-heavy workloads.

Thread allocation is particularly critical for enterprise websites that perform intensive API orchestration, ingest continuous data flows, or execute batch logic in real time. By pinning threads to specific cores, the hosting environment sustains process isolation and mitigates latency spikes during concurrent task execution.
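Pinning a workload to specific cores is typically done through the operating system's CPU-affinity interface. The minimal sketch below assumes a Linux host, where Python's `os.sched_getaffinity` and `os.sched_setaffinity` are available; `pin_to_cores` is a hypothetical helper name, not part of any hosting provider's tooling.

```python
import os


def pin_to_cores(cores):
    """Restrict the caller to the given logical CPU IDs (Linux only).

    Hypothetical helper: returns the affinity set actually in effect.
    """
    if not hasattr(os, "sched_setaffinity"):
        raise NotImplementedError("os.sched_setaffinity is only available on Linux")
    allowed = os.sched_getaffinity(0)      # CPUs the caller may currently run on
    target = set(cores) & allowed          # ignore IDs outside the allowed set
    if not target:
        raise ValueError(f"none of {sorted(cores)} are in {sorted(allowed)}")
    os.sched_setaffinity(0, target)        # pid 0 means the caller itself
    return os.sched_getaffinity(0)
```

For example, `pin_to_cores([2, 3])` would keep a latency-sensitive worker on cores 2 and 3 when those cores are available to the process; intersecting with the current affinity set avoids requesting CPUs the process is not allowed to use.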

Load distribution mechanisms use the core-to-task mapping to balance resource strain across simultaneous input streams. This setup directly affects backend execution time and keeps variability in load response windows low.
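One simple way to map tasks to cores is greedy least-loaded assignment (a form of list scheduling): each incoming task goes to whichever core currently carries the smallest total load. The sketch below assumes task costs are known up front in arbitrary units; `assign_least_loaded` is a hypothetical name, and production schedulers such as the Linux kernel's use far more elaborate policies.

```python
import heapq


def assign_least_loaded(task_costs, num_cores):
    """Greedy list scheduling: give each task to the currently least-loaded core."""
    heap = [(0, core) for core in range(num_cores)]   # (accumulated load, core id)
    heapq.heapify(heap)
    assignment = {core: [] for core in range(num_cores)}
    for task_id, cost in enumerate(task_costs):
        load, core = heapq.heappop(heap)              # lightest core; ties go to lowest id
        assignment[core].append(task_id)
        heapq.heappush(heap, (load + cost, core))
    return assignment
```

For example, `assign_least_loaded([5, 3, 2, 2], 2)` returns `{0: [0, 3], 1: [1, 2]}`, leaving the two cores with loads of 7 and 5.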

Dedicated CPU allocations commonly use high-throughput processors such as Intel Xeon Scalable or AMD EPYC, which offer large L3 caches and high instructions-per-cycle throughput. In dedicated server environments these chips run under virtualization-free execution models, where no hypervisor arbitrates CPU time.

As a result, enterprise websites built on this foundation benefit from predictable concurrency handling, even under surging user interactions or constant data requests.

Within the broader structure of enterprise dedicated server hosting, CPU allocation controls the mechanical capacity to compute, process, and respond at scale. Its alignment with thread-level precision and stable clock governance creates the execution baseline on which enterprise website performance depends.

RAM Availability

RAM availability in enterprise dedicated server hosting is a critical attribute that enables consistent runtime performance, caching efficiency, and application responsiveness for enterprise websites. It refers to provisioned, tenant-exclusive system memory, commonly 32 GB, 64 GB, or 128 GB of DDR4 ECC, physically allocated to a server instance without interference from shared virtualization layers or hypervisor-driven memory ballooning.

This allocation model guarantees memory isolation at the hardware level, preventing performance dilution from neighboring workloads and providing deterministic runtime conditions for enterprise-grade applications.

By fixing the provisioned capacity in gigabytes, RAM availability establishes the upper memory ceiling an enterprise website can rely on for sustained operations. Within that ceiling, memory serves as a working buffer for session object handling, temporary storage of cacheable elements, and heap allocation for backend services. These in-memory operations enable seamless backend script execution, session token persistence, and memory-resident query responses under high load.

Sufficient RAM lets the server hold the state of concurrent user threads, manage persistent sessions, and execute memory-intensive operations without contending for memory with other tenants.

In-memory operations such as real-time caching, transactional state management, and volatile data staging derive direct benefit from consistent RAM availability. This ensures cached query results, real-time analytics payloads, and dynamic content elements remain memory-resident during traffic surges.
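Keeping hot query results memory-resident within a fixed RAM ceiling usually means bounding the cache and evicting an entry when it fills. The sketch below uses least-recently-used (LRU) eviction built on `collections.OrderedDict`; counting capacity in entries rather than bytes is a simplifying assumption, and `LRUCache` is a hypothetical class rather than any particular caching product.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._data = OrderedDict()   # insertion order doubles as recency order

    def get(self, key, default=None):
        if key not in self._data:
            return default
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key, value):
        if key in self._data:
            self._data.move_to_end(key)
        self._data[key] = value
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict the least recently used entry
```

Reading an entry refreshes its position, so frequently requested query results stay resident while cold entries are evicted first.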

For enterprise websites delivering high-concurrency services like CRM platforms, inventory dashboards, or analytics panels, this memory ceiling reduces data latency and supports predictable response cycles under session saturation.

Content delivery acceleration also hinges on RAM’s capacity to preload and store render-ready assets, reducing repeated disk access and promoting high-speed rendering during user navigation bursts.

Enterprise dedicated server hosting that supports RAM availability as a hardware-level commitment maintains operational predictability and content delivery integrity during peak concurrency windows. For enterprise websites, RAM availability maintains cached query data, ensures uninterrupted session logic, and supports fail-safe scalability during high-demand cycles.

Storage Type and Size of Enterprise Dedicated Hosting

Storage type and size in enterprise dedicated server hosting define the storage configuration that determines how the hosting environment handles data-driven operations at scale. This attribute splits into two primary storage media, solid-state drives (SSDs) and hard disk drives (HDDs), each offering distinct throughput performance and latency profiles.

SSD hosting, available in SATA and NVMe interfaces, supports high IOPS throughput and low latency metrics, making it suitable for enterprise websites that rely on real-time database queries, frequent read/write cycles, and static content delivery. In contrast, HDD hosting is mechanically driven and used for cost-efficient cold storage or bulk data repositories with sequential access patterns.

Storage size refers to the provisioned data capacity, commonly measured in GB or TB, and is allocated exclusively to the tenant without shared distribution. This non-virtualized allocation keeps disk throughput stable during peak demand cycles. Storage can scale vertically through additional drives combined in RAID configurations such as RAID 10, which stripes data across mirrored pairs of drives. This layout adds fault tolerance, since each pair survives the loss of one drive, and improves throughput, at the cost of devoting half of the raw capacity to mirror copies.
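Because RAID levels trade raw capacity for redundancy, the usable storage size depends on how drives are arranged. The sketch below computes usable capacity for common levels under the simplifying assumptions of identical drives and no hot spares; `raid_usable_tb` is a hypothetical helper.

```python
def raid_usable_tb(level, drives, drive_tb):
    """Usable capacity (TB) for common RAID levels, assuming identical drives."""
    if level == 0:
        need, usable = 1, drives          # striping only, no redundancy
    elif level == 1:
        need, usable = 2, 1               # every drive holds the same data
    elif level == 5:
        need, usable = 3, drives - 1      # one drive's worth of parity
    elif level == 6:
        need, usable = 4, drives - 2      # two drives' worth of parity
    elif level == 10:
        if drives % 2:
            raise ValueError("RAID 10 needs an even number of drives")
        need, usable = 4, drives // 2     # striped across mirrored pairs
    else:
        raise ValueError(f"unsupported RAID level: {level}")
    if drives < need:
        raise ValueError(f"RAID {level} needs at least {need} drives")
    return usable * drive_tb
```

For example, `raid_usable_tb(10, 4, 1)` gives 2 TB usable from four 1 TB drives, while the same four drives in RAID 5 would yield 3 TB with single-drive fault tolerance.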

For enterprise environments with mixed needs, hybrid storage arrays blend SSD and HDD to balance IO-bound applications with archival retention. These hybrid arrays allow enterprise websites to manage both latency-sensitive transactions and long-term bulk data in a unified configuration.

The specific combination of storage type and size determines how enterprise websites manage persistence workloads, ranging from transactional DB queries to static asset replication. A hosting configuration using NVMe SSDs in 2 TB mirrored arrays delivers sub-millisecond storage latency behind user interactions, while 8 TB HDD-based setups handle archival logs or media libraries with deferred access needs.

Together, these storage dimensions define the enterprise website’s ability to store, access, and maintain content structures with predictable latency and scalable expansion.

SSD Storage

SSD storage is a multidimensional attribute of enterprise dedicated server hosting, making both storage architecture and capacity performance-critical elements for enterprise websites. Unlike mechanical drives, SSDs have no moving parts, which eliminates seek delays and mechanical failure modes and improves reliability under continuous enterprise-level workloads. The flash architecture keeps physical access latency minimal, so performance stays stable across intensive workloads.

At the architectural level, SSD storage operates on flash memory cells and interfaces such as SATA and NVMe. SATA SSDs typically offer throughput in the range of several hundred MB/s, while NVMe SSDs reach multi-GB/s transfer rates through PCIe lanes, drastically improving data access speeds. This distinction affects enterprise workloads directly.
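The gap between interfaces is easiest to see as transfer time. The sketch below converts sustained sequential rates into seconds for a bulk read; the rates are assumptions chosen for illustration (150 MB/s matches the HDD sequential figure given in the HDD Storage subsection, and 550 MB/s and 3,500 MB/s are typical of SATA and PCIe 3.0 x4 NVMe drives), not vendor specifications.

```python
# Assumed sustained sequential rates in MB/s (illustrative, not vendor specs).
ASSUMED_MB_PER_S = {
    "HDD (7,200 RPM)": 150,
    "SATA SSD": 550,
    "NVMe SSD (PCIe 3.0 x4)": 3500,
}


def transfer_seconds(size_gb, mb_per_s):
    """Seconds to move size_gb at a sustained rate, using 1 GB = 1000 MB."""
    return size_gb * 1000 / mb_per_s


if __name__ == "__main__":
    for name, rate in ASSUMED_MB_PER_S.items():
        print(f"{name:>24}: {transfer_seconds(50, rate):6.1f} s to read 50 GB")
```

Under these assumptions, reading 50 GB takes roughly 333 s on the HDD, 91 s on the SATA SSD, and 14 s on the NVMe SSD.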

NVMe SSDs process random I/O with sub-millisecond latency and sustain tens to hundreds of thousands of IOPS, which is crucial for real-time database access, frequent session writes, and rapid content delivery. Combined with fast sequential read/write throughput, this allows high-volume content loads without introducing access bottlenecks.
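Latency and IOPS are linked by Little's law: in a steady state, throughput equals the number of outstanding requests divided by average latency. The sketch below applies that relationship to storage; the queue depths and latencies in the usage note are illustrative assumptions, and the result is only achievable if the device actually sustains that queue depth at that latency.

```python
def iops_from_latency(queue_depth, latency_ms):
    """Little's law: completions per second = outstanding I/Os / mean latency."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return queue_depth / (latency_ms / 1000.0)   # convert ms to seconds
```

For example, an NVMe drive holding 32 outstanding requests at 0.1 ms each implies about 320,000 IOPS, whereas an HDD serving one request at a time at 8 ms implies about 125 IOPS.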

SSD storage supports enterprise website performance by accelerating high-throughput read/write operations, reducing access time during peak traffic, and maintaining consistent I/O performance across simultaneous sessions. As a result, SSD storage directly improves website loading speed, especially during concurrent user access or data-driven interactions. It also enables content caching at the storage layer, helping enterprise websites serve frequently requested assets with fewer reads from slower storage tiers.

In terms of system optimization, consistent write latency reduces synchronization delays in mirrored SSD arrays, and SSDs support caching layers that enhance application responsiveness. NVMe SSDs also deliver latency measured in microseconds, and enterprise models are rated for high write endurance, making them well suited to systems that demand persistent uptime and data integrity under load.

Enterprise dedicated server hosting benefits from SSDs through minimized I/O bottlenecks, improved uptime reliability, and scalable concurrency handling, especially in data-driven deployments where speed and resilience converge as baseline requirements.

SSD storage sustains the data throughput and sub-millisecond latency required for enterprise websites to maintain real-time responsiveness, especially during traffic spikes or transactional surges.

HDD Storage

HDD storage in enterprise dedicated server hosting is a high-capacity, cost-efficient storage type built on spinning magnetic platters and mechanical read/write heads used for data retention and retrieval. This hardware configuration introduces seek and rotational latency, typically 5 to 12 milliseconds per random access, and limits IOPS due to mechanical motion, but it enables multi-terabyte capacity at a significantly lower cost per terabyte than solid-state alternatives.

Typical 7,200 RPM drives introduce access delays and write speed limitations, making them less suitable for high-frequency, transactional applications. However, HDD storage delivers sequential throughput of approximately 150 MB/s, supporting cold-access tiers where high bandwidth isn’t mission-critical.
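Rotational latency follows directly from spindle speed: on average, the head waits half a revolution for the target sector to arrive. The worked sketch below computes that component alone; seek time, which depends on the drive model, adds to it, which is why total random-access latency lands in the multi-millisecond range. `avg_rotational_latency_ms` is a hypothetical helper name.

```python
def avg_rotational_latency_ms(rpm):
    """Average rotational latency in ms: half of one revolution."""
    ms_per_revolution = 60_000 / rpm   # 60,000 ms per minute
    return ms_per_revolution / 2
```

At 7,200 RPM this comes to about 4.17 ms before any seek time is added; a 15,000 RPM drive brings it down to 2 ms.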

HDD storage supports cold data environments by handling large, static datasets that require infrequent access. These include archival storage for compliance records, backup array integration for long-term data protection, and static content repositories for digital assets with minimal interaction. When integrated into enterprise dedicated server hosting, HDD arrays serve as a cold-access layer aligned with regulatory retention policies and internal archival standards.

Within enterprise website infrastructure, HDD configurations are typically deployed through SATA interfaces and organized in RAID setups to add redundancy and aggregate throughput across drives. Because of mechanical read/write operations, HDD storage has write speed limitations, reinforcing its role as a non-primary tier for content delivery.

HDD storage archives enterprise website data that must be retained for compliance or historical purposes, supporting long-term capacity needs without occupying SSD-backed operational tiers.

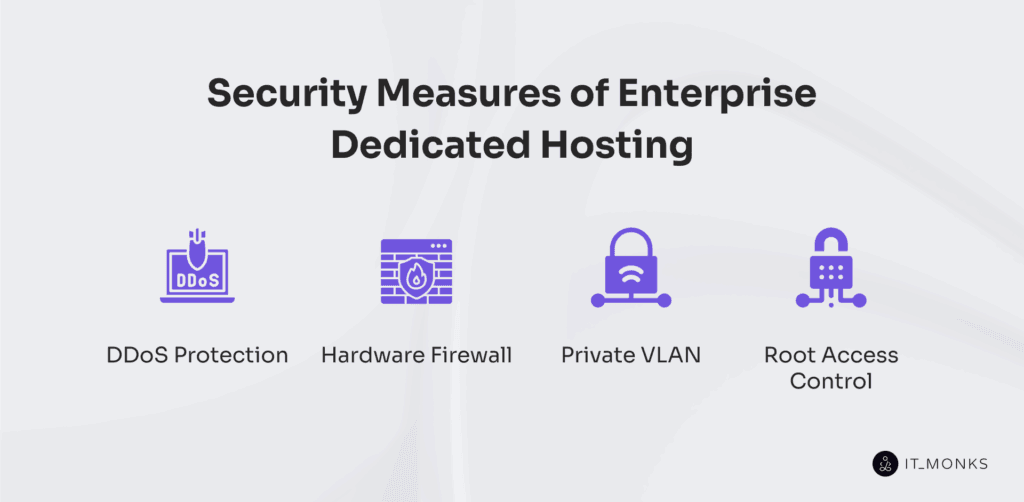

Security Measures of Enterprise Dedicated Hosting

Security measures of enterprise dedicated server hosting form a structured defense architecture for environments where risk control, system resilience, and operational integrity are non-negotiable. Designed to filter malicious traffic, block unauthorized intrusion, and segment sensitive data channels, these enforced protocols provide the foundational defense layers that prevent service disruption for enterprise websites.

Such security measures in enterprise environments are system-enforced controls that apply threat mitigation logic across critical infrastructure access points. Each protocol applies a distinct layer of perimeter defense, access restriction, or traffic isolation, contributing to a multi-tiered systemic safeguard.

DDoS protection blocks volumetric attacks through L3/L4 traffic filtering and packet inspection. Hardware firewalls apply perimeter controls through port-level policies. Private VLANs isolate tenant networks by segmenting traffic and preventing lateral movement. Root access control restricts administrative access using credential gating, login throttling, and IP-based whitelisting.

Together, these mechanisms create a cohesive security architecture in which each function reinforces the others. In enterprise website environments where uptime, data integrity, and compliance are non-negotiable, this integration is operationally essential.

This layered model secures enterprise websites by reducing the attack surface, preserving data confidentiality, and upholding regulatory compliance standards such as HIPAA or PCI-DSS. Within such high-stakes environments, this configuration is structurally required for maintaining business continuity and safeguarding mission-critical workflows.

DDoS Protection

DDoS protection in enterprise dedicated server hosting is a critical, real-time, multi-layered security mechanism that filters, mitigates, and absorbs high-volume distributed denial-of-service attacks before they impact the hosted enterprise website.

DDoS protection operates at both the network perimeter and upstream carrier infrastructure, with automated detection systems calibrated to identify anomalous traffic behavior, such as irregular packet rates or unrecognized IP clusters.

The mitigation process begins with real-time traffic analysis driven by threat detection algorithms that continuously monitor traffic flows, using a combination of IP reputation lists, packet inspection rules, and anomaly-based thresholds. Once flagged, suspicious patterns are redirected to traffic scrubbing nodes, where volumetric traffic control mechanisms, including rate-limiting systems, are deployed to contain the threat.
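Anomaly-based thresholds are often built on a moving baseline of normal traffic. The sketch below shows one simple variant, assumed rather than taken from any specific mitigation product: it keeps an exponentially weighted moving average (EWMA) of packets per second and flags samples that exceed a multiple of it. The `alpha`, `factor`, and `warmup` values and the `RateAnomalyDetector` name are illustrative assumptions.

```python
class RateAnomalyDetector:
    """Flags a sample that exceeds `factor` times an EWMA baseline of packet rate."""

    def __init__(self, alpha=0.1, factor=3.0, warmup=5):
        self.alpha = alpha        # weight given to each new normal sample
        self.factor = factor      # how far above baseline counts as anomalous
        self.warmup = warmup      # samples to observe before flagging anything
        self.baseline = None
        self.samples = 0

    def observe(self, packets_per_s):
        """Return True if this sample looks anomalous."""
        self.samples += 1
        if self.baseline is None:
            self.baseline = float(packets_per_s)
            return False
        anomalous = (self.samples > self.warmup
                     and packets_per_s > self.factor * self.baseline)
        if not anomalous:
            # Learn only from normal traffic so an attack cannot inflate the baseline.
            self.baseline += self.alpha * (packets_per_s - self.baseline)
        return anomalous
```

Updating the baseline only from non-flagged samples is a deliberate design choice: a sustained flood would otherwise pull the average upward until it stopped looking abnormal.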

These systems throttle abnormal request surges before they saturate bandwidth at the server edge. Typical enterprise-grade deployments provide scrubbing capacity exceeding 10 Gbps, with automated mitigation latency under 5 seconds, minimizing disruption even during multi-vector attacks.
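Rate limiting is commonly implemented with a token bucket, shown here as one possible technique rather than the mechanism any particular provider uses. Each traffic source gets a bucket that refills at a steady rate and permits short bursts up to its capacity; requests that find the bucket empty are dropped or throttled. Time is passed in explicitly so the sketch is deterministic; a live limiter would read `time.monotonic()`. The `TokenBucket` class is hypothetical.

```python
class TokenBucket:
    """Token-bucket limiter: refills `rate` tokens/s, allows bursts up to `capacity`."""

    def __init__(self, rate, capacity, now=0.0):
        self.rate = float(rate)
        self.capacity = float(capacity)
        self.tokens = float(capacity)   # start with a full bucket
        self.last = now

    def allow(self, now, cost=1.0):
        """Consume `cost` tokens if available; return whether the request passes."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

With `rate=10` and `capacity=5`, a source can send a burst of five requests at once; the sixth is refused until half a second has passed and the bucket has refilled.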

This protection layer spans Layer 3 and Layer 4 protocols and neutralizes attacks at the protocol level before they can degrade infrastructure response or consume enterprise bandwidth. It actively defends against common volumetric vectors such as SYN floods, UDP amplification, ICMP reflection, and malformed TCP streams.

Application-layer (Layer 7) HTTP GET/POST floods are further neutralized through behavioral rate analysis, often paired with hardware firewalls at the premises level for localized filtration. This coordinated defense preserves throughput stability and ensures that application-layer requests from legitimate users are processed without delay.

Packet filtering rules are continuously updated using attack signature databases to respond to evolving botnet behaviors. By absorbing surges and maintaining throughput stability, DDoS protection within enterprise dedicated server hosting helps prevent SLA degradation and sustain uninterrupted service delivery. It directly supports the uptime objectives of enterprise websites, especially under sustained attacks that would otherwise compromise user access, application performance, and service continuity.

As part of the broader security framework, DDoS protection functions as a systemic control layer, stabilizing the hosting environment under adverse network conditions and reinforcing multi-tiered network-level defense strategies.

Hardware Firewall

A hardware firewall is a core security component within enterprise dedicated server hosting: a physical appliance deployed at the network boundary that acts as the first layer of defense before any traffic reaches the hosted infrastructure.

A hardware firewall’s core role is to inspect packets in real time, apply rule-based filtering, and enforce traffic control policies at OSI layers 3 and 4, including direct enforcement over TCP, UDP, and ICMP traffic. This placement ensures that all ingress and egress flows are subject to perimeter scrutiny before they reach the operating system, reducing risk and preserving server integrity.

Unlike software-based firewalls integrated within the server operating system, the hardware firewall remains completely external, operating independently from hosted workloads and virtual machines. This physical separation allows it to execute filtering logic without resource contention or software-layer vulnerabilities.

Using stateful inspection and access control lists (ACLs), the firewall analyzes packets to enforce allowlist configurations, deny policies, and port-level access control. For instance, unused services like Telnet or FTP can be denied at the port level, while IP ranges from specific geographies often associated with malicious activity can be restricted via static or dynamic blocklists.

In enterprise hosting environments, particularly those handling payment processing, user authentication, or sensitive intranet portals, the hardware firewall acts as a deterministic policy executor. It filters malformed packets, enforces rate limits on targeted protocols, and blocks unauthorized access attempts without requiring mediation from the host OS.

Once a rule is defined, such as rejecting inbound TCP traffic on port 23, the appliance enforces it consistently, even under volumetric attack scenarios or during periods of high load. In such cases, its fail-closed behavior ensures that no unauthorized sessions are initiated, preserving platform stability.

The firewall’s rule set also supports protocol-specific logic: administrators can disable ICMP entirely, restrict UDP traffic except for DNS and NTP, or enable TCP SYN validation to preserve session integrity. These functions form an intrusion prevention boundary that shields the enterprise server from brute-force attacks, protocol exploitation, or malformed packet injections. Firewall actions are also logged in real time, with auditing modules providing actionable insights into blocked events and traffic anomalies, critical for compliance and incident response.
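The rule logic described above can be modeled as an ordered access control list evaluated first-match-wins, with a default deny so that unmatched traffic is dropped (the fail-closed behavior). This minimal sketch is stateless, unlike the stateful inspection real appliances perform, and the `Rule` class, `evaluate` function, and example blocklist (203.0.113.0/24, a range reserved for documentation) are assumptions for illustration.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    action: str                    # "allow" or "deny"
    protocol: str                  # "tcp", "udp", "icmp", or "any"
    port: Optional[int] = None     # destination port; None matches any port
    source: str = "0.0.0.0/0"      # source network in CIDR notation

    def matches(self, protocol, port, src_ip):
        if self.protocol not in ("any", protocol):
            return False
        if self.port is not None and self.port != port:
            return False
        return ipaddress.ip_address(src_ip) in ipaddress.ip_network(self.source)


def evaluate(rules, protocol, port, src_ip):
    """First matching rule wins; unmatched traffic is denied (fail-closed)."""
    for rule in rules:
        if rule.matches(protocol, port, src_ip):
            return rule.action
    return "deny"


# Illustrative inbound rule set mirroring the examples in the text.
RULES = [
    Rule("deny", "any", source="203.0.113.0/24"),  # blocklisted range
    Rule("deny", "tcp", 23),                        # Telnet
    Rule("deny", "icmp"),                           # ICMP disabled entirely
    Rule("allow", "udp", 53),                       # DNS
    Rule("allow", "udp", 123),                      # NTP
    Rule("allow", "tcp", 443),                      # HTTPS
]
```

Any UDP traffic other than DNS and NTP falls through to the default deny, which is how the "restrict UDP except for DNS and NTP" policy is expressed without listing every other port.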

By operating as an enforcement mechanism rather than a passive filter, the hardware firewall plays a central role in upholding SLA-bound uptime guarantees. Its deterministic rule execution, hardware-isolated control logic, and real-time analysis capabilities ensure that enterprise websites remain insulated from network-level threats, preserving service availability, platform integrity, and data security at scale.

Private VLAN

A private VLAN (PVLAN) is a Layer 2 traffic isolation mechanism implemented within enterprise dedicated server hosting to segregate data streams, minimize broadcast exposure, and enforce privacy between server instances or services. It restricts communication between server nodes that share the same switch infrastructure.

This segmentation method is hardware-enforced through switch-level configurations and directly supports enterprise websites by maintaining the separation of backend services from publicly routed interfaces.

Private VLAN isolates east-west traffic by assigning port roles (promiscuous, isolated, and community) via switch-level configuration, thereby reducing the broadcast domain and enforcing tenant-level data separation. For enterprise websites, this role assignment keeps backend APIs or database services invisible to other tenant workloads, reducing exposure risk.

These communication boundaries in enterprise dedicated server hosting are enforced at the switch level, ensuring hardware-defined rules control tenant partitioning and internal traffic behavior. Such isolation limits host-to-host communication to defined port relationships and prevents lateral exposure between departments, services, or workloads hosted on the same dedicated fabric.

By applying VLAN tagging (802.1Q) and port isolation rules, a private VLAN enforces strict communication policies where isolated ports cannot transmit data to other isolated or community ports, and community ports only interact within their defined group. In the context of enterprise website infrastructure, this segmentation prevents exposure of backend services, such as internal APIs, admin portals, or database clusters, to publicly accessible interfaces.
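The forwarding rules above reduce to a small decision table. The sketch below encodes them for ports in one private VLAN; `Port` and `can_forward` are hypothetical names, and on real switches these rules are enforced in hardware through primary and secondary VLAN configuration rather than in application code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Port:
    role: str                         # "promiscuous", "isolated", or "community"
    community: Optional[str] = None   # community name, only for community ports


def can_forward(a: Port, b: Port) -> bool:
    """Whether two ports in the same private VLAN may exchange Layer 2 traffic."""
    if "promiscuous" in (a.role, b.role):
        return True                   # promiscuous ports reach every port
    if a.role == "community" and b.role == "community":
        return a.community == b.community
    return False                      # isolated ports reach only promiscuous ports
```

A typical layout places the gateway on a promiscuous port, public web servers on isolated ports, and a database cluster in its own community, so the database nodes can replicate with each other but not with the web tier or other tenants.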

Enterprise dedicated server hosting utilizes private VLANs to implement tenant segmentation for organizations managing multi-department applications or isolated workloads under compliance mandates. This switch-enforced topology supports frameworks like PCI-DSS or HIPAA by preventing unauthorized lateral traffic and defining controlled data flow between application tiers.

Private VLAN configurations often integrate with network ACLs to extend policy enforcement beyond port rules and into application-aware segmentation. For enterprise websites that operate in environments where internal communication integrity and backend service protection are critical, private VLANs deliver port-level isolation with reduced attack surfaces and minimized broadcast traffic overhead.

Root Access Control

Root access control is a critical administrative safeguard in enterprise dedicated server hosting that regulates privileged operations at the system level. It applies strict segmentation, authentication, and audit enforcement to prevent unauthorized configuration changes and preserve system integrity.

It applies to the superuser permission layer, whether Linux’s root or Windows’ Administrator, which holds full authority over server configurations, services, and execution rights. In enterprise environments, this level of access is never granted by default; it is provisioned through clearly defined administrative access policies and enforced using conditional permission frameworks.

To restrict privileged access, enterprise dedicated hosting implements role-based access control (RBAC), where tiered sudo permissions are assigned based on user roles and functional scope. Access paths are authenticated through SSH key-based login combined with IP whitelisting, limiting root-level authentication to verified machines and users. Every session initiated with elevated credentials is logged to a structured audit trail that captures command-level interactions for forensic review. These logs are retained under policy-driven lifecycle rules, ensuring full alignment with compliance audits.

In multi-user environments, root access control segments permission scopes via sudoers file configurations, granting operational boundaries tied to each administrator’s responsibility. For time-sensitive or event-driven tasks, privilege elevation is time-boxed through credential expiration mechanisms or session token validation frameworks, reducing exposure during deployment or emergency events.
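The decision logic behind role-scoped, time-boxed elevation can be sketched in a few lines. In practice these controls are enforced by sudo policy, SSH configuration, and the audit subsystem; this Python model only illustrates the policy, and the role names, command strings, and `PrivilegeBroker` class are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical role -> permitted privileged commands.
ROLE_COMMANDS = {
    "web-admin": {"systemctl restart nginx", "systemctl reload nginx"},
    "db-admin": {"systemctl restart postgresql"},
}


@dataclass
class PrivilegeBroker:
    grants: dict = field(default_factory=dict)     # user -> (role, expires_at)
    audit_log: list = field(default_factory=list)  # (time, user, command, allowed)

    def grant(self, user, role, now, ttl_s):
        """Time-boxed elevation: the grant lapses ttl_s seconds after `now`."""
        if role not in ROLE_COMMANDS:
            raise ValueError(f"unknown role: {role}")
        self.grants[user] = (role, now + ttl_s)

    def authorize(self, user, command, now):
        """Allow only in-scope commands from unexpired grants; log every attempt."""
        role, expires_at = self.grants.get(user, (None, 0))
        allowed = (role is not None
                   and now < expires_at
                   and command in ROLE_COMMANDS[role])
        self.audit_log.append((now, user, command, allowed))
        return allowed
```

Denied attempts are logged alongside allowed ones, since failed elevation attempts are often the most useful entries during forensic review.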

The operational risks of unregulated root access include unauthorized system alterations, insecure script propagation, and misconfigurations that breach compliance frameworks such as ISO 27001, HIPAA, or SOC 2. Root access control governs the conditions under which superuser rights are granted, segmented, and elevated, ensuring only authorized personnel execute privileged actions. It restricts scope, segments roles, logs usage, and enforces temporal thresholds to protect system-level integrity.

Root access control ensures that elevated privileges within enterprise dedicated server hosting are enforced under strict authentication and role-based governance. For enterprise websites, where uptime, platform resilience, and backend accountability are mandatory, this structural enforcement keeps administrative actions traceable, conditionally elevated, and isolated to accountable stakeholders. It supports operational continuity, reduces internal threat vectors, and aligns infrastructure management with enterprise-grade security policy.

Uptime Guarantee of Dedicated Server for Enterprise

The uptime guarantee of enterprise dedicated server hosting functions as a measurable commitment within a formal service-level agreement (SLA), defining the minimum operational time a server must sustain across monthly or annual intervals. Unlike generalized assurances, it specifies exact availability thresholds, such as 99.9% or 100%, that convert directly into defined windows of allowable downtime.

For example, a 99.9% SLA allows for approximately 43.8 minutes of downtime per month, while 99.99% reduces this to under 4.4 minutes, values that frame operational risk tolerance for enterprise platforms. These numerical thresholds serve as the operational baseline for enterprise websites where real-time availability governs financial transactions, health data exchanges, and mission-critical application execution.
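The downtime figures above follow from simple arithmetic: allowed downtime equals the measurement period multiplied by (1 − SLA/100). The sketch below assumes a 365-day year and a month of one-twelfth of that (43,800 minutes), which is the convention that reproduces the 43.8-minute and 8.76-hour figures used in this section.

```python
MINUTES_PER_YEAR = 365 * 24 * 60          # 525,600 minutes (365-day year assumed)
MINUTES_PER_MONTH = MINUTES_PER_YEAR / 12  # 43,800 minutes


def allowed_downtime_minutes(sla_percent: float, period_minutes: float = MINUTES_PER_MONTH) -> float:
    """Downtime budget permitted by an availability SLA over one period."""
    return period_minutes * (1 - sla_percent / 100)


for sla in (99.9, 99.99, 100.0):
    monthly = allowed_downtime_minutes(sla)
    yearly_hours = allowed_downtime_minutes(sla, MINUTES_PER_YEAR) / 60
    print(f"{sla}%: {monthly:.2f} min/month, {yearly_hours:.2f} h/year")
```

Providers may instead use calendar months or a 30-day month, which shifts the monthly budget slightly, so the contract's own definition of the measurement window takes precedence.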

Enterprise dedicated server hosting enforces service availability metrics through infrastructure redundancy: dual power sources, fault-tolerant hardware with hardware-level failover protocols, and load-balanced network paths. Together these eliminate single points of failure, keeping cumulative downtime below the SLA's defined threshold.

Monthly uptime calculation models combine data from network monitoring nodes, NOC incident tracking systems, and third-party auditing agents to deliver compliance verification. These monitoring systems are essential for SLA enforcement, providing verifiable uptime data that underpins remediation clauses and tier-based guarantees.
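A monthly uptime figure can be derived from outage records gathered by those monitoring sources. The sketch below is an illustration under stated assumptions, not any provider's actual model: outage times are minutes from the start of a 43,800-minute billing month, scheduled maintenance is excluded from downtime, and overlapping reports of the same incident (for example from two monitoring nodes) are merged so they are not double-counted.

```python
from dataclasses import dataclass


@dataclass
class Outage:
    start_min: float  # minutes since the start of the billing month
    end_min: float
    scheduled: bool   # True for announced maintenance windows, excluded from the SLA


def monthly_uptime_percent(outages: list[Outage], period_min: float = 43_800) -> float:
    # Keep only unscheduled outages and sort them by start time.
    intervals = sorted((o.start_min, o.end_min) for o in outages if not o.scheduled)
    downtime = 0.0
    cur_start = cur_end = None
    for start, end in intervals:
        if cur_end is None or start > cur_end:
            # Disjoint interval: close out the previous merged run.
            if cur_end is not None:
                downtime += cur_end - cur_start
            cur_start, cur_end = start, end
        else:
            # Overlapping report of the same incident: extend the current run.
            cur_end = max(cur_end, end)
    if cur_end is not None:
        downtime += cur_end - cur_start
    return 100 * (1 - downtime / period_min)


records = [
    Outage(100, 120, scheduled=False),  # reported by monitoring node A
    Outage(110, 130, scheduled=False),  # same incident seen by node B
    Outage(500, 620, scheduled=True),   # maintenance window, excluded
]
print(f"{monthly_uptime_percent(records):.4f}%")
```

Here the two overlapping reports merge into a single 30-minute incident, so the month stays above the 99.9% threshold.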

These guarantees are central to maintaining business continuity for enterprise websites, ensuring uninterrupted access to financial platforms, healthcare applications, and customer-facing services. The subsequent SLA tiers define differentiated levels of uptime, including premium offerings and remediation clauses, which directly correlate with the infrastructure’s capacity to sustain uninterrupted service.

99.9% SLA Uptime Standard

The 99.9% SLA uptime standard is a baseline contractual and operational threshold offered within enterprise dedicated server hosting. It limits total allowable downtime to approximately 43.8 minutes per month or 8.76 hours per year. This availability percentage is formalized through a Service-Level Agreement (SLA) and specifies a foundational uptime expectation for enterprise websites that require high availability without demanding absolute continuity.

The standard is enforced through a tightly integrated hosting architecture combining redundant infrastructure, 24/7 real-time monitoring systems, automated incident logging, and failover protocols.

These components feed into the uptime calculation model and collectively align the infrastructure with the SLA-defined availability target. Load-balanced network configurations and parallel power systems mitigate service interruptions caused by hardware faults or localized failures.

Within the enterprise context, the 99.9% SLA tier supports business-critical applications that demand sustained accessibility, such as internal portals, customer account dashboards, or B2B ordering systems, while permitting limited scheduled maintenance windows that are excluded from the uptime calculation. This makes it particularly suitable for industries like finance, healthcare, and SaaS, where uptime during operational hours is essential but limited downtime windows can be operationally acceptable.

The SLA serves not only as a service benchmark but also as a performance guarantee. It binds the hosting provider to maintain service continuity within the downtime allowance, tracked and recorded via availability reports as part of a standardized service continuity metric. Violations of this threshold trigger contractual consequences, such as performance credits, which are tied to the deviation from the agreed uptime percentage guarantee.

100% SLA with Premium Enterprise Plans

The 100% SLA offered through premium enterprise plans is a zero-downtime performance tier available in enterprise dedicated server hosting, guaranteeing complete infrastructure and network availability with zero-minute downtime tolerance.

This contractual, zero-tolerance SLA is enforced through enterprise dedicated server hosting configurations engineered to exceed standard availability thresholds. To maintain uninterrupted service, the hosting environment integrates active-active server clustering, real-time DNS failover, redundant carrier links, dual power systems, and HA (High Availability) infrastructure.

BGP multi-carrier routing is layered into the network core, ensuring continuous path availability even during upstream provider degradation. These systems are reinforced by an uptime enforcement system with continuous SLA monitoring and sub-15-minute incident escalation protocols.

The architecture supporting this SLA tier eliminates single points of failure by default. Multi-zone failover ensures that any disruption in a node or data center triggers real-time traffic rerouting with no impact on service delivery. Hot-swappable hardware components, spanning storage and network interfaces, are deployed across the stack to minimize manual intervention in live environments, ensuring failover continuity even under active workload pressure.

Power redundancy is extended through battery backups and diesel backup generators in N+1 or 2N configurations, completing an infrastructure design hardened for mission-critical operations.
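The difference between N+1 and 2N comes down to how many units are installed relative to the N units the load actually needs. The sketch below counts installed units and tolerated unit failures for each scheme. It deliberately ignores a key qualitative property of 2N, namely that the two sets usually sit on fully independent distribution paths, which a simple unit count cannot capture.

```python
def redundancy_plan(required_units: int, scheme: str) -> dict:
    """Installed units and tolerated unit failures for a load needing `required_units`."""
    if required_units < 1:
        raise ValueError("required_units must be >= 1")
    if scheme == "N+1":
        installed = required_units + 1  # one spare unit shared by the whole system
    elif scheme == "2N":
        installed = 2 * required_units  # a complete duplicate of the required capacity
    else:
        raise ValueError(f"unsupported scheme: {scheme}")
    return {"installed": installed, "tolerated_failures": installed - required_units}


print(redundancy_plan(4, "N+1"))
print(redundancy_plan(4, "2N"))
```

N+1 survives a single unit failure at modest extra cost, while 2N can lose an entire side (for example, a whole UPS or generator path) and still carry the full load.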

This level of SLA enforcement is selected for enterprise websites that cannot tolerate operational failure, operating in revenue-critical or compliance-regulated environments, such as healthcare data portals, global SaaS platforms, fintech infrastructures, and e-commerce transaction engines. For these platforms, a single failed request may cascade into monetary loss, regulatory breach, or reputational damage.

Unlike general uptime guarantees, the 100% SLA includes strict penalty clauses for violation, tied to a real-time service credit system and enforced through verified third-party monitoring.

The zero-minute tolerance threshold elevates this SLA from aspirational to contractual, structuring hosting behavior around obligation, not best effort. This structured commitment links SLA enforcement to every functional layer of the infrastructure.

Downtime Compensation Policies

Downtime compensation policies in enterprise dedicated server hosting are the contractual enforcement layer tied to uptime guarantees. These policies are structured to issue service credits when actual uptime dips below the SLA-defined threshold, typically measured within a rolling monthly uptime window.

Each verified instance of unscheduled downtime exceeding the SLA breach threshold initiates a predefined credit ratio, such as a 10% deduction per 30-minute increment, directly applied to the client’s next monthly invoice.

The policy is activated upon a documented SLA breach, substantiated by monitoring logs, incident timestamps, and Reasons for Outage (RFOs). Claims must be submitted through formal channels, usually within a specified window via a support ticketing system, accompanied by the corresponding claim ticket ID. Compensation is limited by tier-based credit caps defined per billing cycle and explicitly excludes scheduled maintenance and force majeure events.
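The credit mechanics described above (a percentage per downtime increment beyond the allowance, subject to a cap) can be expressed as a small function. The 10% per 30-minute increment comes from the example in this section. The 50% cap and the choice to count any partial increment as a full one are assumptions for illustration, since actual contracts define both.

```python
import math


def service_credit_percent(
    unscheduled_downtime_min: float,
    allowed_downtime_min: float,
    credit_per_increment: float = 10.0,  # example ratio from the text
    increment_min: float = 30.0,         # example increment from the text
    cap_percent: float = 50.0,           # hypothetical tier-based cap
) -> float:
    """Credit (as % of the monthly invoice) for downtime beyond the SLA allowance."""
    excess = unscheduled_downtime_min - allowed_downtime_min
    if excess <= 0:
        return 0.0
    increments = math.ceil(excess / increment_min)  # assumption: partial increments round up
    return min(increments * credit_per_increment, cap_percent)


monthly_fee = 2_000.00  # hypothetical invoice amount
credit = monthly_fee * service_credit_percent(100, 40) / 100
print(f"credit applied to next invoice: {credit:.2f}")
```

For a 100% SLA tier, `allowed_downtime_min` is simply 0, so the first verified minute of unscheduled downtime starts accruing credit.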

This enforcement protocol substantiates enterprise website reliability with quantifiable accountability. By linking uptime shortfalls to direct financial consequences, enterprise dedicated server hosting aligns its infrastructure commitments with the operational needs of business-critical applications.

By enforcing clearly defined credit tiers for verified downtime incidents, downtime compensation policies function as a contractual safeguard that protects enterprise websites from hosting infrastructure failures. These policies provide a fallback layer that not only compensates for service disruption but also validates the SLA framework backing high-availability enterprise platforms.

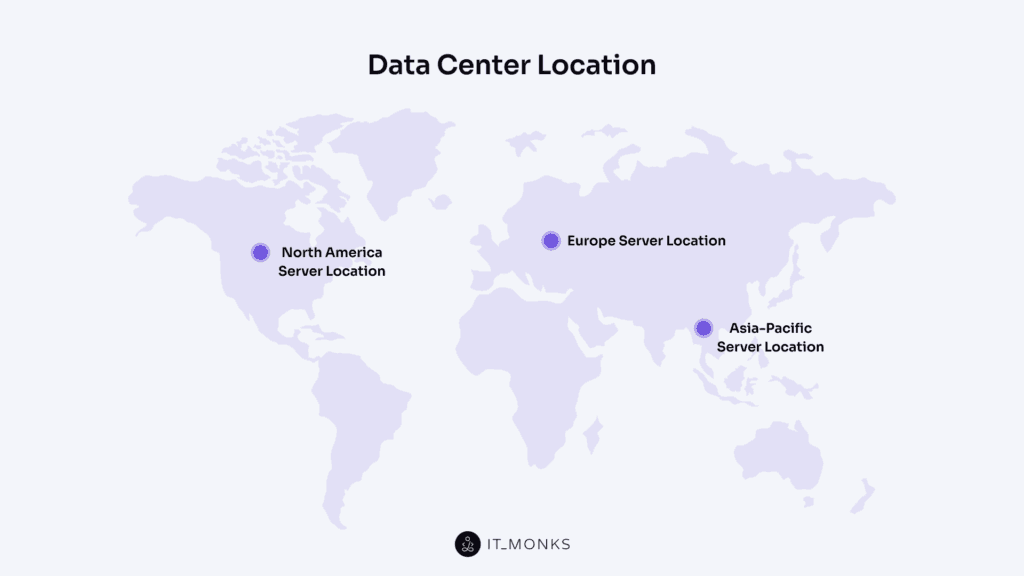

Data Center Location

Data center location in enterprise dedicated server hosting determines the physical point-of-presence where the hosting infrastructure is deployed. This spatial configuration directly affects latency patterns, regulatory compliance, and failover architecture: from routing efficiency to jurisdictional enforcement, the geographic positioning of servers shapes how enterprise websites operate under performance-critical conditions.

Proximity to users reduces round-trip time (RTT), resulting in faster page rendering and fewer latency spikes. For enterprise websites that serve region-specific audiences or operate under strict SLAs, location-based latency optimization becomes a performance mandate rather than a convenience. ISO-certified facilities in strategic regions support edge-routing performance through proximity-based load balancing, mitigating service degradation during peak access hours.
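Why proximity matters can be quantified with propagation delay alone. Light in optical fiber travels at roughly two-thirds of its vacuum speed, about 200 km per millisecond, so the physical lower bound on RTT is twice the path length divided by that speed. The sketch below computes that floor; real RTT is higher because of routing detours (modeled here by a hypothetical `route_inflation` factor), queuing, and processing.

```python
FIBER_KM_PER_MS = 200.0  # approx. speed of light in fiber (~2/3 c)


def min_rtt_ms(path_km: float, route_inflation: float = 1.0) -> float:
    """Lower bound on round-trip time from propagation delay only.

    route_inflation > 1 models fiber paths longer than the straight-line distance.
    """
    return 2 * path_km * route_inflation / FIBER_KM_PER_MS


print(min_rtt_ms(100))        # same metro region
print(min_rtt_ms(4000))       # roughly U.S. coast to coast
print(min_rtt_ms(1000, 1.5))  # 1,000 km with a 50% longer fiber route
```

No amount of server tuning can beat this floor, which is why placing servers near the user base is the primary latency lever.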

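Jurisdictional data residency compliance depends on the geographic site of data processing and storage. Regulations such as GDPR in Europe restrict transfers of personal data outside the EEA unless an adequacy decision or another approved safeguard applies, and U.S. frameworks such as CCPA impose obligations on how consumer data is collected, shared, and protected. Enterprises therefore often keep data within defined legal boundaries unless explicit governance mechanisms are in place.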

For sectors like finance, healthcare, and defense, geographic infrastructure alignment is essential to avoid breach of SLA terms and sector-specific regulatory frameworks.

From an availability standpoint, distributing infrastructure across multiple zones supports regional failover capability. This multi-zone architecture aligns with enterprise-grade SLA requirements by ensuring that localized failover nodes activate without service degradation. Operational segmentation prevents service-wide outages and reinforces enterprise website resilience under adverse conditions.

Hosting providers offering enterprise dedicated environments build their infrastructure around a global hosting footprint. This footprint aligns deployment zones with business objectives such as regulatory adherence, user proximity, and SLA-based service targeting.

Hosting deployments are commonly distributed across North America, Europe, and Asia-Pacific to maximize reach and compliance coverage. Each deployment region operates with its own regional SLA, latency model, data sovereignty scope, and infrastructure traits, detailed in the following subsections.
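Choosing among these regions combines a hard constraint (data residency) with an optimization target (latency). The sketch below filters regions by permitted jurisdiction and then picks the lowest measured RTT. The region names, jurisdiction codes, and RTT values are hypothetical placeholders, not any provider's catalog.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    name: str          # hypothetical region identifier
    jurisdiction: str  # e.g. "EU", "US", "CA", "IN"
    rtt_ms: float      # measured median RTT from the target user base


def pick_region(regions: list[Region], allowed_jurisdictions: set[str]) -> Region:
    """Lowest-latency region whose jurisdiction satisfies the residency rule."""
    eligible = [r for r in regions if r.jurisdiction in allowed_jurisdictions]
    if not eligible:
        raise LookupError("no region satisfies the data residency constraint")
    return min(eligible, key=lambda r: r.rtt_ms)


regions = [
    Region("us-east", "US", 12.0),
    Region("eu-central", "EU", 85.0),
    Region("eu-west", "EU", 78.0),
]
print(pick_region(regions, {"EU"}).name)
```

Applying the residency filter first means a faster but non-compliant region can never win, which mirrors how regulated workloads are usually placed.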

North America Server Location

The North America server location in enterprise dedicated server hosting is a geographically strategic deployment zone for enterprise websites targeting U.S. and Canadian users. North America server locations operate within key metro regions like Virginia, California, Texas, and Ontario, where proximity to major business and tech ecosystems strengthens both operational and legal alignment.

Connected through Tier 1 network providers and carrier-neutral exchanges, they support multi-path routing, optimizing content delivery with low round-trip time across all principal urban hubs in North America.

Regional peering zones span both domestic and cross-border IXPs, enabling stable bandwidth paths with low jitter for latency-sensitive applications. Average RTT within individual U.S. metro regions remains under 20ms, supporting SLA-bound delivery thresholds for enterprise websites; coast-to-coast paths are necessarily longer because of fiber propagation distance.

Enterprise dedicated server hosting in the North America server location fortifies routing efficiency through high-speed peering agreements across national and cross-border nodes, creating a low-latency delivery zone ideal for platforms with demanding performance thresholds.

Dedicated server hosting supports uninterrupted performance through disaster-resistant architectural zones and on-site failover systems. These data centers operate in climate-controlled environments with redundant power grids and are audited against ISO and SSAE frameworks, ensuring physical infrastructure readiness for continuous uptime. SLA monitoring centers embedded in these zones reinforce contractual performance guarantees.

Enterprise dedicated server hosting in the North America server location complies with regulatory demands across U.S. and Canadian jurisdictions, aligning with HIPAA, SOC 2, and CCPA requirements. Data residency enforcement mechanisms are in place for Canadian provinces requiring localized data storage and access policies. This compliance scope makes the North America server location particularly effective for regulated data applications in healthcare, SaaS, and financial operations.

Enterprise websites with primary user bases in the U.S. and Canada benefit from this location’s regional SLA alignment, jurisdictional coverage, and low-latency delivery network. Whether supporting multinational e-commerce with U.S.-centric fulfillment or corporate platforms handling sensitive customer data, the North America server location strengthens legal defensibility and operational reliability across both federal and provincial scopes.

Europe Server Location

The Europe server location in enterprise dedicated server hosting is a strategically deployed infrastructure zone that supports GDPR-compliant, low-latency operations for enterprise websites serving European markets. It’s a geographically distributed network of Tier III and Tier IV data centers operating within countries like Germany, the Netherlands, Ireland, and the United Kingdom.

These infrastructure nodes host enterprise websites under region-specific legal enforcement and interconnectivity policies, forming a sovereign hosting zone engineered to enforce EU data residency mandates and deliver latency-optimized content across continental networks.

Each data center supports enterprise-level operations through redundant grid-level power feeds, ISO 27001 and EN 50600 certifications, EU-based Network Operations Centers (NOCs), and carrier-neutral access. This setup maintains operational availability and enforces regional data segregation through intra-European delivery protocols.

Enterprise dedicated server hosting in the European zone supports full GDPR jurisdiction enforcement and satisfies in-country data residency mandates, ensuring that data is processed and retained within EU legal borders, or in jurisdictions covered by an EU adequacy decision such as the United Kingdom, under verifiable audit conditions.

Such regional data processing scope satisfies ePrivacy Directive constraints and restricts extraterritorial data transfers unless they are authorized under an EU adequacy decision or another GDPR transfer mechanism, such as Standard Contractual Clauses.

By maintaining EU-based NOC operations and infrastructure audits under EN 50600, the Europe server location delivers a controllable operational surface that supports verifiable GDPR compliance and enterprise-grade service continuity.

Latency-sensitive applications such as real-time analytics dashboards, multilingual content platforms, and high-frequency trading systems benefit from sub-20ms round-trip latencies across metro regions, supported by proximity-based routing, regional peering agreements, and direct IX interconnects across Frankfurt, Amsterdam, and London. DNS-level resolution also adheres to intra-EU jurisdiction routing rules, minimizing cross-border latency and legal exposure.

Europe server location supports enterprise websites operating under legal obligations in sectors like EU-regulated financial services, multinational SaaS with cross-border SLAs, and pan-European e-commerce ecosystems. Deployment in this zone supports content delivery aligned with user jurisdiction, system availability thresholds, and enterprise-grade compliance reports required for jurisdiction-bound operations.

Enterprise websites targeting the European customer base often pair Europe server locations with complementary deployment zones, such as North America or Asia-Pacific, for global redundancy while retaining strict intra-EU data routing for compliance-driven services.

Asia-Pacific Server Location

The Asia-Pacific server location in enterprise dedicated server hosting is a latency-optimized, compliance-aware, and traffic-scalable infrastructure zone. It hosts a network of high-capacity, low-latency data centers across major metropolitan zones including Tokyo, Singapore, Sydney, and Mumbai.

The Asia-Pacific infrastructure integrates a fiber mesh backbone with international multi-IX peering, submarine cable access, and geo-redundant routing protocols to deliver resilient performance for enterprise websites requiring cross-region availability without latency degradation.

As a latency-optimized segment of the global hosting architecture, the Asia-Pacific Server Location routes traffic through regional edge nodes to maintain sub-50ms delivery thresholds across dense user clusters in Southeast Asia and Oceania. This is reinforced through CDN alignment strategies, enabling consistent content load times regardless of device class or local network condition.

The Asia-Pacific server location complies with India’s data localization mandates, aligns with Singapore’s PDPA for personal data governance, and adheres to Australia’s Privacy Act and APPs. These legal frameworks allow enterprises to operate in-country hosting while preserving cross-border redundancy.

Enterprise websites leverage the Asia-Pacific server location to segment traffic by jurisdiction, serve country-specific portals, and balance eastern hemisphere demand during western low-traffic cycles. This region also functions as an RTO-aligned failover zone within broader availability frameworks, enabling multi-point failover and inter-APAC latency bridging to support service continuity under high availability standards.

Enterprise dedicated server hosting positions the Asia-Pacific Server Location as a compliance-synchronized, latency-optimized infrastructure layer, purpose-built to serve regulated and performance-critical enterprise use cases across Japan, South Korea, Australia, India, and Southeast Asia.

Scalability Options for Enterprise Dedicated Server Hosting

Scalability options in enterprise dedicated server hosting refer to the structured capacity-expansion mechanisms that enable enterprise websites to grow by increasing dedicated physical resources (CPU, RAM, storage, and server nodes) without disrupting performance or violating uptime guarantees.

This is a non-virtual scaling model: unlike elastic cloud platforms, scalability here requires hardware reconfiguration and manual provisioning instead of automatic abstraction. The approach orchestrates dedicated node expansion to support the stable growth of enterprise websites through predictive resource allocation and infrastructure alignment with evolving workload patterns.

These scalability options are essential for enterprise websites that experience fluctuating usage patterns, workload surges, or sustained transactional growth. They ensure the platform scales without architectural disruption and are foundational for scalable website development, where infrastructure evolves hand-in-hand with application complexity and performance demands.

A scalable website is delivered through structured architecture models that align expansion methods with performance needs and service guarantees. There are three main scaling paths: vertical, horizontal, and custom configuration.

Vertical scaling capacity addresses resource limitations within a single server, typically by increasing memory or processing power. It suits applications with centralized workloads that need more performance but no distribution. Horizontal node distribution expands across multiple servers, using load balancing design to distribute traffic and support services that benefit from parallel processing. This model fits websites expecting traffic bursts or distributed transactions.

Custom provisioning models cover needs that don’t align with standard scaling logic. These setups are defined per workload and often include specific hardware orchestration or software tuning for regulated environments or proprietary systems. All models function under a scalable architecture, engineered to preserve SLAs and ensure service continuity under expansion, through resource isolation and deployment controls.

Scaling actions are governed by a capacity planning framework, including provisioning windows, threshold forecasts, and infrastructure checks. Dedicated hosting doesn’t scale instantly; every expansion requires manual orchestration that preserves service-level guarantees. Scalability in this context emphasizes long-term continuity over short-term elasticity, preparing infrastructure to handle sustained growth without compromising system integrity.
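Because dedicated hardware has a provisioning lead time, threshold forecasts need to trigger orders before utilization actually crosses the limit. The sketch below fits a least-squares trend line to weekly utilization samples and flags when the projected crossing falls inside the lead time. The 75% threshold, the 4-week lead time, and the linear-trend assumption are all illustrative; real capacity models often account for seasonality and peaks.

```python
from typing import List, Optional


def weeks_until_threshold(samples: List[float], threshold: float) -> Optional[float]:
    """Weeks after the last sample until a linear trend reaches `threshold`.

    Returns None when utilization is flat or falling.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = (n - 1) / 2
    mean_y = sum(samples) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(range(n), samples))
    var = sum((x - mean_x) ** 2 for x in range(n))
    slope = cov / var
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    crossing_x = (threshold - intercept) / slope
    return max(0.0, crossing_x - (n - 1))  # 0.0 if already over the threshold


def should_order_hardware(samples: List[float], threshold: float = 75.0,
                          lead_time_weeks: float = 4.0) -> bool:
    """True if the forecast crossing falls within the provisioning lead time."""
    weeks = weeks_until_threshold(samples, threshold)
    return weeks is not None and weeks <= lead_time_weeks


weekly_cpu = [50, 55, 60, 65]  # hypothetical weekly average CPU utilization (%)
print(weeks_until_threshold(weekly_cpu, 75.0), should_order_hardware(weekly_cpu))
```

Tying the decision to lead time rather than to the threshold itself is what lets manual provisioning keep pace with growth without breaching SLAs.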

Vertical Scaling

Vertical scaling in enterprise dedicated server hosting is a resource-expansion mechanism that increases the performance capacity of a single physical server by expanding its core hardware components, typically through CPU core expansion, memory module upgrades, or disk capacity scaling within the same server chassis. Unlike distributed or virtualized setups, vertical scaling maintains a unified, non-virtual performance architecture that directly augments the compute density of the original node.

This model becomes operationally significant for enterprise websites built on centralized frameworks or those with tightly coupled application layers. When these workloads intensify, due to growing databases, higher session concurrency, or compute-heavy processes, vertical scaling supports the performance demand by allocating more local resources instead of fragmenting the system across multiple nodes.

To support hardware continuity, enterprise servers often include modular infrastructure designed for incremental expansion, with provisioning handled through resource headroom planning. Some enterprise dedicated servers are pre-configured with additional CPU sockets, vacant DIMM slots, or unoccupied drive bays so they can be upgraded in place rather than replaced. Drives added to hot-swap bays can typically be brought online without downtime, whereas CPU and memory additions usually require a brief scheduled power-down.

Where pre-allocation is absent, vertical scaling may require scheduled hardware installation windows, involving hot-swappable drive integration or server chassis reconfiguration.

This single-node performance augmentation preserves hosting consistency through a resource consolidation policy. Enterprise websites that depend on low-latency transaction processing, session continuity, or localized caching benefit from higher I/O throughput and shorter data paths, since processing stays on one machine instead of crossing network hops, while thermal management remains confined to a single chassis. It also limits the need for extensive software reengineering, as the resource environment remains logically intact.

However, each server chassis has physical ceilings (maximum RAM capacity, CPU socket count, and finite PCIe lane bandwidth) that eventually constrain further upgrades. Vertical scaling extends the useful life of a server node but doesn't replace the need for architectural scalability planning.
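Before scheduling a vertical upgrade, a planned configuration can be checked against the chassis ceilings. The sketch below compares a proposed upgrade with a hypothetical chassis specification and lists every limit it would exceed. The field names and numbers are illustrative, and PCIe lane budgeting is left out because it depends on the specific motherboard and add-in cards.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChassisLimits:
    cpu_sockets: int
    max_cores_per_cpu: int  # highest core count the supported CPU family offers
    dimm_slots: int
    max_dimm_gb: int
    drive_bays: int


@dataclass(frozen=True)
class Upgrade:
    cpus: int
    cores_per_cpu: int
    dimms: int
    dimm_gb: int
    drives: int


def upgrade_blockers(limits: ChassisLimits, plan: Upgrade) -> list[str]:
    """Return human-readable reasons the plan exceeds the chassis; empty if it fits."""
    blockers = []
    if plan.cpus > limits.cpu_sockets:
        blockers.append(f"needs {plan.cpus} sockets, chassis has {limits.cpu_sockets}")
    if plan.cores_per_cpu > limits.max_cores_per_cpu:
        blockers.append("core count exceeds the supported processor family")
    if plan.dimms > limits.dimm_slots:
        blockers.append(f"needs {plan.dimms} DIMM slots, chassis has {limits.dimm_slots}")
    if plan.dimm_gb > limits.max_dimm_gb:
        blockers.append(f"{plan.dimm_gb} GB DIMMs exceed the {limits.max_dimm_gb} GB maximum")
    if plan.drives > limits.drive_bays:
        blockers.append(f"needs {plan.drives} drive bays, chassis has {limits.drive_bays}")
    return blockers


chassis = ChassisLimits(cpu_sockets=2, max_cores_per_cpu=32, dimm_slots=16, max_dimm_gb=64, drive_bays=8)
plan = Upgrade(cpus=2, cores_per_cpu=24, dimms=16, dimm_gb=32, drives=6)  # 16 x 32 GB = 512 GB RAM
print(upgrade_blockers(chassis, plan) or "fits within chassis limits")
```

When the list is non-empty, the workload has reached the point where horizontal scaling or a new chassis becomes the next step.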

Horizontal Scaling

Horizontal scaling in enterprise dedicated server hosting is the method of expanding infrastructure by adding additional server nodes. Unlike vertical scaling, which amplifies resources within a single server, horizontal scaling distributes load across multiple nodes, eliminating the bottlenecks associated with single-machine limitations.

This deployment model supports elastic traffic absorption and concurrent operations for enterprise websites, particularly under conditions of fluctuating demand or segmented service logic. It also enables service replication across nodes, allowing each machine to duplicate critical workloads in real-time without interdependency stalls.

The architecture of horizontal scaling integrates a dedicated load balancer that routes traffic according to node-level allocation strategies. These components distribute traffic across server groups using application-layer mechanisms, ranging from HTTP session segmentation across stateless web frontends to database sharding and cache layer replication.
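One common node-level allocation strategy is least connections: each new request goes to the healthy node currently serving the fewest active connections. The sketch below shows that policy in isolation; the node names are hypothetical, and a production load balancer would also handle weights, connection draining, and concurrency, which are omitted here.

```python
class LeastConnectionsBalancer:
    """Route each request to the healthy node with the fewest active connections."""

    def __init__(self, nodes):
        self.active = {node: 0 for node in nodes}  # insertion order breaks ties
        self.healthy = set(nodes)

    def acquire(self) -> str:
        candidates = [n for n in self.active if n in self.healthy]
        if not candidates:
            raise RuntimeError("no healthy nodes available")
        node = min(candidates, key=lambda n: self.active[n])
        self.active[node] += 1
        return node

    def release(self, node: str) -> None:
        self.active[node] -= 1  # call when the request completes

    def mark_down(self, node: str) -> None:
        self.healthy.discard(node)  # e.g. after a failed health check


lb = LeastConnectionsBalancer(["web-1", "web-2", "web-3"])
first_three = [lb.acquire() for _ in range(3)]
lb.release("web-2")
print(first_three, lb.acquire())
```

Compared with plain round-robin, least connections adapts when some requests are much longer-lived than others, which is typical of mixed web and API traffic.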

Such orchestration ensures consistent routing, state handling, and replication logic across nodes. To preserve stateful service behavior, synchronization protocols maintain session persistence across the cluster. Each hardware node array communicates via node-to-node heartbeats and operates under a failover-ready configuration to isolate failure domains without collapsing the full environment.
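Session persistence can be achieved in several ways (sticky cookies, a shared session store, or hash-based affinity); the text does not specify which. As one illustration, the sketch below uses consistent hashing: each session ID maps to a stable node, and removing a node only reassigns the sessions that lived on it. The node names and the choice of MD5 as a non-cryptographic placement hash are assumptions for the example.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    # MD5 used only for uniform placement on the ring, not for security.
    return int(hashlib.md5(key.encode()).hexdigest(), 16)


class ConsistentHashRing:
    def __init__(self, nodes, vnodes: int = 100):
        self.vnodes = vnodes  # virtual points per node smooth out the distribution
        self._ring = []       # sorted list of (hash, node)
        for node in nodes:
            self.add(node)

    def add(self, node: str) -> None:
        for i in range(self.vnodes):
            bisect.insort(self._ring, (_hash(f"{node}#{i}"), node))

    def remove(self, node: str) -> None:
        self._ring = [(h, n) for h, n in self._ring if n != node]

    def node_for(self, session_id: str) -> str:
        if not self._ring:
            raise RuntimeError("ring is empty")
        # First ring point clockwise from the session's hash, wrapping around.
        idx = bisect.bisect(self._ring, (_hash(session_id),))
        return self._ring[idx % len(self._ring)][1]


ring = ConsistentHashRing(["web-1", "web-2", "web-3"])
print(ring.node_for("session-abc"))
```

The key property is minimal disruption: when a node leaves the cluster, sessions pinned to the surviving nodes keep their placement.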

Each node is configured with a real-time failover mechanism to instantly reroute traffic if failure occurs. This system-level redundancy increases service availability through traffic redistribution, mitigates single-point failure risks, and retains SLA guarantees even during partial infrastructure degradation.
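Heartbeat-driven failover reduces to two rules: a node is considered failed if its last heartbeat is older than a timeout, and traffic goes to the first live node in a preference order. The sketch below implements those rules with explicit timestamps so the behavior is deterministic. The 3-second timeout and the node names are hypothetical; real clusters tune the timeout to balance detection speed against false positives.

```python
class HeartbeatMonitor:
    """Treat a node as failed when no heartbeat arrives within `timeout_s` seconds."""

    def __init__(self, nodes, timeout_s: float = 3.0):
        self.timeout_s = timeout_s
        self.last_seen = {node: 0.0 for node in nodes}

    def heartbeat(self, node: str, now: float) -> None:
        self.last_seen[node] = now

    def live_nodes(self, now: float) -> list[str]:
        return [n for n, t in self.last_seen.items() if now - t <= self.timeout_s]


def route(primary: str, standby: list[str], monitor: HeartbeatMonitor, now: float) -> str:
    """Send traffic to the primary if live, otherwise to the first live standby."""
    live = set(monitor.live_nodes(now))
    for node in [primary, *standby]:
        if node in live:
            return node
    raise RuntimeError("no live node available: escalate to incident response")


monitor = HeartbeatMonitor(["db-a", "db-b"], timeout_s=3.0)
monitor.heartbeat("db-a", 10.0)
monitor.heartbeat("db-b", 10.0)
print(route("db-a", ["db-b"], monitor, now=11.0))  # primary healthy
monitor.heartbeat("db-b", 14.0)                    # db-a has gone silent
print(route("db-a", ["db-b"], monitor, now=14.5))  # fails over to standby
```

Failover is therefore not literally instantaneous: detection takes up to one timeout interval, which is why timeouts are kept short relative to SLA downtime budgets.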

For enterprise websites that manage modular applications, run geographically distributed services, or must respond to unpredictable session spikes, horizontal scaling provides a real-time service duplication strategy without rebooting or pausing active operations.

Stateless scaling compatibility and cross-node latency optimization are inherent to this model, making it suitable for microservice architectures, real-time analytics environments, or content delivery frameworks. Horizontal scaling supports elastic growth patterns that bypass the physical limits of vertical expansion and aligns with enterprise-grade development demands by preserving uptime insulation through a redundant architecture of fault-isolated compute zones.

Custom Configuration Support

Custom configuration support in enterprise dedicated server hosting is a tailored, infrastructure-level capability that enables organizations to align server architecture with unique performance, security, or compliance requirements. Unlike standardized setups that apply one-size-fits-all hardware and operating systems, custom configuration support accommodates environment-specific criteria through component-level engineering.

Server architecture is shaped to accommodate intensive applications, non-standard integrations, and compliance-locked environments that conventional hosts fail to address.

This level of configuration enables enterprise websites to operate within server environments engineered for proprietary software stacks, regulatory alignment, and continuous availability.

At the hardware layer, custom configuration support provisions precise CPU series, RAID controller schemas, ECC RAM density, and NIC bonding strategies according to the enterprise’s load architecture. On the software layer, system images are configured from scratch, injecting operating systems like VMware ESXi, Windows Server, or Linux distributions with tuned kernel modules and BIOS-level modifications.

These system-level customizations ensure the hosting environment aligns with the performance parameters and security thresholds of the enterprise workload.

The modifications serve application-layer dependencies such as legacy middleware, custom drivers, or hardware-locked binaries that demand deterministic compatibility.

The network layer receives equal depth of customization. This includes VLAN segmentation by policy tier, static IP address allocations for distributed node groups, firewall configuration templates for ACL-level security policies, and region-specific latency-optimized routing.

It allows enterprise websites to comply with jurisdictional routing mandates and internal policy enforcement across distributed server nodes.
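ACL-level firewall policies are typically evaluated top to bottom, with the first matching rule deciding the outcome and a default action when nothing matches. The sketch below applies that first-match model with Python's standard `ipaddress` module. The subnets (an application VLAN and a management VLAN), ports, and default-deny choice are hypothetical examples, not a recommended production policy.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AclRule:
    action: str                 # "allow" or "deny"
    source: str                 # source CIDR, e.g. one VLAN's subnet
    port: Optional[int] = None  # None matches any destination port


def evaluate(rules, src_ip: str, dst_port: int, default: str = "deny") -> str:
    """First-match ACL evaluation: the first rule matching source and port wins."""
    addr = ipaddress.ip_address(src_ip)
    for rule in rules:
        if addr in ipaddress.ip_network(rule.source) and rule.port in (None, dst_port):
            return rule.action
    return default


RULES = [
    AclRule("allow", "10.20.0.0/24", 5432),  # hypothetical app VLAN -> database port
    AclRule("allow", "10.99.0.0/24", 22),    # hypothetical management VLAN -> SSH
    AclRule("deny", "0.0.0.0/0"),            # explicit catch-all deny
]
print(evaluate(RULES, "10.20.0.15", 5432), evaluate(RULES, "10.20.0.15", 22))
```

Rule order matters in this model: placing the catch-all deny first would block everything, so templates usually list specific allows before broad denies.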

Infrastructure is often integrated with enterprise LDAP directories, SNMP monitoring agents, and dual-authentication stacks to meet SIEM compatibility and identity compliance requirements.

Custom configuration support is architected for enterprise-grade continuity, security, and observability across the entire server stack. Every deployment is preceded by a consultative configuration phase, where platform-specific compatibility is validated against hosting SLAs. Whether the use case is financial transaction processing, regulated data isolation, or hybrid workload bridging, each requirement maps to a physical or virtual parameter on the server.

This configuration capacity enables infrastructure alignment with the operational conditions that enterprise websites impose. Without it, regulated workloads stall, proprietary stacks fail, and the operational baseline of critical enterprise web infrastructure becomes misaligned with its runtime environment.

Why Does Enterprise Dedicated Hosting Enhance Enterprise Website Development?

Enterprise dedicated hosting enhances enterprise website development by offering an isolated, policy-configurable infrastructure tailored to the needs of performance-critical and compliance-driven systems. It serves as a controlled environment where resource allocation, access control, and deployment behavior can be tightly aligned with each stage of the development lifecycle.

As a specific model of hosting for an enterprise website, enterprise dedicated hosting is a full-stack, single-tenant infrastructure model that delivers predictable, configurable, and isolated environments for enterprise-scale development workflows.

With predictable compute power, teams can simulate load, run performance profiling, and execute version-specific builds without external interference. This staging precision allows developers to maintain staging-to-production fidelity, ensuring that test outcomes mirror live deployment behavior with minimal variance.

The hosting model grants root-level access and VLAN isolation, enabling secure build automation and infrastructure-level customization. These capabilities directly support DevOps orchestration, including CI/CD integration and automated build workflows.

For enterprise website development under compliance constraints, the infrastructure supports data locality control, system-level logging, and OS tuning. Policy-enforced configuration allows enterprise teams to implement build-time compliance enforcement for HIPAA, SOC 2, and PCI-DSS audit requirements. This matches the operational requirements where control over every layer of the stack is non-negotiable.

Scalability options (vertical, horizontal, and hybrid) help teams architect around future load without redesigning deployment logic. Enterprise dedicated hosting maintains SLA-bound consistency, ensuring application behavior, uptime thresholds, and scaling logic remain intact during traffic growth.

Enterprise dedicated hosting is part of the development architecture. It integrates directly into build, test, and deployment cycles, supporting configuration control, environment consistency, and long-term development continuity. Without this integrated infrastructure model, enterprise website development risks losing control over deployment consistency, compliance assurance, and architecture scalability.
