Scaling Enterprise Website Hosting for High Traffic Performance

Enterprise hosting scales to manage high traffic by adapting infrastructure capacity in real time to sustain performance under volumetric stress. High traffic typically manifests as spikes in concurrent sessions, increased request throughput, or sustained load levels that surpass baseline capacity thresholds.

When request concurrency intensifies or throughput demands exceed baseline thresholds, the hosting environment undergoes structural adjustments to preserve availability and responsiveness.

High traffic introduces unpredictable surges in session load, forcing enterprise hosting to reallocate compute resources, maintain elastic service boundaries, and mitigate degradation risks. Without this dynamic scaling process, enterprise hosting risks infrastructure saturation, leading to latency accumulation, resource contention, and service instability.

Enterprise hosting mitigates these outcomes by integrating load-aware capacity adaptation across compute, storage, and network layers. Elastic infrastructure components scale deployment density and redistribute traffic paths across modular server configurations to absorb demand variation. This maintains operational continuity even under sustained or spiking request volumes.

Scalable Hosting Setup for Enterprise Websites

Scalable hosting setups are architected to accommodate fluctuating resource demands through elastic compute layers and distributed deployment structures. These configurations manage load surges by provisioning capacity dynamically, enabling the infrastructure to scale in direct response to real-time system stress. Through built-in elasticity and horizontal extensibility, the setup responds to concurrency spikes and maintains performance across predefined load thresholds.

This hosting architecture prepares the infrastructure to support enterprise website demands, including unpredictable access volumes, multi-origin requests, and complex routing conditions.

It supports redundancy through zone-based replication and implements fault-tolerant strategies that ensure continuity during localized strain. The infrastructure enables modular expansion, supports stateless node design, and maintains auto-scaling readiness based on performance indicators.

Scalability metrics, such as response time under concurrent load and throughput per node, guide resource distribution policies baked into the setup. These metrics are continuously evaluated through instance orchestration systems that calibrate node allocation based on observed load patterns.

The enterprise web hosting setup abstracts deployment logic for orchestration across multi-tenant architectures, enabling consistent performance across fragmented workloads. This foundational setup enables service-layer distribution and cross-node processing, operationalized through cloud-native patterns and modular server topologies, detailed in the architectural strategies that follow.

Cloud-Native Architecture for High Availability

Cloud-native architecture in enterprise hosting environments ensures high availability by structuring infrastructure around stateless, distributed services deployed through automated orchestration layers.

Stateless services eliminate data retention at the execution layer, enabling immediate restart or relocation during traffic events without risking session loss. Each service is containerized, abstracting execution from the underlying hardware and supporting rapid replacement during node failure or system degradation.

Distributed deployment spreads service instances across multiple zones or regions, ensuring continuity even during localized infrastructure failures. Failover logic embedded in the deployment topology replicates services across physical boundaries, maintaining delivery consistency during hardware interruptions or zonal network loss.

Orchestration continuously monitors service health and triggers automated recovery processes, spawning new containers, rebalancing traffic routes, or isolating failing nodes without administrative input.

This automated responsiveness forms the core of an auto-healing infrastructure, where orchestration layers recover from node-level failures without manual intervention. The system is architected to withstand concurrency fluctuations and infrastructure volatility without disrupting application response.
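The recovery behavior described above can be pictured as a reconciliation step that compares the desired instance count with what is currently healthy. The following is a minimal sketch under stated assumptions, not any particular orchestrator's API: the `reconcile` function, the instance names, and the health map are all hypothetical.

```python
def reconcile(desired_count, instances):
    """Compare desired capacity with observed health.

    `instances` maps a hypothetical instance name to True (healthy) or
    False (failing). Returns the instances to isolate and the number of
    replacements to start.
    """
    # Failing instances are isolated so traffic stops reaching them.
    to_isolate = sorted(name for name, healthy in instances.items() if not healthy)
    healthy_count = len(instances) - len(to_isolate)
    # Start only as many replacements as needed to reach the desired count.
    to_start = max(0, desired_count - healthy_count)
    return to_isolate, to_start
```

An orchestration loop would call a step like this repeatedly, so that a failed node is replaced on the next pass without administrative input.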

In the context of enterprise cloud hosting, this architectural resilience is essential; uninterrupted performance at scale depends on built-in fault tolerance, not post-failure reaction.

This resiliency foundation underpins deployment modularity, allowing each service to scale independently, a critical prerequisite for modular server strategies that adapt to fluctuating traffic loads.

Modular Server Deployment for Traffic Flexibility

Modular server deployment in enterprise hosting environments enables traffic flexibility by segmenting infrastructure into independently managed units that respond dynamically to fluctuations in request load.

Each module, whether a stateless instance, container, or isolated service group, operates autonomously, allowing precise adjustments without system-wide disruption. Because these modules scale independently, the system can stretch or compress resource allocation in real time based on shifting traffic loads.

This structure supports an elastic response under shifting conditions: modules expand to absorb bursts, contract during lulls, and allow traffic to be rerouted around transient slowdowns. Because deployment segments operate in isolation, failures are contained within fault domains, preserving uptime across the broader hosting environment.

Load-routing granularity across micro-deployments improves throughput distribution, while node segmentation supports service-level scaling tuned to observed concurrency thresholds. Each service module adjusts its operational capacity independently, allowing service-level scaling without affecting unrelated components.

As micro-deployments act independently, they absorb and reroute variable request loads without disrupting broader system functions. This architectural responsiveness forms the operational base for higher-order traffic adaptation strategies throughout the enterprise hosting stack.

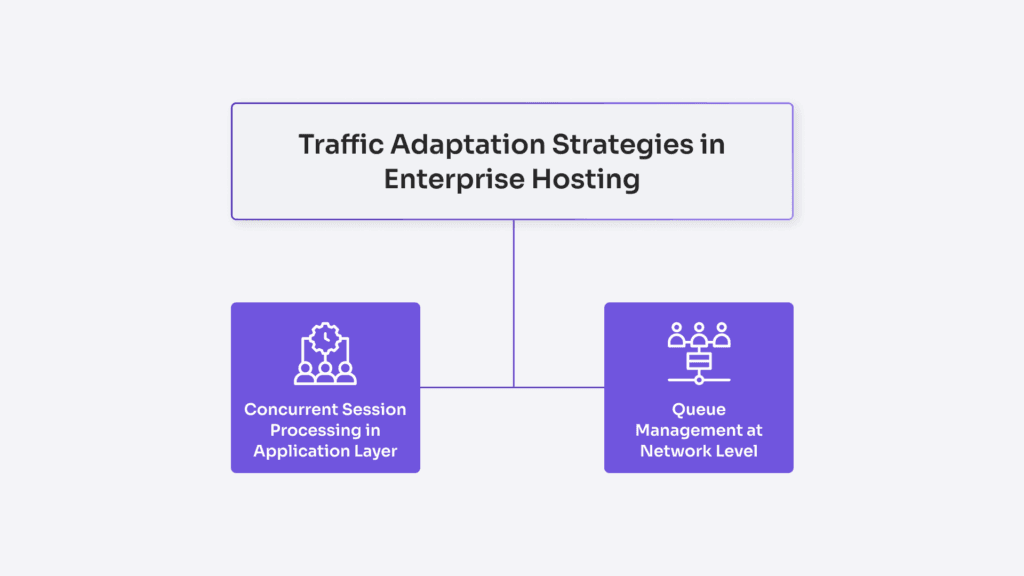

Traffic Adaptation Strategies in Enterprise Hosting

Traffic adaptation strategies in enterprise hosting are the system-level responses triggered by fluctuating request loads and concurrency thresholds. These strategies are reactive behaviors that operate within the runtime environment to preserve responsiveness and control latency. During traffic surges, the application layer adjusts session processing, manages parallel request execution, isolates heavy consumers, or throttles based on priority.

Concurrently, the network layer moderates request flows by buffering queues or temporarily segmenting load pathways. These behaviors form a coordinated response across layers to absorb volatility without compromising throughput.

Adaptation is not limited to scale; it involves balancing execution timing, rerouting request paths through adaptive routing, and holding queued data until processing capacity stabilizes. Such measures mitigate the impact of load shocks and maintain operational continuity across the hosting stack.

Concurrent Session Processing in Application Layer

Concurrent session processing within the application layer enables enterprise hosting environments to manage high-volume request traffic by isolating and distributing execution threads across controlled processing pools. As concurrent requests arrive in dense bursts, execution handlers encounter rising thread allocation pressure, where unchecked concurrency risks thread pool saturation, blocked execution, and latency amplification.

To preserve throughput, the session processor allocates incoming requests through a preconfigured thread pool, where each handler operates within isolated execution contexts to prevent queue blocking and response delays.

The application layer’s concurrency logic segments session streams by entry rate and processing type. Stateless handlers enable horizontal load balancing, while session-bound operations apply stickiness to maintain execution continuity. Session queues are segmented to prevent dominant requests from starving the pool.
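One way to picture segmented session queues is to give each session type its own bounded worker pool, so that heavy requests cannot occupy threads reserved for lightweight ones. The sketch below uses Python's standard `ThreadPoolExecutor`; the pool names, pool sizes, and the `submit` and `current_lane` helpers are assumptions made for illustration.

```python
import threading
from concurrent.futures import Future, ThreadPoolExecutor

# Hypothetical pool sizes; real values would come from capacity testing.
POOLS = {
    "light": ThreadPoolExecutor(max_workers=8, thread_name_prefix="light"),
    "heavy": ThreadPoolExecutor(max_workers=2, thread_name_prefix="heavy"),
}


def submit(session_type, handler, *args) -> Future:
    """Route a request to the pool reserved for its session type."""
    return POOLS[session_type].submit(handler, *args)


def current_lane() -> str:
    """Report which pool's worker thread is running the caller."""
    return threading.current_thread().name.split("_")[0]
```

Because each pool has its own internal queue, a backlog of heavy work delays only other heavy work, while the light pool keeps serving short requests.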

Segregated queues balance processing time across heterogeneous session types, avoiding bottlenecks from resource-heavy execution paths. In high-load states, adaptive rate limiting is applied at the session ingress point to prevent resource depletion beyond the capacity limits of individual nodes. Load-bound execution cycles are scheduled based on concurrency weight, allowing processing runtimes to prioritize overall throughput over the number of sessions handled simultaneously.
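Rate limiting at the session ingress point is often built on a token bucket. The sketch below shows a fixed-rate bucket as a starting point; an adaptive variant would adjust `rate_per_sec` from live load signals. The class name, its fields, and the explicit `now` timestamp are assumptions chosen to keep the example deterministic.

```python
from dataclasses import dataclass


@dataclass
class TokenBucket:
    """Hypothetical ingress limiter: admits a request only if a token is available."""

    rate_per_sec: float   # assumed refill rate
    capacity: float       # assumed maximum burst size
    tokens: float = 0.0
    last_ts: float = 0.0

    def allow(self, now: float) -> bool:
        # Refill in proportion to elapsed time, capped at the burst capacity.
        elapsed = max(0.0, now - self.last_ts)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate_per_sec)
        self.last_ts = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False
```

In a running service the caller would pass `time.monotonic()` as `now`; rejected requests could be queued, delayed, or answered with a retry hint.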

By sustaining balanced thread allocation under peak concurrency, the application layer preserves session integrity and prevents degradation of response time. If session concurrency exceeds application-layer absorption capacity despite these controls, the overflow must be offloaded to the network queue layer, where traffic-level queuing strategies address the unresolved backlog.

Queue Management at Network Level

Queue management at the network level acts as a primary control layer for traffic surges in enterprise hosting environments. When high request concurrency saturates available processing capacity, the network edge buffers and regulates the flow before it escalates to the application tier.

Ingress queues capture the initial excess, buffering incoming packets and deferring their passage based on predefined delay windows and throughput caps. This response decouples burst intensity from downstream system load, holding traffic temporarily at controlled intake points.

Rate control is enforced through buffer-based throttling mechanisms that slow the ingress rate once queue depth thresholds are exceeded. When packet intake approaches saturation, prioritization rules reorder traffic based on service class or request criticality, filtering less urgent flows to preserve responsiveness for essential paths. The network enforces timeout logic on queued packets that exceed latency thresholds, discarding stalled sessions to prevent congestion loops.
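The depth cap, priority ordering, and timeout rules described above can be combined in a small bounded priority queue. This is a simplified sketch: it rejects new items at the depth limit rather than gradually slowing intake, and the `IngressQueue` class, its parameters, and the priority numbering (lower number means more urgent) are assumptions.

```python
import heapq
from itertools import count


class IngressQueue:
    """Hypothetical bounded ingress queue with priorities and wait timeouts."""

    def __init__(self, max_depth, max_wait):
        self.max_depth = max_depth   # assumed queue depth threshold
        self.max_wait = max_wait     # assumed latency threshold in seconds
        self._heap = []
        self._seq = count()          # tie-breaker keeps FIFO order per priority

    def offer(self, item, priority, now):
        """Enqueue an item unless the depth limit is reached."""
        if len(self._heap) >= self.max_depth:
            return False
        heapq.heappush(self._heap, (priority, next(self._seq), now, item))
        return True

    def poll(self, now):
        """Return the most urgent item that has not timed out, or None."""
        while self._heap:
            _, _, enqueued_at, item = heapq.heappop(self._heap)
            if now - enqueued_at <= self.max_wait:
                return item
            # Items that waited too long are discarded instead of served late.
        return None
```

A caller that receives `False` from `offer` is the natural place to apply overflow routing to another entry point.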

This layer enforces queue depth constraints as safeguards, preventing unbounded memory allocation and uncontrolled backpressure. By regulating the intake at this layer, the network preempts overload conditions that would otherwise propagate downstream into the application stack.

When queue capacity nears exhaustion, overflow routing protocols redirect traffic to alternative regional nodes or mirrored entry points, preserving continuity while diffusing localized pressure. This delegation is a form of structured surge containment, engineered into the infrastructure to absorb disruption.

Such queue mechanisms insulate the application tier by regulating packet flow at the network perimeter, shielding internal systems from the volatility of direct load exposure. They regulate packet flow not only by volume but by timing and order, protecting the internal service architecture from concurrency-induced instability.

By holding, filtering, and rerouting inbound request traffic, queue management at the network layer preserves throughput equilibrium and maintains system responsiveness under rising demand, creating the necessary stability for real-time resource adaptation in the tiers behind it.

Performance Stability for Traffic Peaks

Performance stability is a measurable, enforced property of enterprise hosting systems under peak traffic load. It is a critical requirement because the infrastructure must absorb load volatility without breaching latency thresholds or interrupting service execution.

Stability in this context refers to the system’s capacity to preserve throughput integrity, maintain request execution times within strict latency variance margins, and avoid resource saturation across CPU, memory, and I/O channels.

Traffic peaks create hostile operational conditions, marked by sudden bursts, irregular request densities, and concurrency surges that pressure allocation layers. These events destabilize infrastructure by accelerating exhaustion of finite processing pools and triggering queuing delays that compound response times. Without an enforced mechanism, baseline performance metrics begin to drift, latency expands beyond acceptable margins, throughput contracts, and session handling becomes inconsistent.

Performance stability prevents this degradation through engineered system behavior that absorbs and adapts. It enforces execution consistency, so that requests are handled uniformly despite traffic irregularities, by actively provisioning compute, storage, and networking resources in response to observed load characteristics. It mitigates spike-induced volatility by adjusting infrastructure posture to match current traffic conditions. This sustains service uptime under volatile conditions, turning peak traffic from a threat into a manageable operational state.

Such stability is enforced through two interdependent mechanisms: real-time resource allocation that responds to per-request load shifts, and autoscaling orchestration that extends infrastructure capacity when concurrency thresholds are exceeded.

Real-Time Resource Allocation Per Request Load

Real-time resource allocation in enterprise hosting environments is the dynamic provisioning of compute, memory, and execution bandwidth based on the specific load characteristics of each incoming request. Rather than assigning fixed resource units to all operations, the infrastructure calculates request weight and assigns threshold-based execution paths at runtime.

The system distinguishes between transactional queries, data-heavy operations, and low-latency API calls, each routed through differentiated execution channels according to pre-established request weighting models.

Allocation begins with real-time request classification, separating lightweight stateless calls from resource-intensive workloads such as batch submissions or file handling. Each request type triggers a tailored provisioning routine aligned with its compute intensity and execution cost.

The system executes predictive resource estimation to determine execution cost, considering current system load, memory state, and CPU availability. Lightweight requests are assigned low-overhead compute threads with reduced I/O access, while complex tasks trigger threshold-based assignment of isolated execution lanes to avoid cross-request interference.
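Request weighting and threshold-based lane assignment might look like the sketch below. The request kinds, the weight table, the one-point-per-megabyte rule, and the CPU cutoff are all illustrative assumptions rather than values taken from a real system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    kind: str            # e.g. "api", "query", "batch", "upload" (assumed labels)
    payload_bytes: int


# Hypothetical base weights per request kind.
KIND_WEIGHT = {"api": 1, "query": 2, "batch": 8, "upload": 8}
ISOLATION_THRESHOLD = 10


def request_weight(req):
    """Estimate execution cost: base weight plus one point per megabyte."""
    return KIND_WEIGHT.get(req.kind, 4) + req.payload_bytes // 1_000_000


def choose_lane(req, cpu_utilization):
    """Pick an execution lane from request weight and current CPU load."""
    # Under high CPU load the threshold is halved, so more requests are
    # isolated and cross-request interference in the shared pool is reduced.
    threshold = ISOLATION_THRESHOLD // 2 if cpu_utilization > 0.8 else ISOLATION_THRESHOLD
    return "isolated" if request_weight(req) >= threshold else "shared"
```

The same request can therefore land in different lanes depending on system state, which is the point of evaluating cost at runtime instead of assigning fixed resource units.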

This runtime provisioning logic adjusts allocation granularity through compute micro-allocation based on threshold signals from live metrics such as CPU saturation levels, memory fault rates, and active thread pool distribution. The system balances against load surges by scaling provisioning depth per request, rather than overcommitting shared pools. As resource pressure fluctuates, allocation logic recalibrates in milliseconds, maintaining throughput and latency bounds without premature scaling triggers.

Per-request adaptation not only prevents bottleneck propagation during traffic spikes but also preserves predictable performance under concurrency stress. These mechanics also provide foundational insights for performance testing for enterprise hosting, where load simulations rely on accurate modeling of real-time provisioning behavior.

The system’s responsiveness is preserved through coordinated execution slot assignment, setting the stage for orchestration-based autoscaling in subsequent capacity shifts.

Autoscaling via Orchestration Tools

Autoscaling via orchestration tools enables enterprise hosting systems to adjust infrastructure capacity based on real-time system load and performance metrics. It is an integral runtime mechanism that preserves system responsiveness by aligning infrastructure resources with traffic volatility.

Orchestration logic operates continuously across the runtime environment, ingesting live indicators such as CPU saturation, memory utilization, and inbound request volume to evaluate infrastructure sufficiency against defined thresholds or predictive load patterns.

When resource strain surpasses acceptable ranges, the orchestration layer initiates scaling actions: provisioning new compute nodes, spinning up containers, or replicating service units to absorb demand. These provisioning actions execute asynchronously, without administrative prompts, expanding the infrastructure boundary only as far as required to restore stability.

Conversely, when traffic eases and excess capacity emerges, the same logic retires surplus units, minimizing cost exposure and preventing resource waste. The behavior remains self-regulating, scaling infrastructure dynamically in response to systemic demand rather than arbitrary schedules.
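A common way to turn a metric into a scaling decision is a proportional rule: scale the replica count by the ratio of observed load to target load. The sketch below follows that idea, which resembles the formula documented for the Kubernetes Horizontal Pod Autoscaler; the function name, the default bounds, and the tolerance band are assumptions for illustration.

```python
import math


def desired_replicas(current, observed, target,
                     min_replicas=1, max_replicas=20, tolerance=0.1):
    """Proportional scaling: replicas grow or shrink with observed/target."""
    ratio = observed / target
    # Ignore small deviations so capacity does not flap around the target.
    if abs(ratio - 1.0) <= tolerance:
        return current
    wanted = math.ceil(current * ratio)
    # Clamp to the configured bounds to cap cost and keep a minimum footprint.
    return max(min_replicas, min(max_replicas, wanted))
```

For example, four replicas at 90% average CPU against a 60% target yields six replicas, while six replicas at 30% shrinks to three.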

By triggering infrastructure shifts in direct response to live system health metrics, autoscaling preserves service responsiveness under high concurrency, traffic bursts, or sustained peak loads. This adjustment process depends on continuous metric acquisition from the monitoring layer, which must detect and transmit shifting load patterns with minimal delay to uphold the precision of orchestration decisions. Such tight integration between monitoring feedback and orchestration logic forms the foundation of responsive enterprise hosting.

Monitoring Systems for Load Responsiveness

Monitoring systems in enterprise hosting environments are real-time, system-embedded processes that observe load conditions and generate actionable data for autoscaling, load balancing, and failure response.

These systems operate as embedded runtime components that monitor CPU load, memory consumption, active request counts, and queue depths across the hosting stack. Such metrics serve as input vectors for detecting system stress and interpreting real-time load behaviors. This telemetry is interpreted in real time to identify usage-to-capacity deltas, traffic trend acceleration, and signs of degraded performance under concurrent load.

Monitoring systems detect threshold-exceeding events that signal imminent saturation or processing delays. As system stress indicators breach predefined limits, the monitoring logic feeds data into orchestration pipelines or alert layers, initiating autoscaling decisions, temporary traffic rerouting, or load redistribution to preserve throughput and latency under load pressure.

Traffic volatility is not handled through assumption but through active observation. Dynamic baselining models evaluate usage metrics against historical trends, with detection models identifying sustained surges, sudden peaks, or irregular load intervals. These insights activate feedback loops that adapt resource allocation across compute, network, and storage layers. Without real-time telemetry and threshold logic, enterprise hosting environments lack the agility to maintain performance under variable demand.
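A dynamic baseline can be approximated with an exponentially weighted moving average (EWMA) that follows recent history, with a surge flagged when a sample exceeds the baseline by some factor. This is one simple technique among many, and the class name, smoothing factor, and surge factor below are assumed values.

```python
class EwmaBaseline:
    """Hypothetical surge detector built on an EWMA baseline."""

    def __init__(self, alpha=0.2, surge_factor=1.5):
        self.alpha = alpha                # weight given to the newest sample
        self.surge_factor = surge_factor  # how far above baseline counts as a surge
        self.baseline = None

    def observe(self, value):
        """Return True if `value` is a surge, then fold it into the baseline."""
        if self.baseline is None:
            self.baseline = value
            return False
        is_surge = value > self.baseline * self.surge_factor
        # The baseline adapts, so a sustained higher level becomes the new normal.
        self.baseline = self.alpha * value + (1 - self.alpha) * self.baseline
        return is_surge
```

Because the baseline keeps adapting, a level that first registers as a surge stops triggering once it persists, which separates sudden peaks from a new steady state.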

The responsiveness of the system is the product of this telemetry-driven workflow. Monitoring generates load-aware triggers and directs downstream workflows like capacity scaling, traffic shifting, or service degradation mitigation. These systems form the diagnostic foundation for usage-triggered alert mechanisms and metric-based capacity scaling decisions.

Usage-Triggered Alerts in Hosting Stack

Usage-triggered alerts in the hosting stack are generated when monitored metrics such as CPU usage, session concurrency, memory saturation, or request queue depth exceed defined operational thresholds.

These alerts act as dynamic system triggers, not passive logs, and are embedded directly within the infrastructure’s orchestration layer. Each alert fires when a monitored attribute, whether governed by static baselines or adaptively tuned thresholds, crosses its defined boundary.

Such a violation activates real-time signaling that directs the orchestration pipeline to initiate autoscaling, resource reallocation, or throttling routines in response to the detected spike. The alert engine is engineered to respond within milliseconds, forming a high-speed feedback loop that intercepts resource exhaustion before saturation cascades can disrupt runtime performance.
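The mapping from a threshold breach to an orchestration directive can be sketched as a small rule table. The metric names, threshold values, and action labels below are hypothetical and stand in for whatever a real alert engine would define.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertRule:
    metric: str
    threshold: float
    action: str   # hypothetical directive passed to the orchestration pipeline


# Assumed rules for illustration only.
RULES = [
    AlertRule("cpu_utilization", 0.85, "scale_out"),
    AlertRule("queue_depth", 500, "throttle_ingress"),
    AlertRule("memory_utilization", 0.90, "reallocate_memory"),
]


def evaluate(sample):
    """Return the directives triggered by one metrics sample (a dict)."""
    # A metric missing from the sample is treated as zero and never fires.
    return [rule.action for rule in RULES
            if sample.get(rule.metric, 0.0) > rule.threshold]
```

Each returned action is a directive rather than a log entry, matching the idea that alerts act as triggers and not as passive records.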

By converting anomaly boundary detections into immediate execution directives, these metric-based decision triggers ensure hosting stack responsiveness under volatile load conditions. This fast-reacting layer precedes broader metric aggregation systems that support long-term capacity forecasting and deeper performance analysis.

Live Metrics Tracking for Capacity Decisions

Live metrics tracking in enterprise hosting systems supports capacity decisions by continuously analyzing traffic load, resource utilization, and infrastructure saturation in real time. The telemetry layer aggregates time-series load indicators, including CPU usage, memory footprint, active request volume, response latency, and per-node workload distribution, to inform downstream orchestration decisions.

Operating without interruption, this telemetry gives orchestration engines a current view of operational strain and throughput trends.

As the system observes concurrency increases and load variability, it calculates resource saturation points and projects near-future capacity thresholds. These projections drive proactive infrastructure scaling, horizontal or vertical, based on utilization saturation models rather than reactive guesswork.

When request volume begins to rise beyond pre-set concurrency limits, the system triggers compute expansion based on calculated scaling demand curves, derived from previous patterns of ramp-up duration and resource drain velocity.

Each capacity adjustment feeds into a feedback loop: live system state triggers provisioning at updated thresholds, provisioning shifts system balance, and the updated state re-informs scaling logic. The orchestration logic uses this loop to keep infrastructure size aligned with active system pressure, avoiding premature expansion during temporary bursts or sluggish response under prolonged spikes.
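One simple way to avoid expanding on a temporary burst is to require several consecutive breaches before signaling a scale-out. The sketch below illustrates that gate; the `ScaleGate` class, the concurrency limit, and the `sustain` count are assumptions, and a production loop would typically also apply a cooldown period.

```python
class ScaleGate:
    """Hypothetical gate: signal scale-out only after sustained breaches."""

    def __init__(self, limit, sustain=3):
        self.limit = limit       # assumed concurrency limit
        self.sustain = sustain   # consecutive breaches required to act
        self.breaches = 0

    def update(self, concurrency):
        """Feed one sample; return True when expansion should be triggered."""
        if concurrency > self.limit:
            self.breaches += 1
        else:
            # A single sample back under the limit resets the streak.
            self.breaches = 0
        if self.breaches >= self.sustain:
            self.breaches = 0    # start counting again after acting
            return True
        return False
```

Feeding the samples 120, 90, 130, 140, 150 against a limit of 100 triggers only on the last one, since the early spike was not sustained.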

Live metrics tracking prevents misalignment between resource supply and real-time demand. By relying on real-time resource graphing and throughput-driven forecasting, the system sustains optimal alignment between infrastructure capacity and observed demand.

Without live metrics tracking, orchestration logic lacks empirical signals, leading capacity decisions to drift toward inefficiency or outage risk. The orchestration logic correlates CPU heat maps, memory exhaustion rates, and connection queues with projected traffic progression to sustain performance equilibrium under unstable load conditions. Metric-driven analysis is the infrastructure’s method for sustaining responsiveness without structural overreach.
