Multi-Region Hosting Deployment Strategy

Table of Contents

Multi-region hosting deployment supports the enterprise website by distributing infrastructure across availability zones. This enables global service delivery while managing latency and enforcing uptime. Hosting deployment defines where and how resources operate, forming a core layer of enterprise web hosting strategy.

Enterprise websites must function reliably across various jurisdictions, withstand traffic spikes, and remain operational despite infrastructure failures. To do this, hosting strategies reduce single-region dependency through geographic redundancy. Jurisdictional mapping and latency thresholds shape content placement, routing, and compliance enforcement.

Availability requires structural alignment with user proximity and infrastructure capacity. Uptime depends on load balancing, SLA targets, and legal constraints. Each region applies latency rules and triggers redundancy, traffic partitioning, and auto-scaling based on operational demands.

At scale, the enterprise website distributes content rather than localizing it. Multi-region deployment enables regional execution of global service logic. Hosting adapts to user distribution and compliance, aligning redundancy, provisioning, and failover under a unified SLA framework.

Region Selection Criteria

Selecting a hosting region for an enterprise website is derived from the global delivery architecture. Each region must align with the enterprise website’s deployment viability, defined by the density of enterprise users and the readiness of infrastructure across candidate zones.

User proximity density governs front-end responsiveness. Regions with high user cluster concentrations reduce latency and enable tighter interaction loops. These regions define proximity thresholds that qualify deployment zones by geographic availability and service adjacency.

Infrastructure availability determines backend viability. Regional scope, including compute, storage, and redundancy, constrains hosting feasibility due to resource locality. Locations without tier-aligned infrastructure are excluded from deployment.

Feasibility extends beyond availability; regional hosting requires edge network saturation, failover capability, and server distribution. If infrastructure topology fails to support these, the region is disqualified.

Qualified regions meet both front-end proximity and backend infrastructure thresholds. These predicates map to the enterprise website’s hosting viability logic and support global service continuity.

User Proximity Density

Proximity density is a volume-weighted user aggregation within a specified response radius, aligning directly with SLA latency and throughput thresholds.

The enterprise website determines hosting region eligibility based on user proximity density, which is a metric that measures the degree of geographic concentration of user clusters within latency-critical zones.

Hosting regions activate only when these clusters meet defined proximity-weighted thresholds. This density-calibrated model ensures that region activation is performance-driven, rather than spatially assumed.

High-density clusters, especially those within sub-100ms latency zones, qualify hosting regions by minimizing host-user distance, optimizing signal response paths, and improving packet delivery. The enterprise website assesses the balance between user volume and network response capacity to inform region activation and resource allocation. This evaluation governs scalability parameters and supports efficient routing infrastructure.

Regions matching proximity metrics trigger deployment within the multi-region strategy. These zones anchor failover points and traffic redirection paths, aligning redundancy with traffic baselines.

Proximity density thus governs both activation and scaling logic, ensuring SLA continuity and consistent content delivery during periods of high demand. Hosting decisions rely on geographic overlap tied to volume, positioning density as a key factor in deployment gatekeeping.

Infrastructure Availability

Enterprise website deployments require hosting regions with infrastructure availability that meets strict operational thresholds. This readiness reflects deployment-grade conditions across compute saturation, Tier-1 network provisioning, zonal separation, and redundant power systems.

A hosting region qualifies only if its provisions have failover compatibility and resource elasticity at scale. Regions lacking uptime infrastructure or multi-zone support are disqualified, even if they align with user proximity or demand patterns.

Infrastructure availability acts as a filtering predicate in region selection, underpinning SLA objectives and deployment stability. Absent these thresholds, hosting introduces risk to continuity and service guarantees.

This attribute governs resource orchestration for dynamic scaling and traffic distribution. It determines compatibility with active-active and active-passive architectures by supporting infrastructure redundancy, elastic provisioning, and high-throughput connectivity.

Compliance Enforcement Scope

The enterprise website must deploy infrastructure only in regions where enforceable compliance frameworks govern operations. These frameworks define a legal constraint perimeter, set by regulatory jurisdiction and enforcement authority, that qualifies a region for hosting.

Hosting legality depends on enforcement reach, not just regulatory origin. For example, GDPR applies only where the EU can enforce it. A region adopting GDPR-like terms without enforceable oversight doesn’t meet the compliance threshold. This gap between declared policy and legal applicability separates valid from invalid deployment zones.

The compliance domain governs infrastructure eligibility. It filters out regions lacking enforceable oversight and defines where data can reside, how it is transferred across borders, and which partners are eligible to participate. This regulatory overlay, shaped by legal reach, ensures infrastructure activation remains within the law.

Ignoring the enforcement scope invites jurisdictional conflict and legal invalidation. Cross-border deployments, in particular, expose policy conflict zones that must be resolved before any commitment is made. Regions must be vetted for enforcement compatibility.

Compliance enforcement dictates the hosting strategy; only regions within enforceable perimeters qualify.

Jurisdiction Mapping Strategy

The jurisdiction mapping strategy is a predicate logic layer within the enterprise website’s compliance architecture. It operationalizes regulatory enforcement scopes by mapping abstract compliance requirements to concrete hosting regions using predefined legal and policy rule sets.

The enterprise website maps hosting regions to enforcement groups aligned with specific compliance frameworks, such as data protection laws, cross-border treaties, or sovereignty protocols. Regions are categorized by legal control zones and grouped via a policy-region schema. Aligned regions form enforcement sets that dictate where data can reside and how it can be processed or transferred. This region-policy association defines host-region qualification parameters.

The mapping serves 3 core functions. First, it filters out non-qualifying regions based on the enterprise’s compliance scope. Second, it governs provider validation by linking infrastructure presence to regulatory obligations through the mapping of legal boundaries. Third, it manages fallback orchestration: when enforcement conditions shift, jurisdiction mappings update dynamically to reroute deployments or adjust controls.

These updates use regulatory scope resolution logic, prioritizing legal boundaries based on jurisdictional reach and enforcement precedence.

Such a strategy enables consistent, compliant hosting by maintaining an adaptive mapping matrix. It resolves overlaps and extraterritorial claims while aligning enforcement overlays with evolving regulatory scopes. The result is deployment legality, risk control, and seamless cross-region orchestration, all mapped through a logical framework.

Data Residency Constraints

Data residency constraints enforce non-negotiable boundaries on where enterprise website user data must be stored, processed, and retained. These are binding legal mandates defined by the scope of compliance enforcement and operationalized through jurisdiction mapping strategies.

Laws such as GDPR, CCPA, and PDPA enforce geographic data locality and restrict unauthorized cross-border data transfers. These regulations bind infrastructure to sovereign boundaries and prohibit cross-region replication. Hosting regions operate as jurisdictional zones with explicit legal constraints.

Enterprise hosting compliance requires architectural commitments, such as region-specific data stores, sovereign isolation layers, and legally segmented duplication nodes, forming lawful storage region architectures that comply with cross-border policy restrictions.

For enterprise websites, residency rules often take precedence over latency tuning, scalability logic, and even redundancy strategies. All deployment choices, such as provider selection, storage orchestration, and failover routing, must comply. No active or passive replica may breach physical data boundaries. These form a data immobility policy, governed by jurisdictional storage compliance.

Once mapped, residency rules bind hosting behavior. They enforce localization, prohibit global failovers that break data segregation clauses, and reshape how infrastructure is provisioned and certified.

Failure to comply nullifies deployment legality. Residency constraints govern infrastructure decisions at every layer of the enterprise website’s hosting model.

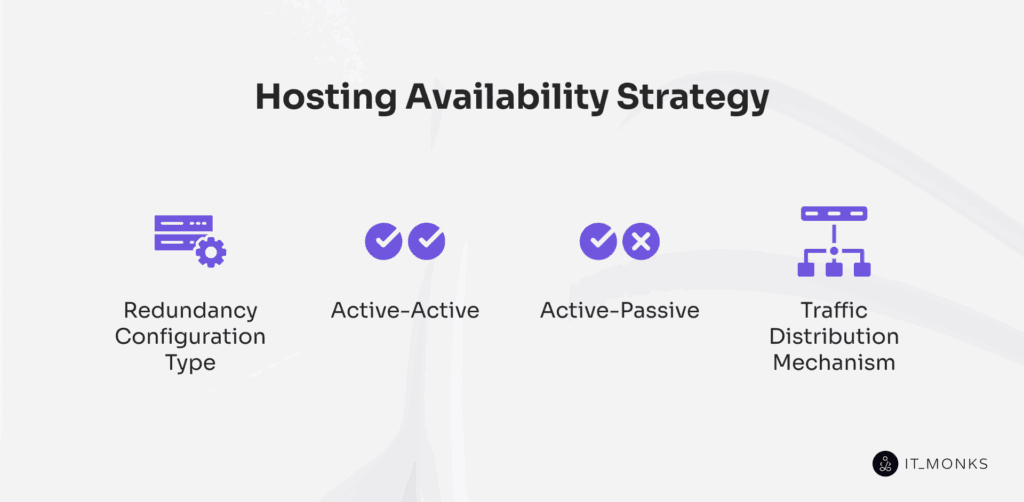

Hosting Availability Strategy

To maintain service continuity across regions, the enterprise website employs a hosting availability strategy built on fault-tolerant infrastructure. Availability is a design condition engineered to meet SLA-bound performance under disruption, latency shifts, or regional outages.

This strategy is informed by regional-level factors, including user proximity, infrastructure saturation, and compliance overlays. These determine initial deployment zones and ensure parity in availability zones through adherence to jurisdictional requirements. From this base, the enterprise website structures availability around three operational pillars: redundancy configuration, failover orchestration, and traffic distribution logic.

Redundancy configuration defines how hosting roles are split or mirrored across zones. These are live operational modes, tuned for concurrent or failover activity based on service thresholds.

Failover orchestration sustains uptime enforcement by rerouting requests during regional degradation. It follows predefined routing table logic to shift load to designated failover regions without service disruption.

Traffic distribution applies ruleset-based load shifting in real time. It aligns user demand with available resources, maintaining latency targets and throughput consistency across zones.

These elements form a high-availability model that enables the enterprise website to respond to disruption, scale effectively, and uphold SLA targets.

Redundancy Configuration Type

Redundancy configuration is the availability model an enterprise website defines to orchestrate hosting region behavior within its hosting availability strategy. It governs how service continuity is maintained through structured deployment across primary and secondary zones.

In this context, redundancy involves real-time synchronization, load replication behavior, and regional failover design. Each configuration defines how secondary regions operate, either in parallel with primaries or in standby mode for failover.

These setups segment regions into operational roles, determining replication delay, synchronous operation, and failover activation logic. They shape how traffic is distributed and how quickly a secondary region becomes active in response to disruptions.

The selected configuration reflects latency budgets, SLA severity, and infrastructure maturity across different regions. Two models dominate: Active-Active and Active-Passive, each representing a distinct approach to regional orchestration and service resilience.

Active-Active

An active-active redundancy configuration sustains the enterprise website’s availability strategy by maintaining synchronized service presence across multiple hosting regions. Each node operates in parallel, handling live traffic instead of remaining on standby. This structure ensures fault tolerance through concurrent operation, not fallback.

When a hosting region is disrupted, user requests reroute to other regions without triggering failover procedures. The routing table logic distributes sessions dynamically, based on latency, health, or load thresholds. This prevents regional dependency and eliminates recovery delays; every node remains fully engaged.

Such a configuration reinforces zone-level parity and embeds resilience into the enterprise website’s infrastructure. By orchestrating simultaneous operation across regions, it absorbs traffic surges, withstands node failures, and minimizes bottlenecks. SLA-bound uptime objectives depend on this continuity, where availability is a built-in property.

Active-Passive

Active-passive is a redundancy configuration type used by the enterprise website to designate a single hosting region as active, while one or more passive replicas remain in a warm standby state. These standby regions maintain asynchronous state replication from the active node.

The enterprise website triggers failover through health check failures, manual promotion, or other system-defined events. When activated, a passive replica is promoted to the primary node, initiating traffic redirection and restoring service during a defined recovery time objective (RTO). This activation protocol may be automated or manual.

By avoiding concurrent load processing, Active-passive reduces infrastructure cost while ensuring availability preservation. It’s a resource conservation strategy suited for deployments where service continuity is essential but full regional concurrency isn’t required.

Traffic Distribution Mechanism

The enterprise website’s traffic distribution mechanism directs incoming requests across hosting regions in real time, adapting to redundancy configuration states. In an Active-passive setup, it prioritizes a single active region for all traffic while keeping passive regions on standby, monitored through continuous health-check logic.

When latency spikes, health anomalies, or availability metrics breach routing trigger conditions, the mechanism activates failover redirection. Requests are rerouted using latency-aware DNS logic or proximity-based paths, allowing traffic to be shifted seamlessly to the standby region. This behavior ensures service continuity and supports the enterprise website’s hosting availability strategy.

Unlike active-active configurations, the orchestration layer here doesn’t balance load across zones. It reserves passive capacity until failure is detected. During failover, traffic allocation patterns are calibrated to restore stability quickly while staying within SLA thresholds. This routing logic strikes a balance between uptime assurance and efficient resource utilization.

Hosting Resource Scalability Logic

Scalability behavior is conditionally executed; logic orchestrates both regional and cross-regional expansion, shaped by redundancy mode. In active-active setups, it balances load using synchronized capacity states. In Active-passive, it queues provisioning triggers to activate dormant resources when elasticity thresholds are breached. All expansion decisions consider proximity-weighted traffic, zone-level resource debt, and cooldown intervals.

Provisioning operates under a centralized autoscaling policy that merges quota governance with metric triggers. Planned bursts and unexpected surges are handled differently: burst initiation bypasses cooldowns under pre-approved limits, while threshold-based scaling follows elasticity caps.

These governed patterns define how the system reallocates or scales infrastructure across its architecture, ensuring performance without overcommitment.

Auto-Scaling Thresholds

Under the governance of hosting resource scalability logic, the enterprise hosting scaling model operates on auto-scaling thresholds that define a performance-bound trigger within the enterprise website’s hosting deployment. It activates when specific telemetry conditions, such as CPU saturation flags, request queue length, response time thresholds, or memory utilization, breach defined limits in a given hosting region.

Such threshold matrix logic continuously evaluates these metrics to identify scaling inflection points, where projected load intersects with the region’s resource usage ceiling. A provision threshold breach triggers the orchestration system to deploy new instances or redistribute traffic through the redundancy layer. Autoscale decisions are capped by cooldown windows, regional resource ceilings, and SLA-aligned latency tolerances.

Each threshold is region-specific, shaped by infrastructure capacity, redundancy schema, and SLA constraints. Threshold sets are recalibrated to reflect shifts in usage patterns or compliance limits.

A rapid RPS spike in a dense user proximity zone may trigger autoscale earlier than expected, activating short-cycle provisioning as a fallback. Each trigger event recalibrates the enterprise website’s elasticity model against regional resource constraints and SLA-defined latency targets.

Burst Resource Provisioning

Burst resource provisioning is a reactive allocation mechanism triggered when the enterprise website breaches auto-scaling thresholds and risks SLA-critical latency or availability degradation. It activates a temporary provisioning layer that overrides standard caps to allocate compute and network resources across hosting regions rapidly.

This mechanism addresses traffic anomalies, such as RPS surges, cascading failover pressure, or flash-sale concurrency. Provisioning occurs through pre-warmed instances, oversized standby deployments, or routing expansion, bypassing baseline scaling logic.

Burst activations are governed by rapid provisioning caps, time-bound burst envelopes, and cooldown intervals to limit scope and cost. Once emergency metrics stabilize, override logic throttles resource flow and returns the system to baseline elasticity.

Burst provisioning functions as a fallback, executed only under SLA-bound conditions to preserve availability during critical load events.

Deployment Goal Alignment

Deployment goal alignment structures the enterprise website’s hosting strategy around defined operational goals, including performance, availability, compliance, and cost control. Each infrastructure choice, region placement, routing logic, redundancy type, and scaling thresholds must directly satisfy one or more of these outcomes.

Alignment turns architecture into an outcome-driven system where each configuration element is governed by a specific operational constraint. Latency targets dictate region placement, ensuring user proximity. SLA mandates structure failover logic to maintain uptime guarantees. Compliance requirements enforce jurisdictional boundaries and data residency policies. Cost ceilings limit resource provisioning, while risk tolerance shapes redundancy design

Without goal linkage, deployment is unverifiable; no SLA, compliance, or performance claim can be audited. Alignment ensures that every infrastructure variable is traceable to a specific objective: sub-200ms latency drives proximity mapping, 99.999% uptime enforces redundancy choreography, and GDPR dictates storage placement.

Deployment alignment maps regions to demand zones, legal domains, and SLA enforcement areas. Scaling logic expresses resiliency thresholds tied to critical site functions. Every component becomes part of a measurable, auditable architecture.

This traceability enables audit defense, SLA enforcement, and resource planning. It separates arbitrary provisioning from outcome-bound orchestration. SLA objectives formalize this alignment, translating goals into enforceable service metrics.

SLA Objectives

SLA objectives define the mandatory service thresholds that the enterprise website enforces through its multi-region deployment.

Availability guarantees govern regional redundancy. A 99.999% uptime objective maps directly to active-active or active-passive configurations, depending on zone-level fault tolerance. Each region functions as its own SLA enforcement zone, architected to meet uptime independently, which is a foundation of any robust uptime SLA for enterprise hosting.

Latency ceilings set strict response time targets, such as sub-200ms, and dictate routing behavior. Traffic is directed based on proximity and response efficiency, not cost. SLA-bound latency shapes the entire routing logic.

Recovery time objectives (RTOs) determine how quickly a failover must occur, e.g., 30 seconds, requiring pre-provisioned nodes to be ready to activate instantly. Recovery point objectives (RPO) define the acceptable data loss windows, typically measured in seconds, which control backup frequency and replication lag across regions.

Failover response SLAs define the trigger window for degraded nodes. If a region drops below the SLA health thresholds, failover initiates according to RTO and latency constraints.

All SLA metrics, such as uptime, latency, and recovery, are continuously monitored, and the architecture is designed to meet these thresholds.

Contact

Don't like forms?

Shoot us an email at [email protected]