What Is an Enterprise Load Balancer?

An enterprise load balancer is a device or service that distributes network traffic across multiple servers. It acts as a traffic proxy that automatically routes incoming requests, potentially millions per second, to the most appropriate server based on availability, capacity, and health status. Load balancer capacity is measured in Gbps (gigabits per second) for bandwidth and RPS (requests per second) for processing capability.

Load balancers operate at Layer 4 (the transport layer), Layer 7 (the application layer), or both layers of the OSI model (Open Systems Interconnection, a seven-layer network communication framework). Each layer handles different aspects of traffic management.

They can be deployed in various configurations, including hardware appliances, virtual instances, or cloud services, and can distribute traffic across web servers, application servers, database clusters, or between different geographic regions.

Load balancers work by acting as the entry point for incoming traffic, monitoring server health, and routing requests accordingly. Their routing can be static, following fixed rules, or dynamic, adapting to real-time server conditions.

Various algorithms drive load balancing decisions, including round robin, least connections, and IP hash methods, each optimizing for different priorities like even distribution or session persistence.

Benefits include high availability, improved performance, scalability, and security while preventing system overloads during peak usage times.

Enterprise Load Balancers are typically set up and maintained by network engineers or system administrators. However, web developers with DevOps experience can also configure and manage them, especially in cloud environments where load balancing is offered as a service.

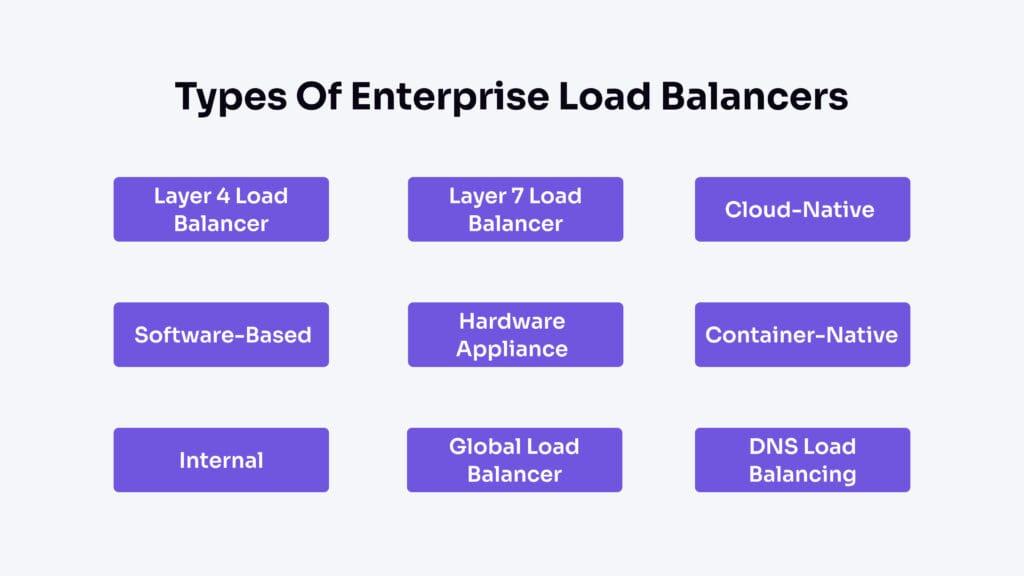

What are the Types of Enterprise Load Balancers?

There are various types of enterprise load balancers: Layer 4, Layer 7, cloud-native, software-based, hardware appliance, container-native, internal, global, and DNS load balancing. Each one is designed to meet specific technical requirements, workloads, and environments, such as handling different network layers, application types, performance needs, and security features.

Layer 4 Load Balancer

A Layer 4 load balancer works at the transport layer, which handles end-to-end communication using the TCP and UDP protocols, including reliable data transfer and flow control in the case of TCP. The transport layer breaks data into smaller segments, but a Layer 4 load balancer does not inspect the actual content of that data. Instead, it makes forwarding decisions using information in the network and transport layer headers, such as source and destination IP addresses and ports.

Traffic is distributed using simple algorithms, such as round robin (which sends requests to each server in turn) or least connections (which picks the server with the fewest active connections), combined with network-level details. This allows for fast distribution of requests without analyzing the application data.
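As a rough illustration of header-only forwarding, the sketch below keeps a table that maps each connection, identified by its source and destination IP addresses and ports, to a backend chosen by round robin. The class name, backend addresses, and client addresses are hypothetical, and real Layer 4 load balancers work on packets rather than Python objects.

```python
from itertools import cycle

# Hypothetical backend addresses, used only for illustration.
BACKENDS = ["10.0.0.11:80", "10.0.0.12:80", "10.0.0.13:80"]


class Layer4Balancer:
    """Toy model: picks a backend per connection using header fields only."""

    def __init__(self, backends):
        self._next_backend = cycle(backends)  # round robin over the backends
        self._flows = {}  # (src_ip, src_port, dst_ip, dst_port) -> backend

    def route(self, src_ip, src_port, dst_ip, dst_port):
        flow = (src_ip, src_port, dst_ip, dst_port)
        # Packets that belong to a known connection stay on the same backend.
        if flow not in self._flows:
            self._flows[flow] = next(self._next_backend)
        return self._flows[flow]


lb = Layer4Balancer(BACKENDS)
first = lb.route("203.0.113.5", 50001, "198.51.100.1", 80)
second = lb.route("203.0.113.9", 50002, "198.51.100.1", 80)
again = lb.route("203.0.113.5", 50001, "198.51.100.1", 80)
print(first, second, again)  # 10.0.0.11:80 10.0.0.12:80 10.0.0.11:80
```

Note that the payload never appears in the routing decision, which is why this style of balancing is fast but cannot route by URL or cookie.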

Some examples of Layer 4 Load Balancers include F5 Networks (using N-Path technology), Kemp LoadMaster, Brocade (using Direct Server Return), Barracuda Load Balancer, A10 Networks (using Direct Server Return), and Free LoadMaster.

Here are some pros and cons of a Layer 4 Load Balancer:

Pros:

- High speed and low latency, as it does not inspect packet contents.

- Efficient for simple, high-volume traffic distribution.

- Supports session persistence using IP and port information.

Cons:

- Cannot make decisions based on application content or user data.

- Doesn’t have features like content caching, SSL termination, or application-level health checks.

Layer 7 Load Balancer

A Layer 7 load balancer operates at the application layer, the highest level of the OSI model, handling protocols like HTTP and HTTPS. Unlike Layer 4 load balancers that route traffic based on IP addresses and ports, Layer 7 load balancers inspect the actual content of each request, such as URLs, HTTP headers, cookies, and data in the body.

Because it reads this application-specific data, a Layer 7 load balancer can make routing decisions that basic network information such as IP addresses and ports cannot support, for example sending all requests for a given URL path to a dedicated group of servers.
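The content-based routing described above can be sketched in a few lines. This is a simplified illustration, not the configuration format of any real product: the `Request` type, the path prefixes, and the pool names are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    path: str
    headers: dict = field(default_factory=dict)


def choose_pool(request: Request) -> str:
    """Pick a (hypothetical) server pool from application-level request data."""
    # Route by URL path first.
    if request.path.startswith("/api/"):
        return "api-servers"
    if request.path.startswith("/static/"):
        return "static-servers"
    # Then route by an HTTP header.
    if "mobile" in request.headers.get("User-Agent", "").lower():
        return "mobile-web-servers"
    return "web-servers"


print(choose_pool(Request("/api/orders")))                            # api-servers
print(choose_pool(Request("/", {"User-Agent": "ExampleMobile/1.0"})))  # mobile-web-servers
```

Once a pool is chosen, a balancing algorithm such as round robin would typically select the individual server inside that pool.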

A Layer 7 load balancer enhances performance through caching and content manipulation, blocks harmful requests, handles SSL termination, and ensures users stay connected to the same server during their session. Still, it can introduce latency due to content inspection, needs stronger hardware, and adds security exposure because the SSL certificates and their private keys must be stored on the load balancer.

Within Layer 7, application and HTTP load balancers are common subtypes. Both operate at the application layer, but HTTP load balancers specifically handle HTTP protocol traffic, making routing decisions based on HTTP-specific details such as URLs or headers.

Application load balancers, a broader category, can manage various application protocols (including HTTP). They can route requests based on each request’s specific content or purpose.

Both types use the rich information available at Layer 7 to make intelligent routing choices, improving performance and reliability for users accessing websites and applications.

Common Layer 7 load balancers include NGINX, HAProxy, and AWS Application Load Balancer.

There are several pros and cons of Layer 7 Load Balancer:

Pros:

- Intelligent routing based on application data (for example, URL, HTTP headers, user session).

- Supports caching and content manipulation for improved performance.

- Blocks malicious requests and provides SSL termination.

- Maintains user connections to the same server throughout their session.

Cons:

- Packet inspection introduces latency.

- Demands more powerful hardware and processing capacity.

- Increases security exposure, since SSL certificates and their private keys must be stored on the load balancer.

Cloud-Native

A cloud-native load balancer is a software-based system designed to distribute network traffic across multiple servers or containers in a cloud environment. It monitors server health entirely within the cloud, dynamically routing incoming requests based on algorithms such as round robin or least connections, ensuring no single server is overwhelmed.

Cloud-based load balancers offer great scalability, adjusting to demand without hardware purchases. They can scale by using software that runs on virtual machines or in the cloud, so more capacity can be added easily whenever needed.

Automated failover ensures high availability, while the pay-as-you-go model eliminates hardware costs. But while initially cost-effective, expenses can grow significantly with increased traffic or premium feature usage.

Services like Amazon’s ELB, Google Cloud Load Balancing, and Microsoft Azure Load Balancer are popular choices. These tools automatically handle traffic distribution and work well with other cloud services to keep your applications running smoothly.

Key features of a cloud-native load balancer are the following:

- Automatically adjusts the number of servers based on visitor traffic.

- Health checks for backend servers and containers.

- Supports multiple protocols (HTTP(S), TCP, UDP).

- SSL/TLS termination and security integrations.

- Integration with cloud-native tools like Kubernetes and service mesh.

- Global and regional load balancing capabilities.

Along with features, there are some pros and cons to consider when using a cloud-native load balancer:

Pros:

- High scalability and flexibility.

- Improved performance and reduced latency.

- Automated failover and high availability.

- Reduced operational costs compared to hardware solutions.

Cons:

- Limited customization compared to self-managed solutions.

- Potentially complex setup in multi-cloud or hybrid environments.

- Costs can increase with traffic volume and the use of advanced features.

Software-Based Load Balancer

A software-based load balancer is an application that distributes incoming network traffic across multiple servers. It runs on standard servers, virtual machines, or in the cloud, and does not require specialized hardware.

When a client sends a request (such as accessing a website), the software load balancer receives it and uses algorithms (like round robin, least connections, or IP hash) to decide which server should handle the request. Before forwarding, it checks the health of servers to avoid sending traffic to any that are down or overloaded. The chosen server processes the request and sends the response back through the load balancer to the client.
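A minimal sketch of that request flow, assuming round robin as the algorithm and a simple up/down flag as the health check result. The class and server names are hypothetical, and a real balancer would run health checks in the background rather than rely on manual calls.

```python
from itertools import cycle


class SoftwareBalancer:
    """Toy balancer: round robin over servers that pass their health check."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)  # updated by health checks
        self._rotation = cycle(self.servers)

    def mark_down(self, server):
        self.healthy.discard(server)

    def mark_up(self, server):
        if server in self.servers:
            self.healthy.add(server)

    def pick(self):
        if not self.healthy:
            raise RuntimeError("no healthy servers available")
        # Walk the rotation, skipping servers that are currently down.
        for _ in range(len(self.servers)):
            server = next(self._rotation)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


lb = SoftwareBalancer(["app-1", "app-2", "app-3"])
lb.mark_down("app-2")  # simulate a failed health check
picks = [lb.pick() for _ in range(4)]
print(picks)  # ['app-1', 'app-3', 'app-1', 'app-3']
```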

Software-based load balancers offer cost-effective flexibility to scale instances and support automation and disaster recovery. However, they may deliver lower raw performance than hardware alternatives: their throughput and efficiency are limited by the general-purpose server hardware and operating system they run on, and they lack the specialized processing and acceleration features found in dedicated hardware appliances.

Popular software load balancers include HAProxy, NGINX Plus, and Avi Vantage. For instance, HAProxy is widely used for TCP/HTTP load balancing on Linux, offering features like health checks, SSL termination, and advanced routing.

Here are some common features of software-based load balancers:

- Advanced traffic distribution algorithms.

- Health checks to ensure only healthy servers receive traffic.

- SSL termination (handling encryption/decryption).

- Autoscaling to adjust resources based on demand.

- Content-based routing and application-layer controls.

The pros and cons of software-based balancers to consider:

Pros:

- Lower cost than hardware solutions.

- High flexibility and easy integration with existing infrastructure.

- Can add or remove instances as needed.

- Quick deployment and updates.

- Supports disaster recovery and automation.

Cons:

- May have lower raw performance compared to hardware appliances.

- Dependent on the underlying server or VM performance.

Hardware Appliance Load Balancer

A hardware appliance load balancer is a physical device built specifically to distribute incoming network traffic across multiple backend servers, ensuring no single server becomes overwhelmed and that applications remain available and responsive.

It sits between clients and backend servers, directing each request to the most appropriate server based on predefined algorithms such as round robin or least connections, combined with server health checks.

Hardware load balancers operate as dedicated, rack-mounted units with optimized internal components, such as specialized CPUs and advanced chipsets, for high-speed processing and consistent, predictable performance.

They often include features like SSL termination, health monitoring, and traffic shaping, and are typically deployed on-premises in data centers, sometimes in pairs for high availability. At the same time, hardware appliance-based load balancers have high upfront and maintenance costs and require physical installation and on-site management.

Some examples of hardware appliances are F5 BIG-IP LTM, Citrix ADC, A10 Thunder ADC, and Kemp LoadMaster.

Key features of hardware load balancers include the following:

- High throughput and low latency due to purpose-built hardware.

- Advanced security and traffic management capabilities, such as SSL offloading and DDoS protection.

- Turnkey setup with a pre-packaged operating system and user interface.

There are several pros and cons of a hardware appliance load balancer.

Pros:

- Superior, predictable performance and reliability.

- Dedicated hardware ensures consistent results, unaffected by other workloads.

- Enhanced security and advanced traffic management features.

Cons:

- High upfront and maintenance costs; scaling requires purchasing more hardware.

- Less flexibility and scalability compared to software solutions.

- Physical installation and on-site management required.

Container-Native Load Balancer

A container-native load balancer is a type of load balancer designed to distribute network traffic directly to containers (small, isolated environments for running applications) inside a Kubernetes cluster (an automated container management system), rather than routing traffic first through the underlying virtual machines (VMs) or nodes hosting those containers. This approach makes the load balancer “aware” of the containers themselves, allowing for more precise and efficient traffic distribution.

Traditional load balancers send incoming requests to nodes, and then those nodes forward the traffic to containers using internal routing rules (like iptables). This adds an extra network hop and can increase latency. In contrast, a container-native load balancer uses a feature called Network Endpoint Groups (NEGs), which register the IP addresses of the containers as endpoints. The load balancer can then send traffic directly to the container that should handle the request, skipping the node-level routing.

A container-native load balancer delivers traffic faster and more efficiently, offers more accurate health checks, and is better suited for modern microservices applications, with simpler troubleshooting. Still, it may not support all load balancer types and may require specific cluster setups.

Some examples of container-native load balancers include Google Kubernetes Engine’s (GKE) container-native load balancing using NEGs and Ingress, and similar solutions from other cloud providers that target containers directly.

The features of a container-native load balancer include the following:

- Direct traffic routing from the load balancer to containers.

- Even distribution of traffic among healthy containers.

- Improved health checks, since the load balancer checks the container itself, not just the node.

- Lower latency and better network performance due to fewer network hops.

- Support for advanced features like session affinity (keeping a user’s requests going to the same container), security, and integration with cloud-native services.

Here are some pros and cons of the container-native load balancer:

Pros:

- Faster and more efficient traffic delivery

- More accurate health checks

- Better support for modern, microservices-based applications

- Simplified troubleshooting and monitoring

Cons:

- May not support all types of load balancers (for example, some network-level load balancers are not compatible)

- Requires specific cluster configurations (like VPC-native clusters)

- Some manual configurations may be needed for advanced use cases

Internal Load Balancer

An internal load balancer is a system that distributes network traffic among servers within a private network, making services available only to resources inside that network, not to the public internet.

An internal load balancer sits between client devices and a group of servers. When a request comes in, the load balancer decides which server should handle it, using an algorithm such as round robin together with health checks. If one server fails, the load balancer automatically redirects traffic to other working servers, keeping the service running smoothly.

While this load balancer increases reliability, performance, and security by distributing traffic and hiding services from the public, it also adds complexity and may need extra resources or expertise to manage.

Examples of internal load balancers include Azure Internal Load Balancer and AWS Internal Elastic Load Balancer, both of which are used to manage traffic within cloud-based virtual networks.

Here are the key features of an internal load balancer:

- Uses a private IP address, so only devices inside the network can access it.

- Distributes requests evenly to prevent any single server from being overloaded.

- Continuously checks server health and redirects traffic if a server goes down.

- Can scale by adding or removing servers without downtime.

Along with features, there are some pros and cons to consider when using an internal load balancer:

Pros:

- Increases reliability by preventing single points of failure.

- Improves performance by balancing the workload.

- Enhances security by keeping services hidden from the public internet.

Cons:

- Adds complexity to network setup and management.

- May require additional resources or expertise to configure and monitor.

Global Load Balancer

A global load balancer is a system, usually software or hardware, that routes incoming internet traffic across multiple servers located in different geographic regions. Its main goal is to direct users to the server that can provide the fastest and most reliable experience, often by sending them to the closest or healthiest server available.

If a server in one region fails or becomes overloaded, the global load balancer automatically reroutes traffic to another healthy server elsewhere, ensuring continuous service.
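The selection and failover behavior can be sketched as "pick the lowest-latency region that is still healthy". The region names and latency figures below are made up for illustration, and the sketch assumes latency measurements are already available as input.

```python
from dataclasses import dataclass


@dataclass
class Region:
    name: str
    latency_ms: float  # assumed: measured latency from the user to this region
    healthy: bool = True


def choose_region(regions):
    """Return the healthy region with the lowest latency for this user."""
    candidates = [r for r in regions if r.healthy]
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=lambda r: r.latency_ms)


regions = [
    Region("eu-west", 20.0),
    Region("us-east", 95.0),
    Region("ap-south", 180.0),
]
print(choose_region(regions).name)  # eu-west

regions[0].healthy = False          # simulate an outage in the closest region
print(choose_region(regions).name)  # us-east
```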

Global load balancers distribute internet traffic across multiple servers worldwide, giving users faster performance and greater reliability regardless of location. However, they require more technical expertise and investment than local solutions.

Examples of global load balancers include AWS Global Accelerator, Google Cloud Load Balancing, and Azure Traffic Manager.

Here are the key features of a global load balancer:

- Distributes traffic based on server health, location, and performance

- Reduces latency (the delay before a response) by sending users to the nearest server

- Provides automatic failover (switching to backup servers if one fails)

- Monitors server health in real time

- Scales easily to handle more users by adding new servers

The pros and cons of a global load balancer are the following:

Pros:

- Improves speed and reliability for users around the world

- Minimizes downtime by rerouting traffic during outages

- Handles sudden spikes in traffic smoothly

- Enhances user experience by reducing delays

Cons:

- More complex to set up and manage than local load balancers

- It can be costly, especially for global infrastructure

- Requires careful configuration to handle different regions effectively

DNS Load Balancing

A DNS load balancer is a system that manages website traffic across multiple servers by cycling through IPs, weighting servers based on capacity, or directing users to the closest server for faster response times.

When a user types a website address (like example.com) into their browser, the DNS resolver processes this request and looks up the domain’s IP addresses. Instead of returning just one IP address, the DNS load balancer evaluates traffic distribution rules.

It then returns the IP address of the most suitable server, preventing any single server from overloading. The visitor’s browser connects to this designated server to load the website. However, DNS caching can slow down traffic redistribution after failures, and basic DNS load balancing lacks real-time health checks, so traffic may still go to offline servers.
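A minimal sketch of the simplest rule, round-robin DNS, in which each query gets the same set of addresses in a rotated order so that successive clients tend to connect to different servers. The resolver class is hypothetical and the addresses come from documentation ranges. Caching and TTLs are deliberately left out.

```python
from collections import deque


class RoundRobinDNS:
    """Toy resolver: rotates the address list after every query."""

    def __init__(self, records):
        self._records = {name: deque(ips) for name, ips in records.items()}

    def resolve(self, name):
        ips = self._records[name]
        answer = list(ips)
        ips.rotate(-1)  # the next query starts with the next address
        return answer


dns = RoundRobinDNS({"example.com": ["192.0.2.10", "192.0.2.11", "192.0.2.12"]})
first_choices = [dns.resolve("example.com")[0] for _ in range(4)]
print(first_choices)
# ['192.0.2.10', '192.0.2.11', '192.0.2.12', '192.0.2.10']
```

Because resolvers and browsers cache answers, the actual spread of traffic is less even than this rotation suggests.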

Popular DNS load balancing services include Amazon Route 53, F5 Distributed Cloud DNS Load Balancer, Google Cloud DNS, and NS1 Connect.

The features of a DNS load balancer include the following:

- Operates at the DNS layer, which is lightweight and scalable.

- Uses methods like round-robin (cycling through IPs), weighted distribution (sending more traffic to stronger servers), and geo-location-based routing (directing users to the nearest server).

- Can improve fault tolerance by rerouting traffic away from failed servers.

- Does not require dedicated hardware load balancers.

Here are some pros and cons of the DNS load balancer:

Pros:

- Simple and cost-effective for distributing traffic globally.

- Enhances performance by directing users to the nearest or least busy server.

- Scalable and reduces single points of failure.

Cons:

- DNS caching can delay traffic redistribution.

- Basic DNS load balancing lacks real-time health checks.

- Users might be routed to different servers during a session, which can cause issues for some applications.

How Does an Enterprise Load Balancer Work?

Load balancers work by sitting between client devices and backend servers, receiving all incoming requests and routing them to the most appropriate server. When a request arrives, the load balancer determines which server should handle it based on its configured algorithm. The workflow of a load balancer depends entirely on its algorithm.

Load balancing approaches fall into two main types: static and dynamic.

Static load balancers distribute traffic according to predetermined rules without considering the current state of servers. They use algorithms like Round Robin (sending requests to servers in sequence) or Weighted Round Robin (assigning more traffic to servers with higher capacity). These are simpler to implement but less adaptable to changing conditions.

Dynamic load balancers make decisions based on real-time server conditions like current load, response time, or available resources. They use algorithms such as Least Connections (sending traffic to servers with the fewest active connections) or Resource-Based (distributing based on CPU and memory availability). Dynamic balancers provide better performance optimization but are more complex to implement.

What are Enterprise Load Balancer Algorithms?

Enterprise load balancer algorithms are the predefined rules or methods used by load balancers to route incoming network traffic across multiple servers, ensuring no single server becomes overloaded, thus improving application performance and reliability.

Common types of these algorithms include Round Robin, Least Connections, IP Hash, Weighted Round Robin, and Least Response Time.

Round Robin

Round Robin is a simple scheduling algorithm that works by assigning each incoming task or request to a different server in a fixed, cyclic order, like a queue rotating through the servers one by one. When a request arrives, it goes to the next server in the list, then the following request goes to the next server, and so on, ensuring tasks are evenly distributed across all servers without considering their current load or task duration.

This queue-like approach means each server gets tasks assigned sequentially, cycling back to the first server after the last one, which helps balance the workload fairly when servers have similar capacity.
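The cyclic order is easy to see in a few lines of code. This is a bare sketch with hypothetical server names that ignores health checks and concurrency.

```python
from itertools import cycle

servers = ["server-a", "server-b", "server-c"]  # hypothetical server names
rotation = cycle(servers)  # endless iterator that wraps back to the start


def next_server():
    """Return the next server in the fixed cyclic order."""
    return next(rotation)


assignments = [next_server() for _ in range(5)]
print(assignments)
# ['server-a', 'server-b', 'server-c', 'server-a', 'server-b']
```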

It is useful in multitasking operating systems, load balancing web servers, and network traffic distribution, where equal resource sharing is needed.

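Some pros of Round Robin are fairness and simplicity. The cons are that it ignores each server’s current load and capacity, so it can overload slower servers, and that it distributes work unevenly when requests differ widely in processing time. When used for CPU scheduling, it can also cause frequent context switching and reduced efficiency if time slices are too small.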

Least Connections

The Least Connections algorithm is a dynamic load balancing method that directs each new request to the server with the fewest active connections at that moment. It continuously tracks how many connections each server is handling and assigns traffic accordingly, ensuring no server gets overloaded.
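A minimal sketch of that bookkeeping, assuming the balancer is told when each connection opens and closes. The class and method names are illustrative, and ties are broken by the order in which servers were listed.

```python
class LeastConnectionsBalancer:
    """Tracks active connections and picks the least busy server."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # Choose the server with the fewest active connections right now.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call this when a connection to the server closes.
        if self.active[server] > 0:
            self.active[server] -= 1


lb = LeastConnectionsBalancer(["a", "b", "c"])
opened = [lb.acquire() for _ in range(3)]  # one connection each
lb.release("b")                            # server b finishes its request
print(opened, lb.acquire())                # ['a', 'b', 'c'] b
```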

The Least Connections algorithm suits environments with uneven or variable request loads, such as web hosting, cloud services, e-commerce platforms, and application servers, where request processing times differ significantly.

The pros of Least Connections are that it uses resources efficiently, adapts to real-time load changes, prevents bottlenecks, and improves response times.

The cons are that it adds connection-tracking overhead, assumes every connection costs about the same, and can send sudden bursts of traffic to newly added servers.

IP Hash

IP hash is a load-balancing algorithm that applies a hash function to a client’s IP address to assign each client to a specific server consistently. When a request arrives, the load balancer calculates a hash from the client’s IP and uses it to pick a server, ensuring all requests from that IP go to the same server. This is useful for session persistence in web apps, banking, or real-time communication.
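A minimal sketch of the idea, assuming a fixed server list. The function name and addresses are illustrative. It uses `hashlib` rather than Python's built-in `hash()`, because the built-in string hash is randomized per process and would not give stable results across restarts.

```python
import hashlib


def pick_server(client_ip, servers):
    """Map a client IP to one server using a stable hash of the address."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["app-1", "app-2", "app-3"]  # hypothetical server names
chosen = pick_server("203.0.113.42", servers)

# The same client IP always lands on the same server.
print(chosen == pick_server("203.0.113.42", servers))  # True
```

Because the index depends on `len(servers)`, adding or removing a server changes the mapping for many clients at once.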

Pros of IP Hash include simplicity, easy implementation, and consistent routing for each user. However, its cons include uneven load if many clients hash to the same server and poor adaptation when servers are added or removed.

Weighted Round Robin

Weighted Round Robin (WRR) is a scheduling algorithm that distributes tasks or network requests among multiple servers or resources based on assigned weights. The weight reflects how much load a server can handle compared to others, so WRR allocates more tasks to servers with higher weights, ensuring those with greater capacity handle more load.

Weight and capacity are related but not exactly the same: capacity is the actual performance capability or resource power of a server (such as CPU, memory, bandwidth), while weight is a configured value that reflects this capacity and is used by the WRR algorithm to distribute tasks proportionally. In practice, weights are assigned in proportion to the servers’ capacities to achieve balanced load distribution.
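The sketch below shows one common way to turn weights into a request order, often called smooth weighted round robin. It is one possible variant rather than the only way WRR is implemented, and the server names and weights are made up for the example.

```python
class SmoothWeightedRoundRobin:
    """Spreads requests in proportion to weights while interleaving servers."""

    def __init__(self, weights):
        self.weights = dict(weights)                    # configured weight per server
        self.current = {server: 0 for server in weights}  # running score per server
        self.total = sum(self.weights.values())

    def next_server(self):
        # Every server earns its weight, then the highest score wins.
        for server, weight in self.weights.items():
            self.current[server] += weight
        chosen = max(self.current, key=self.current.get)
        # The winner gives back the total weight so others get their turn.
        self.current[chosen] -= self.total
        return chosen


wrr = SmoothWeightedRoundRobin({"large": 3, "small": 1})
picks = [wrr.next_server() for _ in range(8)]
print(picks.count("large"), picks.count("small"))  # 6 2
```

With weights of 3 and 1, the larger server receives three of every four requests, matching the proportional distribution described above.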

WRR is useful in load balancing web servers, network traffic, and content delivery networks where servers have different capabilities.

Its pros include efficient resource use and fairness, while its cons involve the need for accurate weight assignment and manual updates if server capacities change. WRR improves performance but requires careful configuration to maintain balance.

Least Response Time

Least Response Time is a load balancing algorithm that directs each new request to the server with the fewest active connections and the lowest average response time, measured from when a request is sent to when the first response is received.
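How the two signals are combined varies between products. The sketch below assumes one simple scoring rule, multiplying the number of active connections (plus one) by the average response time and picking the lowest score. The rule, the type, and the figures are assumptions for illustration, not a specific vendor's formula.

```python
from dataclasses import dataclass


@dataclass
class ServerStats:
    name: str
    active_connections: int
    avg_response_ms: float  # assumed: time to first response, averaged


def pick_least_response_time(stats):
    """Pick the server with the lowest combined load and latency score."""
    # Assumed scoring rule: the +1 keeps idle servers from all scoring zero.
    return min(stats, key=lambda s: (s.active_connections + 1) * s.avg_response_ms)


stats = [
    ServerStats("app-1", active_connections=10, avg_response_ms=40.0),  # score 440
    ServerStats("app-2", active_connections=4, avg_response_ms=60.0),   # score 300
    ServerStats("app-3", active_connections=4, avg_response_ms=120.0),  # score 600
]
print(pick_least_response_time(stats).name)  # app-2
```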

Least response time can be used for real-time and low-latency applications like online gaming or financial trading, where fast responses are critical.

Its pros are optimized performance and dynamic adjustment to changing server loads, but it is more complex to implement, requires constant monitoring, and can be harder to troubleshoot if server conditions change rapidly.

What Are the Benefits of a Load Balancer for an Enterprise?

Benefits include high availability, improved performance, scalability, security, prevention of system overloads during peak usage times, session persistence, disaster recovery, and cost efficiency.

- High availability: By distributing network traffic across multiple servers, a load balancer ensures that applications remain accessible even if one or more servers fail. This minimizes downtime and prevents single points of failure.

- Improved performance: Load balancers optimize resource usage by directing requests to the most appropriate servers based on real-time availability, capacity, and health status. This results in faster response times and a better user experience during peak usage.

- Easier scalability: Enterprises can easily scale their infrastructure up or down by adding or removing servers behind the load balancer without causing downtime.

- Security enhancements: Load balancers can block malicious requests, provide SSL/TLS termination, and integrate with security tools, helping to protect applications from attacks and unauthorized access.

- Prevention of system overloads: By monitoring server health and distributing traffic intelligently, load balancers prevent any single server from becoming overloaded, which helps keep consistent application performance.

- Session persistence: Certain algorithms (such as IP hash) ensure that a user’s requests are directed to the same server during a session, which is important for applications requiring session consistency.

- Disaster recovery and automated failover: Load balancers support disaster recovery by rerouting traffic if a server or data center becomes unavailable, ensuring business continuity.

- Cost efficiency: Especially with software-based and cloud-native load balancers, organizations can reduce operational costs by leveraging existing infrastructure and paying only for what they use.

These benefits depend on a professional setup and ongoing optimization, ensuring that the load balancer is properly configured to match the organization’s specific needs and infrastructure.

Who Can Set Up an Enterprise Load Balancer?

Full-stack developers are well-suited for this task because they handle both frontend and backend development, including configuring servers and infrastructure components like load balancers.

In the context of enterprise full-stack development, their broad skill set allows them to integrate and manage these systems, ensuring smooth traffic distribution and high server availability.

While network engineers or specialized DevOps professionals can also set up load balancers, full-stack developers often have the necessary knowledge to implement and maintain them within application deployments.
